Ever since Gradients launched its tournament system, something remarkable has been unfolding across Subnet 56. What began as a race to optimize training methods has evolved into a full-blown ecosystem of open competition, where the best ideas win, evolve, and compound.

Today, Gradients stands as the world’s leading AutoML platform — not because it locked away its best secrets, but because it opened them.

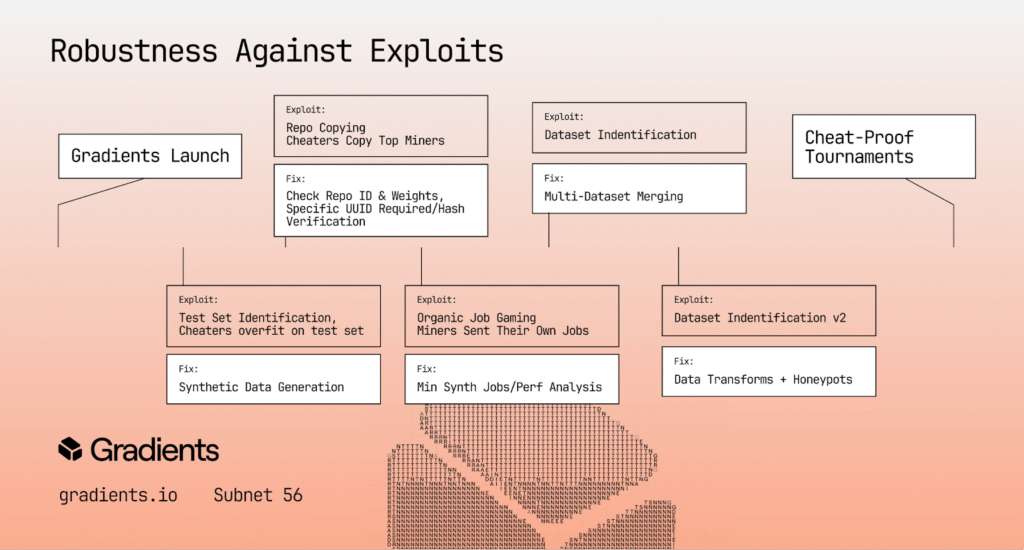

The Early Challenge: Exploits and Incentives

When the subnet launched, it quickly became clear that some miners would search for loopholes to win emissions without contributing real value. They copied others’ work, overfitted datasets, and found shortcuts that looked clever on paper but damaged the network’s integrity.

Gradients responded with a steady stream of innovations designed to close every gap – each update making the system more resilient, fair, and intelligent.

Step One: Building Synthetic Datasets

In the beginning, some miners discovered the original datasets and trained directly on the test data. Gradients fixed this by generating synthetic datasets using large language models that mimicked the same data distribution but were impossible to identify.

Miners were now evaluated on both real and synthetic data. Anyone who scored well on the real data but suspiciously poorly on the synthetic tests was immediately disqualified.
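One way to picture this check is to compare each miner's loss on the real test set with its loss on the synthetic one. The sketch below is illustrative only: the function name, the choice of loss as the metric, and the 1.5x ratio threshold are assumptions, not Gradients' published rule.

```python
def is_suspicious(real_loss: float, synthetic_loss: float, max_ratio: float = 1.5) -> bool:
    """Flag a miner whose synthetic-data loss is far worse than its real-data loss.

    Lower loss is better. max_ratio is a hypothetical tolerance, not a published value.
    """
    if real_loss <= 0:
        raise ValueError("real_loss must be positive")
    return synthetic_loss / real_loss > max_ratio


# Hypothetical scores as (real_loss, synthetic_loss).
# miner_a generalizes; miner_b looks like it memorized the real test set.
scores = {"miner_a": (0.82, 0.85), "miner_b": (0.10, 0.95)}
flagged = [name for name, (real, synth) in scores.items() if is_suspicious(real, synth)]
```

A ratio test like this penalizes the gap between the two scores rather than the absolute score, so an honest but weak model is not confused with one that trained on the test data.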

Step Two: Stopping the Copycats

Some miners started copying top performers by duplicating their public repositories. Gradients stopped this by assigning unique submission names, timestamps, and hash verifications to every model. Identical submissions were automatically detected and disqualified.

Soon after, miners tried to submit cloned repositories under slightly modified accounts. Gradients then introduced even stricter validation rules to ensure every submission came from an authentic build.
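The hash-based duplicate check described above can be sketched as follows. This is a minimal illustration, assuming a submission is a named set of files with a timestamp; the `fingerprint` and `find_duplicates` helpers and the tuple layout are hypothetical, not Gradients' validator code.

```python
import hashlib


def fingerprint(files: dict[str, bytes]) -> str:
    """Return a SHA-256 digest over file paths and contents, in sorted path order."""
    digest = hashlib.sha256()
    for path in sorted(files):
        digest.update(path.encode("utf-8"))
        digest.update(b"\0")  # separator so path and content cannot run together
        digest.update(files[path])
        digest.update(b"\0")
    return digest.hexdigest()


def find_duplicates(submissions: list[tuple[str, float, dict[str, bytes]]]) -> list[str]:
    """Return names of submissions whose content matches an earlier one.

    Each submission is (name, unix_timestamp, files); the earliest timestamp wins.
    """
    seen: dict[str, str] = {}
    duplicates = []
    for name, _timestamp, files in sorted(submissions, key=lambda s: s[1]):
        key = fingerprint(files)
        if key in seen:
            duplicates.append(name)
        else:
            seen[key] = name
    return duplicates


# Hypothetical submissions: "copycat" arrives later with identical content.
submissions = [
    ("copycat", 200.0, {"train.py": b"print('train')"}),
    ("original", 100.0, {"train.py": b"print('train')"}),
    ("independent", 150.0, {"train.py": b"print('other')"}),
]
duplicates = find_duplicates(submissions)
```

An exact hash only catches byte-identical copies, so a lightly edited clone would pass this particular check, which may be why stricter validation was needed as well.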

Step Three: Fixing Self-Mining

A few miners began submitting jobs solely so they could process their own tasks, a way to game the reward system. The team countered this by setting minimum thresholds for synthetic job acceptance and monitoring performance across independent datasets. Miners who underperformed on these synthetic jobs were flagged and removed.

Step Four: Multi-Dataset Blending

To make dataset identification nearly impossible, Gradients started merging multiple datasets into complex hybrid tasks. This forced miners to generalize rather than overfit to a single source, improving model robustness and adaptability.
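As a rough illustration of blending, the sketch below pools rows from several sources and samples from the pool so that no single source can be recognized by its order or size. The `blend_datasets` helper, the row format, and the uniform sampling are assumptions made for the example; the article does not describe the actual mixing procedure.

```python
import random


def blend_datasets(datasets: dict[str, list[str]], n_samples: int, seed: int = 0) -> list[str]:
    """Draw n_samples rows at random from the union of all source datasets."""
    rng = random.Random(seed)
    pool = [row for rows in datasets.values() for row in rows]
    if n_samples > len(pool):
        raise ValueError("n_samples exceeds the total number of rows")
    # random.sample returns the selection in random order, so source order is lost.
    return rng.sample(pool, n_samples)


# Hypothetical sources with placeholder rows.
sources = {
    "qa": ["q1", "q2", "q3"],
    "summaries": ["s1", "s2", "s3"],
    "dialogue": ["d1", "d2", "d3"],
}
task = blend_datasets(sources, n_samples=6, seed=42)
```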

Step Five: Intelligent Data Augmentation

Gradients then took it further, generating unique datasets with randomized linguistic transformations that even top AI models couldn’t easily reverse-engineer. These included:

a. Randomized sentence structures

b. Inserted unique IDs and tokens

c. Word-level modifications like reversals, truncations, and repeats

d. Conditional transformations and case alterations

These small but clever changes ensured miners had to truly learn, not memorize.
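The transformations listed above can be sketched in a few lines. This is a toy example under stated assumptions: the `augment` function, the specific operations, the length-based uppercase rule, and the `<id:...>` token format are all invented for illustration and are not taken from Gradients' pipeline.

```python
import random


def augment(sentence: str, rng: random.Random) -> str:
    """Apply random word-level edits, a conditional case change, and a unique ID token."""
    out = []
    for word in sentence.split():
        op = rng.choice(["keep", "reverse", "truncate", "repeat"])
        if op == "reverse":
            out.append(word[::-1])
        elif op == "truncate":
            out.append(word[: max(1, len(word) // 2)])  # keep at least one character
        elif op == "repeat":
            out.extend([word, word])
        else:
            out.append(word)
    # Conditional transformation (hypothetical rule): uppercase tokens longer than 5 characters.
    out = [w.upper() if len(w) > 5 else w for w in out]
    # Insert a unique ID token at a random position.
    tag = f"<id:{rng.getrandbits(32):08x}>"
    out.insert(rng.randrange(len(out) + 1), tag)
    return " ".join(out)


# Seeding the generator makes a given task reproducible for validators.
example = augment("the quick brown fox", random.Random(1))
```

Because the edits depend on a seed the miner never sees, the transformed text cannot be matched back to a known public dataset by simple lookup.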

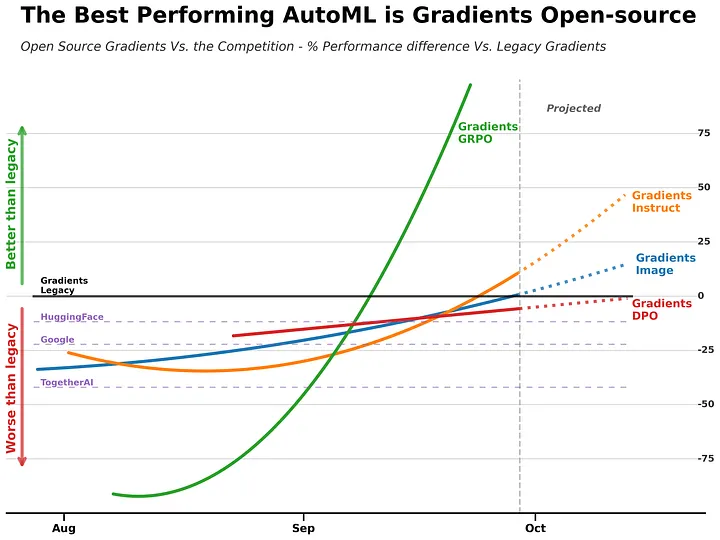

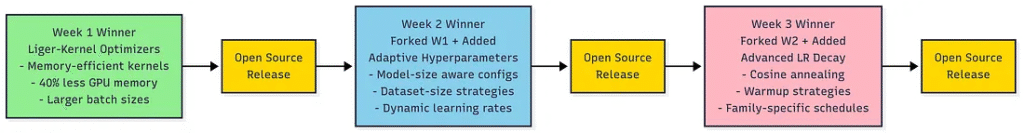

The Turning Point: Open-Source Tournaments

The real transformation came with the launch of Gradients’ open-source tournament system. Instead of hiding behind closed codebases, miners now compete publicly, registering their repositories and sharing their full training scripts when they win.

This shift changed everything. Open-source tournament miners have now reached, and even surpassed, the performance of Gradients’ legacy closed-source systems.

Why Open-Source Works

In traditional AI systems, breakthroughs stay hidden. In Gradients’ tournament model, every win becomes a foundation for the next innovation. When a miner wins, their method becomes public. The next competitor builds on it, improves it, and competes again.

It’s a virtuous cycle where progress compounds.

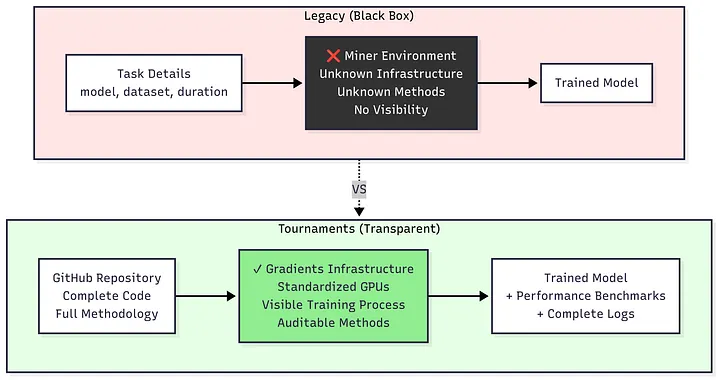

From Black Box to Transparent AI

For enterprises, this shift solved a major problem: trust. Instead of sending private data into opaque systems, they can now see exactly how models are trained, which datasets are used, and what performance they achieve, all verifiable through open infrastructure.

The Transition: Two Weeks to a New Era

With open tournaments proving their worth, Gradients is now moving entirely to this model. Within two weeks, all legacy systems will be retired. Every client model will be trained using the latest tournament-winning approaches, hosted securely on Gradients’ infrastructure.

What It Means for Miners

Miners no longer need to run expensive infrastructure 24/7. Gradients provides the compute and the miners provide innovation. Every tournament produces a new champion whose code becomes the baseline for future rounds. This lowers entry barriers, expands participation, and accelerates collective improvement.

Incentives that Reward Real Innovation

The new system adopts a tokenomics model that pays miners based on how much they outperform others. The greater the performance margin, the higher the emissions reward. It’s not just about winning; it’s about winning decisively.
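To make the margin idea concrete, here is a minimal sketch that splits emissions in proportion to how far each miner's score sits above a baseline. The `margin_weights` function, the baseline parameter, and the linear split are assumptions for illustration; the article does not give the actual emissions formula.

```python
def margin_weights(scores: dict[str, float], baseline: float) -> dict[str, float]:
    """Split emissions in proportion to each miner's margin over a baseline score.

    Higher score is better. Miners at or below the baseline receive nothing.
    """
    margins = {name: max(0.0, score - baseline) for name, score in scores.items()}
    total = sum(margins.values())
    if total == 0:
        return {name: 0.0 for name in scores}
    return {name: margin / total for name, margin in margins.items()}


# Hypothetical scores: the champion's margin is three times the challenger's,
# so it receives roughly three times the share.
weights = margin_weights(
    {"champion": 0.90, "challenger": 0.80, "laggard": 0.70},
    baseline=0.75,
)
```

Under a scheme like this, a narrow win earns only slightly more than second place, while a decisive win captures most of the reward.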

A New Model for AI Progress

Gradients’ journey from exploit prevention to open competition reveals something profound: decentralization doesn’t have to mean chaos. With the right incentives, it can create a system where integrity and innovation reinforce each other.

From fighting cheaters to building the world’s most transparent AutoML network, Gradients has proven one thing – the future of AI isn’t closed or controlled. It’s open, competitive, and fair.

Useful Resources

To navigate Subnet 56, visit the designated official channels:

Website: https://gradients.io/

X (formerly Twitter): https://x.com/gradients_ai
