As decentralized AI systems mature, a quiet bottleneck is becoming impossible to ignore: high-quality datasets do not scale the way compute does.

Inference can be parallelized and training can be distributed, but data generation, especially in open-ended domains, still struggles under one fundamental assumption: that every task must produce a single correct output.

On Bittensor’s Subnet 33 (ReadyAI), a new task design challenges that assumption directly.

The result is a miner primitive that treats structured variability as signal, not noise, unlocking a scalable path to dataset enrichment in adversarial, decentralized environments.

The Hidden Cost of Determinism

Most subnet designs rely on deterministic tasks for good reason. Fixed inputs, fixed outputs, and straightforward scoring make validator logic simple and robust.

This approach works well for:

a. Inference and benchmarking,

b. Compute execution and verification, and

c. Prediction and scoring tasks.

But enrichment tasks are fundamentally different; when miners are asked to enrich a document with research, context, or supplemental data, there is rarely one correct answer.

The value lies in relevance, perspective, and coverage, not reproducibility. Determinism collapses that richness and limits both scale and usefulness.

The challenge becomes structural: How can a decentralized network reward miners for producing diverse, valid outputs without sacrificing verifiability?

Introducing Persona-Conditioned Enrichment

Subnet 33’s approach is deceptively simple: fix the source, vary the lens.

Rather than asking miners to generate a single enrichment, the network conditions inference on a specific persona, or analytical perspective. Each persona represents a legitimate way of interrogating the same material.

Consider a single policy transcript examined from three perspectives:

a. An opportunistic investor surfaces distress and rezoning signals,

b. A lender focuses on refinancing risk and capital structure, and

c. A risk manager evaluates exposure and concentration.

The three outputs differ, and all three are valid. None of them is the single correct answer.

This reframes non-determinism from randomness into controlled diversity, anchored by explicit constraints.
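
To make "fix the source, vary the lens" concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not Subnet 33's actual implementation: the `Persona` and `EnrichmentTask` classes, the `build_tasks` helper, the prompt wording, and the sample transcript text are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Persona:
    name: str   # who is reading the document
    focus: str  # what that reader looks for in it


@dataclass(frozen=True)
class EnrichmentTask:
    source_id: str
    source_text: str
    persona: Persona

    def prompt(self) -> str:
        # Hypothetical prompt wording; the real task format is not specified here.
        return (
            f"You are {self.persona.name}. Focus on {self.persona.focus}.\n"
            f"Propose research queries grounded in this document:\n{self.source_text}"
        )


# The three perspectives named in the example above.
PERSONAS = [
    Persona("an opportunistic investor", "distress and rezoning signals"),
    Persona("a lender", "refinancing risk and capital structure"),
    Persona("a risk manager", "exposure and concentration"),
]


def build_tasks(source_id, source_text, personas):
    """Fan one fixed source out into one task per persona."""
    return [EnrichmentTask(source_id, source_text, p) for p in personas]


tasks = build_tasks(
    "transcript-001",  # hypothetical identifier
    "Council discussed rezoning the riverfront district.",  # hypothetical text
    PERSONAS,
)
for task in tasks:
    print(task.prompt().splitlines()[0])
```

The source text is identical across all three tasks; only the persona, and therefore the prompt, changes.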

How Validation Works Without Ground Truth

The key to making this work is shifting what validators verify.

Instead of scoring against an expected output, Subnet 33 evaluates whether each submission satisfies a quality envelope:

a. Does the output reflect the assigned persona?

b. Are the generated queries grounded in the source document?

c. Do the queries execute and return meaningful results?

d. Is the output correctly structured and attributable?

This constraint-based validation allows miners to explore the output space freely while remaining accountable. Quality emerges from alignment, not duplication.
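
The four checks above can be sketched as a set of pass/fail constraints. This is a rough illustration only: the submission fields (`miner_id`, `source_id`, `queries`), the keyword-overlap heuristics, and the `run_query` callback are assumptions made for the example, and a real validator would likely use much stronger grounding and persona checks.

```python
import re


def _terms(text):
    """Lowercased words of four or more letters, as a crude content signal."""
    return set(re.findall(r"[a-z]{4,}", text.lower()))


def check_envelope(submission, source_text, persona_terms, run_query):
    """Return one boolean per quality-envelope constraint."""
    queries = submission.get("queries")
    # d. Correctly structured and attributable to a miner and a source.
    structured = (
        isinstance(queries, list)
        and len(queries) > 0
        and all(isinstance(q, str) for q in queries)
        and bool(submission.get("miner_id"))
        and bool(submission.get("source_id"))
    )
    if not structured:
        return {"structured": False, "persona": False,
                "grounded": False, "executes": False}
    source_terms = _terms(source_text)
    return {
        "structured": True,
        # a. At least one query uses the persona's vocabulary.
        "persona": any(_terms(q) & persona_terms for q in queries),
        # b. Every query shares vocabulary with the source document.
        "grounded": all(_terms(q) & source_terms for q in queries),
        # c. Every query executes and returns at least one result.
        "executes": all(len(run_query(q)) > 0 for q in queries),
    }


# Hypothetical data and a stub search backend for demonstration.
source = "The council debated rezoning the riverfront and refinancing municipal bonds."
submission = {
    "miner_id": "miner-7",
    "source_id": "transcript-001",
    "queries": [
        "riverfront rezoning distress sales",
        "municipal bonds refinancing schedule",
    ],
}
result = check_envelope(
    submission,
    source,
    persona_terms={"distress", "rezoning"},
    run_query=lambda q: [f"stub result for {q}"],
)
print(result)
```

Note that nothing here compares the submission to an expected output. Two miners can pass with entirely different queries, which is the point of validating against constraints rather than ground truth.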

From Single Documents to Large-Scale Datasets

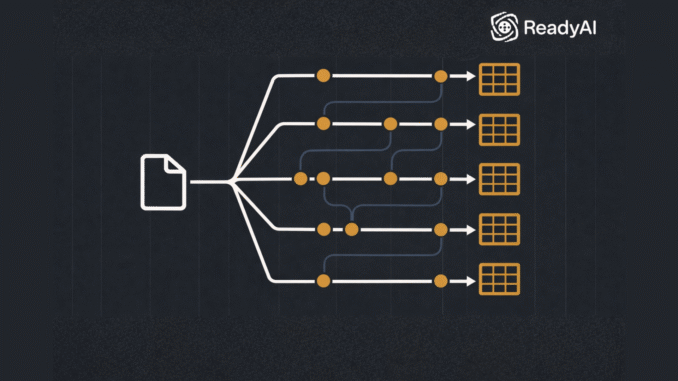

The scaling properties of this design are multiplicative: the number of enrichment paths is the number of source documents times the number of personas. A single source document, when processed through a library of personas, can produce hundreds of distinct enrichment paths, one per persona.

Each path generates queries, results, and structured metadata, all traceable back to the original source.

This means:

a. A finite set of documents yields enrichment limited only by the number of useful personas,

b. Output volume grows by adding perspectives, not data sources, and

c. The same architecture generalizes across domains.
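
The multiplicative scaling can be seen in a few lines of Python. The document and persona counts below are made-up numbers for illustration, not figures from Subnet 33.

```python
from itertools import product


def enrichment_paths(doc_ids, personas):
    """Every (document, persona) pair is one distinct enrichment path."""
    return list(product(doc_ids, personas))


docs = [f"doc-{i}" for i in range(10)]               # 10 hypothetical documents
personas = [f"persona-{j}" for j in range(200)]      # 200 hypothetical personas

paths = enrichment_paths(docs, personas)
print(len(paths))  # prints 2000

# Adding 50 personas adds 10 * 50 = 500 paths without any new source documents.
more_personas = personas + [f"persona-{j}" for j in range(200, 250)]
print(len(enrichment_paths(docs, more_personas)))  # prints 2500
```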

Subnet 33 currently applies this to real estate due to customer demand, but the primitive itself is domain-agnostic.

Training Data as a First-Class Output

One of the most compelling downstream uses is training data synthesis. As standards like llms.txt gain traction, large volumes of documentation become available in machine-readable form.

Enrichment tasks turn these static files into:

a. Persona-specific question and answer pairs,

b. Labeled retrieval datasets, and

c. Domain-adapted fine-tuning corpora.

A single documentation file can now produce training examples for developers, support engineers, compliance teams, and sales roles, without rewriting or duplicating the source material.
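
As a rough sketch of how enrichment output could become training data, the snippet below flattens persona-tagged query and result pairs into JSONL question and answer records. The record shape, field names, and sample content are assumptions for illustration, and the actual output schema of Subnet 33 may differ.

```python
import json


def to_training_records(source_id, enrichments):
    """Flatten persona-tagged enrichments into question/answer records."""
    records = []
    for enrichment in enrichments:
        # Assumes queries and results are parallel lists of equal length.
        for query, answer in zip(enrichment["queries"], enrichment["results"]):
            records.append({
                "source_id": source_id,  # keeps each example traceable
                "persona": enrichment["persona"],
                "question": query,
                "answer": answer,
            })
    return records


def to_jsonl(records):
    """Serialize records as one JSON object per line."""
    return "\n".join(json.dumps(r, sort_keys=True) for r in records)


# Hypothetical enrichments of one documentation file.
enrichments = [
    {"persona": "developer",
     "queries": ["How do I authenticate API requests?"],
     "results": ["Pass the API key in the Authorization header."]},
    {"persona": "compliance",
     "queries": ["Where is customer data stored?"],
     "results": ["Data is stored in the region selected at signup."]},
]
records = to_training_records("docs/llms.txt", enrichments)
print(to_jsonl(records))
```

Each record carries its `source_id` and `persona`, so the same file yields separate, attributable examples per role without duplicating the source itself.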

Why This Matters for Decentralized Networks

Non-deterministic enrichment solves a problem that deterministic subnets cannot: how to reward creativity and relevance without opening the door to unverifiable noise.

By embedding perspective directly into task design, ReadyAI (Subnet 33) introduces a primitive that scales with demand, supports adversarial validation, and produces data assets with real downstream value.

In practice, this means decentralized networks can move beyond verifying compute and begin manufacturing the datasets that modern AI systems actually need.

That shift may prove just as important as any gain in model size or inference speed.
