By James Macgregor

Alignment was never a solved problem, but it was often treated like a static one, where models were evaluated in isolated snapshots. That assumption no longer holds. Agentic AI introduces interaction, feedback loops, and compounding dynamics at a new scale. Methods designed to certify alignment at fixed moments are increasingly mismatched to systems that never stop changing.

To keep up, evaluation has to operate more like the systems it observes – dynamic, incentive-shaped, persistent. Systems under constant iteration stabilise through feedback, not assertion. If alignment evolves, evaluation must evolve with it.

Aurelius, Subnet 37, is built around that premise: decentralised feedback applied to alignment itself. Understanding why that approach makes sense means understanding the people behind it. Their combination of experience isn’t incidental; it’s exactly what the problem demands.

People shaped by the problems

Alignment is no longer just a machine learning problem. It’s an incentive problem, an evaluation problem, and a systems problem. Observing behaviour in dynamic environments requires instincts honed in designing systems where behaviour emerges under pressure.

That is the terrain founders Austin McCaffrey and Coleman Maher come from. Their backgrounds in decentralised infrastructure centre on environments where incentives shape behaviour continuously and stability is stress-tested through interaction. What matters isn’t what a system claims in isolation, but how it behaves over time and through constant change.

That worldview maps directly onto agentic alignment, which Aurelius treats as a systems phenomenon that must be surfaced through decentralised feedback and sustained interaction.

Making that viable, however, requires three pillars of expertise: incentive design to surface behaviour, evaluation discipline to separate signal from performance, and ethical reasoning to interpret decisions under pressure. Together, they form the operating structure that allows Aurelius to observe alignment as a living system rather than a static claim.

Pillar I: Incentivised to incentivise

Misalignment often emerges when incentives pull behaviour in directions designers didn’t anticipate. Once agents interact, behaviour becomes a function of coordination, not isolated outputs.

This is the logic Coleman and Austin bring from decentralised systems, where stability emerges through interaction and feedback. Aurelius applies that logic directly: agents are evaluated inside decentralised feedback loops where behaviour is stress-tested as incentives evolve.

The challenging task of designing incentives is led by core members of the team, including Austin, Friedrich (CTO), and expert advisor Steffen Cruz (Macrocosmos). Steffen’s work across Opentensor and Bittensor subnets reflects deep familiarity with adversarial dynamics as a proving ground for resilient behaviour. Friedrich’s distributed systems background adds architectural discipline, ensuring incentive-driven evaluation remains coherent at scale.

Decentralised systems offer a unique advantage here: disagreement produces signal. When multiple evaluators operate under competing incentives, behavioural inconsistencies surface naturally. Rather than suppressing variance, Aurelius uses it as an instrument, turning decentralised interaction into a continuous test of structural coherence.
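To make the idea of disagreement as signal concrete, here is a minimal sketch, not a description of how Aurelius actually scores anything. It assumes each response receives a numeric score between 0 and 1 from several independent evaluators; the function name `disagreement_report`, the score scale, and the `threshold` value are all hypothetical choices made for illustration.

```python
from statistics import mean, pstdev


def disagreement_report(scores_by_item, threshold=0.2):
    """Summarise evaluator scores per item and flag high disagreement.

    scores_by_item: dict mapping an item id to a list of evaluator scores
                    (assumed to lie in [0, 1]; hypothetical scale).
    threshold:      spread above which an item is flagged (illustrative value).
    """
    report = {}
    for item_id, scores in scores_by_item.items():
        # Population standard deviation measures how far evaluators diverge.
        spread = pstdev(scores)
        report[item_id] = {
            "mean": mean(scores),
            "spread": spread,
            # A wide spread is treated as something to inspect, not as noise.
            "flagged": spread > threshold,
        }
    return report


if __name__ == "__main__":
    example = {
        "response_a": [0.9, 0.9, 0.9],  # evaluators agree
        "response_b": [0.1, 0.9, 0.5],  # evaluators diverge sharply
    }
    for item_id, summary in disagreement_report(example).items():
        print(item_id, summary)
```

The design choice worth noting is that the spread is reported alongside the mean rather than averaged away: an item with a respectable mean but a wide spread is exactly the kind of behavioural inconsistency the paragraph above describes.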

Getting incentives right isn’t a one-time calibration – it’s an ongoing evolutionary process. But it’s the first step, and it sits at the centre of Aurelius’ architecture, because alignment can only be surfaced reliably when the system rewards the behaviours it’s designed to observe.

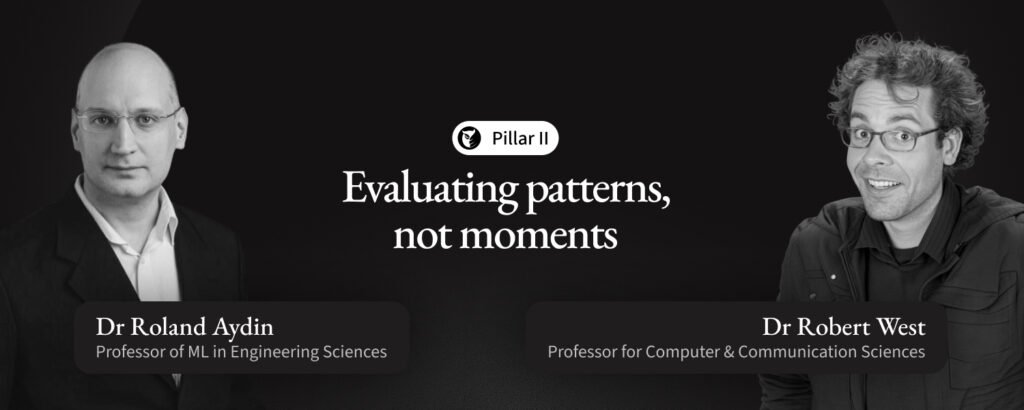

Pillar II: Evaluating patterns, not moments

If incentives reveal behaviour, evaluation determines whether you’re actually seeing it. Alignment failures surface as drift and emergent patterns over time. A snapshot evaluation reveals only a single cross-section of behaviour, whereas sustained evaluation separates surface compliance from behavioural continuity.

This discipline mirrors the founders’ systems instincts: behaviour must remain coherent as incentives evolve. Aurelius operationalises that mindset through deterministic scoring, reasoning-focused frameworks, and longitudinal interaction as the primary unit of analysis.
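The difference between a snapshot and a longitudinal view can be sketched in a few lines. This is an illustrative example under stated assumptions, not Aurelius’ scoring method: it assumes a chronological list of alignment scores for one agent, and the function name `first_drift_index`, the window size, and the tolerance are hypothetical.

```python
from statistics import mean


def first_drift_index(scores, window=3, tolerance=0.1):
    """Return the index at which a rolling mean first drifts from baseline.

    scores:    chronological list of scores for one agent (hypothetical data).
    window:    number of observations per rolling window (illustrative).
    tolerance: allowed absolute deviation from the baseline mean (illustrative).

    The baseline is the mean of the first `window` scores. The result is the
    index of the last observation in the first window whose mean deviates
    from the baseline by more than `tolerance`, or None if none does.
    """
    if len(scores) < window:
        return None
    baseline = mean(scores[:window])
    for start in range(1, len(scores) - window + 1):
        rolling = mean(scores[start:start + window])
        if abs(rolling - baseline) > tolerance:
            return start + window - 1
    return None


if __name__ == "__main__":
    history = [0.9, 0.9, 0.9, 0.85, 0.7, 0.6, 0.5]
    print("drift detected at index:", first_drift_index(history))
```

The function is deterministic: the same history always yields the same result. Any single early score in `history` would pass a snapshot check, yet the sequence as a whole shows a steady decline that only becomes visible when scores are compared over time.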

The behaviours surfaced through decentralised testing are captured as structured alignment data – turning observed alignment states into verifiable training signals that can inform safer system development.

The importance of getting evaluation right is why Aurelius draws on research advisors like Dr Robert West and Dr Roland Aydin. Their work in AI systems and machine learning architecture centres on separating durable behavioural signal from surface outputs – precisely the challenge alignment now presents. AE Studio’s alignment research reinforces the same instinct: behaviour must be observed deeply and over time if it is to be understood at all.

This is a demanding problem, requiring the separation of signal from performance in systems in constant motion. Yet that’s precisely the complex terrain this team is built to navigate. Aurelius meets that challenge head-on, turning evaluation into infrastructure capable of evolving alongside the systems it observes.

Pillar III: Ethics under pressure

Alignment isn’t just behavioural – it’s interpretive. In dynamic systems, ethics reveals how decisions persist when incentives collide. That’s why ethics and moral frameworks are invaluable analytical lenses for observing decision formation under pressure, and why the founders deliberately embedded ethical reasoning into Aurelius’ architecture.

For this, Aurelius draws on advisors Jack Hoban and Ryón Nixon. Hoban’s Ethical Warrior framework treats decision-making as structured reasoning under pressure – precisely the landscape dynamic AI systems now inhabit. Nixon’s legal and governance background reinforces the same principle: alignment operates within real-world constraints that shape behaviour, not abstract ideals.

Ethical interpretation at this level is inherently complex, requiring fluency in competing values and structural pressures. Aurelius treats it as a structural component of alignment observation, ensuring behaviour is not only surfaced and measured, but meaningfully understood as systems evolve.

Convergence, not invention

Taken together, these pillars describe convergence. Incentives surface behaviour. Evaluation reveals patterns. Ethics interprets decisions. A model’s alignment priors become observable only when these layers operate together.

The challenge Aurelius faces is only intensifying. AI systems and agentic environments are evolving faster, interacting more deeply, and generating more inscrutable behaviours, with growing consequences – financial, infrastructural, and social. Meeting that reality requires an architecture capable of evolving alongside the behaviour it observes, and of tracking instability before it becomes impact.

The people building Aurelius arrived from different directions but converged on the same conclusion: alignment is an emergent signal that must be observed honestly, tracked over time and addressed persistently.

A decentralised evaluation subnet is not a speculative idea inside that worldview. It is the architecture implied by the problem. Aurelius is simply where those lines met.
