Artificial intelligence has become the defining technology of our time, yet most people remain shut out from contributing to its creation. Training frontier models typically requires massive data centers, expensive hardware, and tightly controlled environments. Macrocosmos, led by CEO (Chief Executive Officer) Will Squires and CTO (Chief Technology Officer) Steffen Cruz, wants to change that.

At a hybrid event, held in person in London and streamed online, the team unveiled Training at Home, a simple desktop app that lets anyone with a MacBook contribute compute power to the training of large language models and earn rewards for doing so. The launch was their first public demonstration of a system that aims to democratize access to AI development at a global scale.

Why AI Training Needs a Rethink

Will opened the session by emphasizing that AI training has become so compute-intensive that its cost rivals the largest industrial build-outs in history. Forecasts point to trillions of dollars in spending this decade, split between chips and physical infrastructure.

Traditional training relies on:

a. Huge, centralized GPU (Graphics Processing Unit) clusters

b. Many machines operating in lockstep, like a single giant organism

c. Enormous capital expenditure

d. High barriers to entry for researchers, developers, and everyday people

The result is a closed ecosystem where only a few institutions can meaningfully participate in building the next generation of models.

Macrocosmos believes this model is unsustainable. Their question became: what happens if we spread the work across the world’s unused compute instead of locking everything inside data centers?

The Vision Behind Training at Home

Macrocosmos has spent two years exploring distributed training on Bittensor, gradually pushing the boundaries of what community-powered compute can accomplish.

Their belief is straightforward:

a. Millions of people have unused compute sitting idle.

b. Modern machines, especially MacBooks, provide more power than most realize.

c. The long tail of global compute resources is enormous.

d. If coordinated properly, this network can rival centralized systems.

Training at Home is the first large-scale step toward that vision.

What Training at Home Actually Offers

Training at Home is a small desktop application that turns an ordinary MacBook into a node that contributes to the training of a large AI model. You open the app, click a single button, and your machine joins a global cluster.

1. For users, Training at Home, built on IOTA (Bittensor Subnet 09), offers:

a. Passive Income: Earn Subnet 09 ‘$ALPHA’ tokens for contributing compute.

b. No Expertise Required: The system handles all the technical work automatically.

c. Flexible Participation: Users can switch it on whenever they like and off whenever they need their laptop.

d. A Shared Ownership Model: Contributors receive a share of the value created by the model they help train.

2. For Macrocosmos, the app offers:

a. Access to a massive, global pool of distributed compute

b. Lower training costs

c. A more resilient system not limited by a single data center

d. A pathway to training frontier-scale models in the future

The app is intentionally simple, hiding complex coordination behind an accessible interface. Users can also track how much they have earned and how much they have contributed to the overall training run.

Why Distributed Training Works Now

Distributed AI training is not a new idea. Many teams have attempted it over the last decade, but real-world versions were slow, unstable, or too inefficient.

Macrocosmos identified four breakthroughs that now make it viable:

1. A Global Network of Contributors: Millions of potential nodes decentralize the load, similar to how Bitcoin aggregated the largest compute network on earth by rewarding participation.

2. A System that Tolerates Real-World Conditions: People close their laptops, lose connection, and move between networks. Training at Home is designed to handle unreliable nodes instead of breaking when a single device drops out.

3. A Way to Organize Compute at Scale: IOTA coordinates thousands of devices so they can work together on a single model, rather than duplicating work and wasting energy.

4. A Secure and Trustless Validation Layer: To ensure contributors don’t cheat, the system checks that every device is running the correct software, verifies that the submitted work is valid, and pays participants automatically based on their actual contribution.

This makes the process both open and reliable.
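To make points 2 and 4 more concrete, here is a toy sketch of a single training round. It is not IOTA’s actual protocol, and it does not show how IOTA splits a model across devices (point 3); it assumes a simplified data-parallel setup in which each node submits a gradient vector, a count of samples processed, and a reported client build identifier. All names (`Submission`, `run_round`, `EXPECTED_HASH`) are hypothetical. Nodes that dropped out mid-round are skipped instead of stalling the round, submissions that fail a basic check are rejected, and the reward pool is split in proportion to validated work.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical identifier for the approved client build.
EXPECTED_HASH = "client-v1"


@dataclass
class Submission:
    node_id: str
    gradient: list[float]  # hypothetical: flattened gradient from this node
    samples: int           # hypothetical: training samples processed this round
    software_hash: str     # hypothetical: client build the node reports running


def is_valid(sub: Submission, dim: int) -> bool:
    """Toy validity check: expected software, expected shape, positive work."""
    return (
        sub.software_hash == EXPECTED_HASH
        and len(sub.gradient) == dim
        and sub.samples > 0
    )


def run_round(submissions: dict[str, Optional[Submission]], dim: int, reward_pool: float):
    """Aggregate one round, skipping dropped nodes (None) and invalid work,
    then split the reward pool in proportion to validated samples."""
    accepted = [s for s in submissions.values() if s is not None and is_valid(s, dim)]
    if not accepted:
        return [0.0] * dim, {}

    total = sum(s.samples for s in accepted)

    # Sample-weighted average of the accepted gradients.
    avg = [0.0] * dim
    for s in accepted:
        weight = s.samples / total
        for i, g in enumerate(s.gradient):
            avg[i] += weight * g

    # Each accepted node is paid its share of the validated samples.
    rewards = {s.node_id: reward_pool * s.samples / total for s in accepted}
    return avg, rewards


subs = {
    "a": Submission("a", [1.0, 2.0], 100, "client-v1"),
    "b": None,                                          # laptop closed mid-round
    "c": Submission("c", [3.0, 4.0], 300, "client-v1"),
    "d": Submission("d", [9.0, 9.0], 50, "tampered"),   # fails validation
}
avg, rewards = run_round(subs, dim=2, reward_pool=10.0)
print(avg)      # [2.5, 3.5]
print(rewards)  # {'a': 2.5, 'c': 7.5}
```

The dropped node and the tampered node simply receive nothing, and the round still completes. A self-reported build hash is trivially spoofable, so a real trustless validator would need much stronger checks, for example re-computing a sample of the submitted work.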

Who Benefits From This Approach

Several groups stand to benefit from this infrastructure:

a. Everyday Users: Anyone with a MacBook can now earn rewards, contribute to model training, and be part of a global AI initiative without needing specialized hardware.

b. AI Researchers: The system creates a large pool of compute that is flexible, affordable, and accessible, opening opportunities that would otherwise require expensive cloud budgets.

c. The Bittensor Ecosystem: According to Macrocosmos, Training at Home is the first Bittensor subnet that allows non-technical users to participate directly in mining through a simple interface.

d. The Broader AI Community: As compute costs rise, decentralized infrastructure becomes an essential alternative to traditional cloud providers.

Why This Launch Matters

This launch is more than a new product. It is a proof of concept that:

a. Large-scale AI training can be democratized

b. Distributed systems can challenge centralized GPU farms

c. Consumer hardware can meaningfully contribute to frontier research

d. Participation in building AI can shift from a small elite to a global community

According to the team, several senior engineers from Google and OpenAI have already seen the system and were struck by how quickly it has progressed. What began as an experiment in May had become a functioning mainnet app by year end.

What’s Next for Training at Home

From here, Macrocosmos aims to:

a. Expand support beyond MacBooks to additional hardware

b. Increase scale from hundreds of nodes to tens of thousands

c. Improve performance until it rivals or surpasses traditional training environments

d. Develop a marketplace for compute that adjusts pricing based on supply and demand

e. Explore long-form inference and deep research workloads

f. Build toward training trillion-parameter models using a global distributed cluster

This is still early, but the trajectory is clear: decentralized training is no longer theoretical.

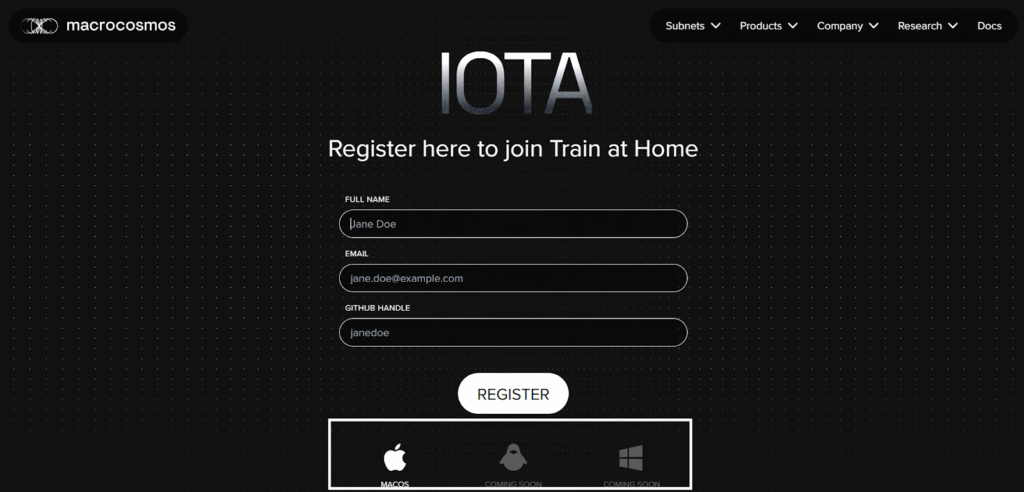

How to Get Involved

Macrocosmos is opening access gradually as they scale capacity.

Here is how anyone can participate:

a. Join the waiting list for Training at Home

b. Prepare a MacBook to contribute when approved

c. Follow updates from Macrocosmos and IOTA (Subnet 09)

d. Share feedback once you begin running the client

e. Invite others who want to participate in decentralized training

The long-term vision is to empower millions of people to build the next generation of AI together.
