The Bittensor ($TAO) ecosystem has evolved into a marketplace of subnets, where each subnet competes for daily emissions based on actual demand, usage, TAO inflows (Taoflow), and real-world utility.

After the 2025 halving cut emissions from 7,200 TAO/day to 3,600 TAO/day, the subnets earning the biggest share aren’t there by luck; they’re there because people are actively betting on them.

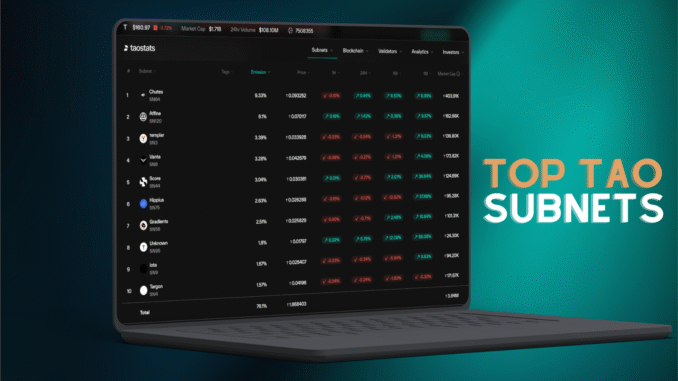

Below are the top 7 subnets by emissions (per taostats.io) and why they’re dominating.

1) Chutes (SN64): The Serverless GPU Engine of Bittensor

If there’s one subnet that feels like “Bittensor’s AWS,” it’s Chutes.

Chutes offers serverless GPU compute that lets developers run AI workloads (LLMs, image generation, video inference, etc.) without touching infrastructure. You plug into an API and ship.

What makes it stand out:

- Reportedly ~85% cheaper than centralized providers

- Fast startup times (~200ms)

- Supported by 8,000+ GPU nodes globally

- Handles millions of requests daily

- Runs a range of open-weight models, such as DeepSeek

- Uses revenue for auto-staking + alpha token buybacks

Its emission share (around 9.33%) makes sense: it’s one of the few subnets with clear revenue + heavy real usage.

2) Affine (SN120): The Infrastructure Glue for Interoperable AI

Affine is less flashy, but arguably more important. It functions like an ecosystem backbone, enabling coordination between subnets, better evaluation pipelines, and scalable deployment workflows. As more subnets launch, interoperability becomes the difference between “a bunch of isolated projects” and “a real AI network.”

Why it’s high-emission (~6.1%):

- It’s positioned as a reinforcement learning + benchmarking bridge

- It supports cross-subnet model evaluation

- It reduces ecosystem fragmentation (huge long-term advantage)

Also worth noting: Const, Bittensor’s co-founder, is one of the core contributors to the subnet.

3) Templar (SN3): Decentralized Training at Serious Scale

Templar is pushing one of the hardest problems in AI: training large models in a decentralized way.

It’s focused on pre-training and fine-tuning at massive scale, including models with 70B+ parameters.

What keeps Templar relevant:

- It’s considered one of the largest active decentralized training networks

- It’s linked into a wider training stack with subnets like Basilica (compute) and Grail (RL post-training)

- It has gained major attention in the ecosystem due to its ambition and results

Templar is about proving decentralized training is possible.

Current emission share: ~3.39%.

4) Vanta (SN8): Bittensor’s Decentralized Prop Trading Firm

Vanta is basically the subnet that turned Bittensor into a performance-driven trading competition. It crowdsources risk-adjusted strategies from miners and applies them to markets like:

- crypto (BTC/ETH)

- forex

- indices

Why it stays near the top (~3.28% emissions):

- outputs are directly monetizable

- miners are competing on measurable performance

- financial incentives naturally attract participation

- it offers a decentralized alternative to the traditional prop trading firm

Whether you like the “AI trading” narrative or not, Vanta is one of the most commercially obvious subnets in the entire network.
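To make "risk-adjusted" concrete, here is a minimal sketch using the Sharpe ratio, one common risk-adjusted metric. Whether Vanta scores miners with this exact formula is an assumption; the function name, the sample returns, and the `periods_per_year` default are all hypothetical and chosen only for illustration.

```python
import math
import statistics


def sharpe_ratio(returns, risk_free_rate=0.0, periods_per_year=365):
    """Annualized Sharpe ratio from a list of per-period returns.

    Illustrative only: not taken from Vanta's scoring code.
    """
    excess = [r - risk_free_rate for r in returns]
    stdev = statistics.stdev(excess)
    if stdev == 0:
        # No variability at all: treat the ratio as undefined and return 0.
        return 0.0
    return statistics.mean(excess) / stdev * math.sqrt(periods_per_year)


# Hypothetical daily returns for two miners with the same average return.
steady = [0.010, 0.012, 0.008, 0.011, 0.009]
volatile = [0.08, -0.06, 0.09, -0.07, 0.01]

if __name__ == "__main__":
    print(f"steady:   {sharpe_ratio(steady):.2f}")
    print(f"volatile: {sharpe_ratio(volatile):.2f}")
```

Both hypothetical miners average the same daily return, but the steady one scores far higher because its returns vary much less. That is the sense in which a risk-adjusted ranking rewards consistency over raw gains.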

5) Score (SN44): Sports Analytics Meets Computer Vision

Score is building a computer vision subnet with football as one of its main focuses, and that’s a massive market.

The goal is to extract match-level intelligence from videos (player movement, events, patterns, stats) and turn that into usable datasets for prediction, betting, and AI training.

Why it matters:

- football is a $600B+ global industry

- vision tasks can scale massively with decentralized miners

- claimed accuracy of up to 100% in tests (around 70% on average)

It’s currently earning around 3.04% emissions, and as it locks in real partnerships, this could become one of the most valuable niche subnets in Bittensor.

6) Hippius (SN75): Decentralized Storage for the Entire Network

Every AI ecosystem eventually hits the same wall: storage.

Hippius is positioning itself as Bittensor’s decentralized storage layer. It’s essentially the “hard drive subnet” for files, models, datasets, and infrastructure components.

Key highlights:

- decentralized cloud storage with enterprise-grade reliability

- powered by the Arion Protocol

- positioned as cheaper and faster than centralized alternatives (like S3)

- integrates into other subnets (including infra-heavy ones like Affine)

Emission share: ~2.9%.

If Bittensor ever reaches 1,000+ subnets, storage becomes non-negotiable, and Hippius becomes even harder to replace.

7) Gradients (SN56): Competitive AI Training for Everyone

Gradients is one of the most user-friendly subnets in Bittensor. It makes model training more accessible, cheaper, and competitive.

It supports multiple training workflows, including:

- supervised learning

- RLHF-style approaches

- distributed training competitions

Its edge:

- reportedly cuts training costs by ~70%

- open-source tournaments drive rapid improvement

- produced models like Gradients Instruct 8B

- strong market traction and token performance

Current emission share: ~2.51%.

Gradients is what happens when you take the “AI tournament” concept seriously and scale it into a real product.
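To put the percentages in perspective, the sketch below converts each quoted emission share into an approximate TAO/day figure. It makes a simplifying assumption that each share applies directly to the full 3,600 TAO/day mentioned earlier; the actual split on-chain may differ, and the function and variable names are illustrative only.

```python
# Daily TAO emissions after the halving, as stated in this article.
DAILY_EMISSIONS_TAO = 3_600

# Approximate emission shares (percent) quoted in this article.
EMISSION_SHARE_PCT = {
    "Chutes (SN64)": 9.33,
    "Affine (SN120)": 6.1,
    "Templar (SN3)": 3.39,
    "Vanta (SN8)": 3.28,
    "Score (SN44)": 3.04,
    "Hippius (SN75)": 2.9,
    "Gradients (SN56)": 2.51,
}


def daily_tao(share_pct, total=DAILY_EMISSIONS_TAO):
    """Convert a percentage share into TAO per day.

    Assumption: the share applies directly to the full daily emission.
    """
    return total * share_pct / 100


if __name__ == "__main__":
    for name, pct in EMISSION_SHARE_PCT.items():
        print(f"{name:<18} {pct:>5.2f}%  ~{daily_tao(pct):.1f} TAO/day")
    combined = sum(EMISSION_SHARE_PCT.values())
    print(f"Combined: {combined:.2f}%  ~{daily_tao(combined):.1f} TAO/day")
```

Under these assumptions, Chutes works out to roughly 336 TAO/day, and the seven subnets together account for about 30.55% of emissions, or about 1,100 TAO/day.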
