Templar, Subnet 3 on Bittensor, has officially launched the first-ever decentralized training run of a 70-billion-parameter AI model, a milestone that redefines what is possible outside the walls of Big Tech.

Templar is a decentralized AI training system that connects computers around the world so they can train models collaboratively. It rewards contributors for supplying computing power and high-quality data, and it checks their work to keep training trustworthy and accurate.

Built on a blockchain, Templar uses decentralization to offer an open, secure, and cost-effective way to build AI without relying on central servers or Big Tech companies. The approach aims to make AI development scalable, community-driven, and privacy-focused.

A Shift in the AI Landscape

Traditionally, training models of this size has been the exclusive domain of companies with near-limitless resources, such as OpenAI, Meta, and Google, which spend billions on specialized data centers. Templar's launch challenges that assumption: distributed miners, connected only through the internet, can coordinate at frontier scale.

If it succeeds, the run offers the first credible alternative to centralized AI development, showing that advanced models can be trained without monopolized infrastructure.

Key Innovations Powering the Run

Templar’s breakthrough was enabled by several innovations designed specifically for decentralized environments:

a. SparseLoCo Optimizer: Reduces the communication needed between miners, easing the bandwidth bottleneck that limits traditional distributed training.

b. Performance-Based Incentives: Validators measure how much each miner improves the model. Miners are rewarded only when their contributions make the model better, creating economic alignment.

c. Gradient Compression: Using the Discrete Cosine Transform (DCT) and top-k selection, Templar cuts data transfer by orders of magnitude, allowing miners to coordinate effectively over ordinary internet connections.

d. Checkpointing: Frequent saves of training progress let the run recover from miner failures without losing work.
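The compression idea in item c can be illustrated with a minimal sketch. It assumes a flattened gradient split into fixed-size chunks (64 values here, an arbitrary choice), applies an orthonormal DCT-II to each chunk, and keeps only the k largest-magnitude coefficients plus their positions. The function names, chunk size, and k are hypothetical; Templar's production code may differ in chunking, quantization, and how it treats the discarded coefficients.

```python
import numpy as np


def dct_matrix(n: int) -> np.ndarray:
    """Orthonormal DCT-II matrix; row k holds the k-th cosine basis vector."""
    i = np.arange(n)
    mat = np.cos(np.pi * (2 * i[None, :] + 1) * i[:, None] / (2 * n))
    mat[0, :] *= np.sqrt(1.0 / n)
    mat[1:, :] *= np.sqrt(2.0 / n)
    return mat


def compress(grad: np.ndarray, chunk: int, k: int):
    """DCT each chunk, then keep the k largest-magnitude coefficients per chunk."""
    assert grad.size % chunk == 0, "pad the gradient to a multiple of chunk"
    basis = dct_matrix(chunk)
    coeffs = grad.reshape(-1, chunk) @ basis.T            # forward DCT per chunk
    idx = np.argsort(-np.abs(coeffs), axis=1)[:, :k]      # top-k positions
    vals = np.take_along_axis(coeffs, idx, axis=1)
    return idx.astype(np.int16), vals.astype(np.float32)  # what a miner would send


def decompress(idx, vals, chunk: int, shape):
    """Scatter the kept coefficients back and apply the inverse DCT."""
    basis = dct_matrix(chunk)
    coeffs = np.zeros((idx.shape[0], chunk))
    np.put_along_axis(coeffs, idx.astype(np.int64), vals.astype(np.float64), axis=1)
    return (coeffs @ basis).reshape(shape)  # orthonormal, so inverse = transpose


rng = np.random.default_rng(0)
grad = rng.standard_normal(4096)
idx, vals = compress(grad, chunk=64, k=8)
approx = decompress(idx, vals, chunk=64, shape=grad.shape)

sent_bytes = idx.nbytes + vals.nbytes        # 64 chunks x 8 x (2 + 4) bytes
full_bytes = grad.astype(np.float32).nbytes  # 4096 x 4 bytes
print(f"{full_bytes / sent_bytes:.1f}x smaller")
```

In this toy setting the payload shrinks only about 5x (3,072 bytes versus 16,384); larger reductions presumably depend on keeping a much smaller fraction of coefficients and quantizing them more aggressively, which this sketch leaves out.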

These systems allow a heterogeneous, global network to operate as if it were a high-end centralized cluster.
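The performance-based incentive in item b can be sketched as a validator that compares held-out loss before and after applying each miner's submitted update. The scoring rule below (loss improvement floored at zero, then normalized into weights) and every name in it are assumptions for illustration, with a toy linear-regression model standing in for the 70B network; Templar's actual validator logic may be considerably more involved.

```python
import numpy as np


def val_loss(w: np.ndarray, X: np.ndarray, y: np.ndarray) -> float:
    """Mean squared error on the validator's held-out batch."""
    return float(np.mean((X @ w - y) ** 2))


def score_updates(w, updates, X_val, y_val):
    """Hypothetical rule: reward = held-out loss improvement, floored at zero."""
    base = val_loss(w, X_val, y_val)
    raw = {uid: max(0.0, base - val_loss(w + d, X_val, y_val))
           for uid, d in updates.items()}
    total = sum(raw.values())
    # Normalize into weights that sum to 1 (all zero if nobody helped).
    return {uid: (s / total if total > 0 else 0.0) for uid, s in raw.items()}


rng = np.random.default_rng(1)
w_true = rng.standard_normal(8)          # target the shared model should learn
X_train = rng.standard_normal((256, 8))  # data an honest miner trains on
X_val = rng.standard_normal((256, 8))    # validator's held-out data
y_train, y_val = X_train @ w_true, X_val @ w_true
w = np.zeros(8)                          # current shared model

grad = 2 * X_train.T @ (X_train @ w - y_train) / len(y_train)
updates = {
    "honest": -0.1 * grad,                  # a genuine gradient-descent step
    "noise": 3.0 * rng.standard_normal(8),  # random junk
    "idle": np.zeros(8),                    # contributes nothing
}
weights = score_updates(w, updates, X_val, y_val)
print(weights)
```

Flooring at zero means a miner who submits noise or nothing earns no weight, which is the economic alignment the list describes: reward tracks measured improvement, not mere participation.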

Training at Enterprise Scale

The run spans 1.5 trillion tokens, split into 14 shards of 107 billion tokens each. Every shard requires 400 GB of storage, putting Templar's infrastructure in the same class as an enterprise-grade AI lab.
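The shard figures can be checked with a few lines of arithmetic. The bytes-per-token value and the guess that token IDs are stored as 4-byte integers are inferences made here for illustration, not details stated by Templar.

```python
shards = 14
tokens_per_shard = 107 * 10**9
shard_bytes = 400 * 10**9                         # "400 GB", read as decimal gigabytes

total_tokens = shards * tokens_per_shard          # 1,498,000,000,000, i.e. ~1.5 trillion
total_bytes = shards * shard_bytes                # 5,600,000,000,000 bytes = 5.6 TB
bytes_per_token = shard_bytes / tokens_per_shard  # ~3.74 bytes per token

# Assumption: if each token ID were stored as a 4-byte integer, one shard would be
# 107e9 * 4 bytes, which is about 399 GiB, close to the quoted 400 GB figure.
uint32_shard_gib = tokens_per_shard * 4 / 2**30

print(f"{total_tokens:,} tokens, {total_bytes / 1e12:.1f} TB, "
      f"{bytes_per_token:.2f} B/token, {uint32_shard_gib:.0f} GiB")
```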

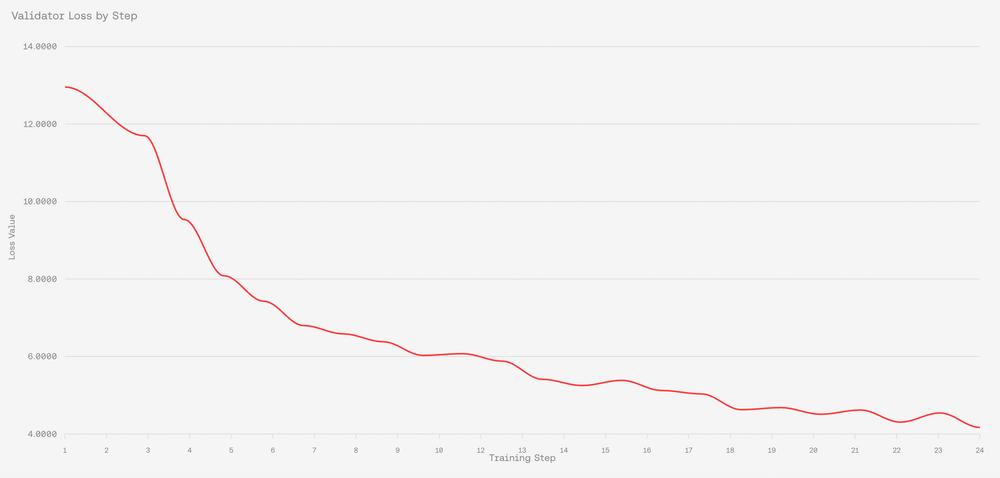

Results are already appearing. The validation metric, bits per byte (BpB, where lower is better), has dropped from 13 to 4.2 over the first 24 steps, an early sign of both technical progress and incentive alignment.
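Bits per byte normalizes a model's loss by the length of the raw text rather than by token count, so models with different tokenizers can be compared. Below is a minimal sketch of the standard definition, assuming per-token negative log-likelihoods in nats (the usual output of a cross-entropy loss); the function name is hypothetical, and Templar's validators may differ in evaluation data and batching.

```python
import math


def bits_per_byte(token_nll_nats, text: str) -> float:
    """Total negative log-likelihood converted to bits, divided by UTF-8 byte count."""
    total_bits = sum(token_nll_nats) / math.log(2)  # nats -> bits
    return total_bits / len(text.encode("utf-8"))


# Sanity check: a byte-level model that spreads probability uniformly over all
# 256 byte values pays log(256) nats = 8 bits for every byte.
text = "hello world"
uniform_nll = [math.log(256)] * len(text.encode("utf-8"))
print(bits_per_byte(uniform_nll, text))
```

Because the denominator counts bytes rather than tokens, a drop from 13 to 4.2 BpB means the model needs roughly a third as many bits to encode the same validation text.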

Why It Matters

Centralized AI development carries risks: single points of failure, political interference, and monopolistic control. Templar's early success demonstrates that:

a. Coordination without corporations is possible.

b. Frontier AI solutions can be built openly and permissionlessly.

c. Barriers to entry for advanced AI are falling.

The contrast is direct: where OpenAI and Meta rely on billion-dollar clusters, Templar relies on decentralized participation and incentives.

Looking Ahead

If the 70B model reaches competitive performance, it will mark a turning point, much as Bitcoin once showed that digital money could work without central banks.

Decentralized AI is no longer a concept. It’s here, and it is scaling.

Resources

Check out Templar via the official sources below:

Website: https://www.tplr.ai

X (formerly Twitter): https://x.com/tplr_ai

GitHub: https://github.com/tplr-ai/templar

Discord: https://discord.gg/N5xgygBJ9r

Docs: https://docs.tplr.ai