For a long time, “open-source AI” has meant something very specific. The weights are public, but everything that matters happens elsewhere. Training decisions, data pipelines, and refinement processes remain locked inside centralized teams and private infrastructure.

Covenant72B challenges that model. Through a coordinated but fully decentralized collaboration between Templar (Subnet 3) and Gradients (Subnet 56), the Bittensor ecosystem has produced a Large-Language Model trained across multiple subnets, without central control, and with each stage of the pipeline handled by a specialist network.

With Gradients on board, its post-training work has turned a raw decentralized model into a system designed for real use.

From Raw Model to Usable Intelligence

Covenant72B began its life on Templar (Subnet 3), where permissionless miners handled pre-training at scale. That phase produced a 72 billion parameter foundation model, trained entirely on decentralized infrastructure.

From there, Gradients took over.

Rather than retraining from scratch, Gradients ingested published checkpoints and applied its post-training stack, focusing on alignment, conversational behavior, and practical deployment.

This handoff is what makes the process notable. Two independent teams, operating separate subnets, coordinated purely through open interfaces. No private agreements, no centralized oversight, but pure composability.

What Gradients Changed

Post training was not incremental, it was transformational. After Gradients’ pipeline was applied, the model demonstrated:

a. A sharp reduction in evaluation loss, falling from 3.61 to 0.766

b. Instruction following and multi-turn conversational ability

c. Early reasoning behaviors emerging from aligned training

d. A structured chat format suitable for deployment

e. A context window expanded from 2k to 32k tokens via yarn extension

These improvements shifted Covenant72B from a research artifact into a usable conversational system, showing what decentralized post training can achieve when done intentionally.

Why Post Training is the Real Bottleneck

Much of the AI conversation focuses on pre-training scale, more data, bigger models, and more compute.

But in practice, post training is where models become useful. Instruction tuning, conversational alignment, reasoning behavior, and context handling determine whether a model can function outside a lab environment. This is where Gradients’ role becomes critical.

By providing a reusable, automated post training pipeline, Gradients lowers the barrier for decentralized models to reach real world usability. More importantly, it allows that process to remain open, inspectable, and composable.

This is the difference between open weights and open systems.

Where the Model Stands Today

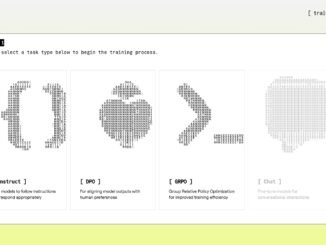

Covenant72B is not presented as a finished product, pre-training continues on Templar. Further alignment techniques, including DPO (Direct Preference Optimization), are planned. Performance will improve iteratively.

What has already been proven is the architecture:

a. Large scale pre training can happen on permissionless infrastructure,

b. Specialized subnets can refine models without central coordination,

c. Post training pipelines can be reused across models, and

d. Open collaboration produces compounding improvements.

The system works, and it works in the open.

A Template for the Ecosystem

This collaboration sets a pattern other subnets can follow.

Build one piece of the training stack: Publish it openly, allow others to compose, and let value accumulate through specialization rather than duplication.

Gradients is establishing itself as a foundational post training layer within that system. As additional subnets contribute reinforcement learning, domain tuning, or evaluation frameworks, decentralized training becomes not just viable, but competitive.

Looking Ahead

The long-term ambition is to create a frontier class language model trained entirely through decentralized infrastructure, with transparency at every layer.

Covenant72B is not that end state, it is a concrete step toward it. By proving that post training can be decentralized, automated, and effective, Gradients has helped move open AI beyond slogans and into execution.

Decentralized AI does not arrive all at once, it compounds through working systems and Covenant72B shows that compounding has already begun.

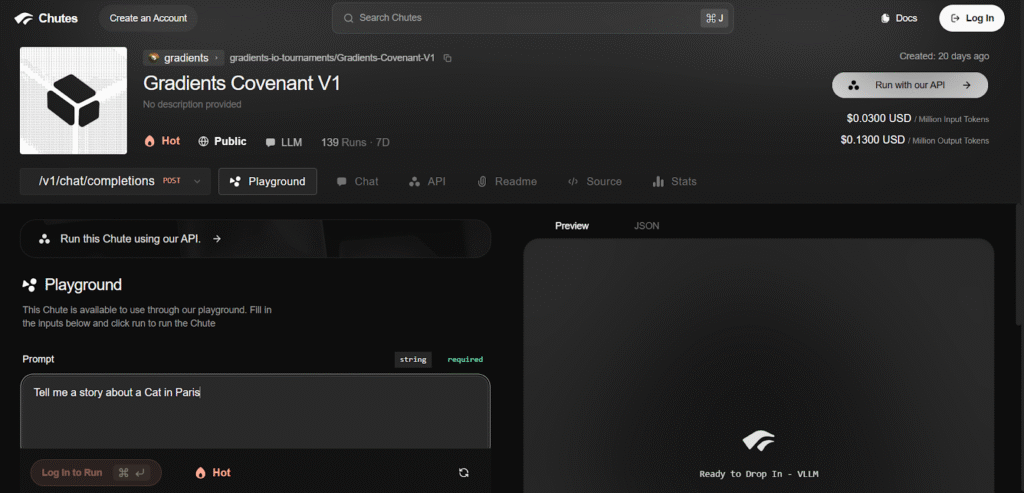

Access the Model

The Gradients Covenant v1 pre-trained model is publicly accessible on Chutes (Subnet 64).

Be the first to comment