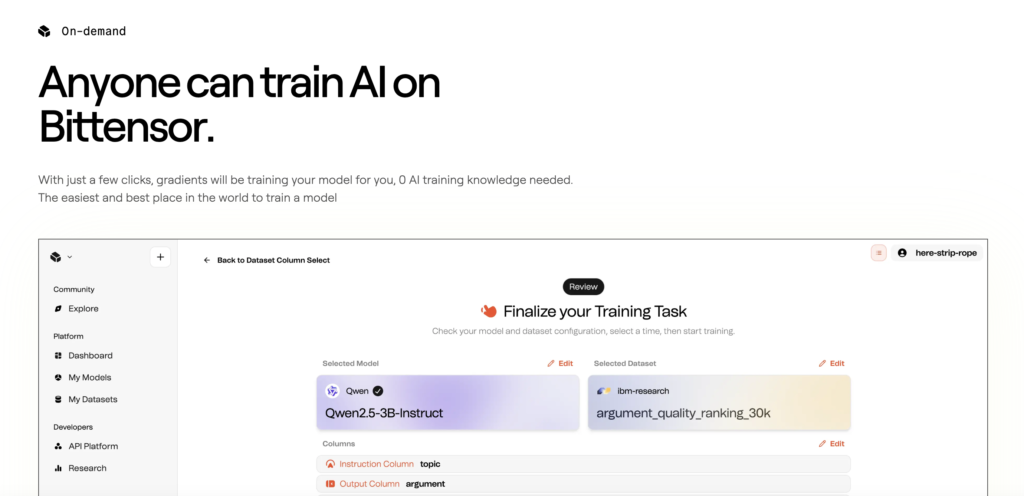

The barrier to training and fine-tuning machine learning models has always been high. You’d typically need to understand Python, wrangle datasets, and tinker with complex training scripts just to get a model to do what you want. But with Gradients (Bittensor subnet 56), that story is changing.

Gradients now offers three types of text-model training and fine-tuning, all on a no-code, zero-click platform. With just a few selections, anyone can train or align a model, which opens machine learning up to a much wider audience.

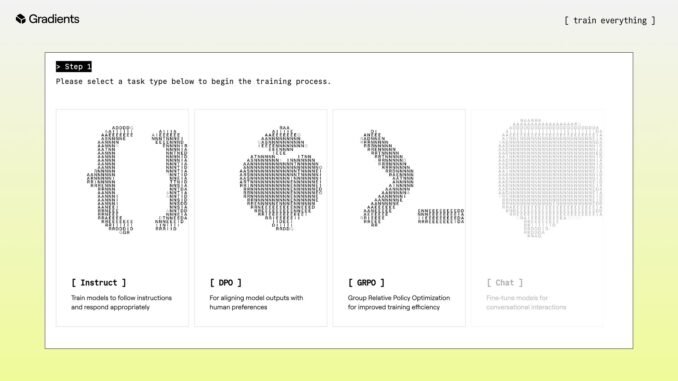

Here’s a quick breakdown of the options and when to use them:

1. Instruct Training

Train models to follow instructions and respond appropriately.

- When to use: If you need a model for clear, task-oriented actions, such as summarization, Q&A, translation, or coding assistance, this is the right choice. Instruct training is supervised fine-tuning on example prompts paired with the responses you want, so all it needs is input/output pairs; no preference comparisons or reward signals are required.
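To make "follow instructions" concrete, here is a minimal Python sketch of how one dataset row might become an instruction-tuning example. It assumes a hypothetical prompt template (`PROMPT_TEMPLATE`), a toy whitespace tokenizer in place of a real subword tokenizer, and the common practice of computing the loss only on response tokens. It illustrates the general technique, not Gradients' internal pipeline.

```python
from typing import Dict, List, Tuple

# Hypothetical prompt template; real setups use the model's own chat format.
PROMPT_TEMPLATE = "### Instruction:\n{instruction}\n\n### Response:\n"


def build_sft_example(
    row: Dict[str, str], instruction_col: str, response_col: str
) -> Tuple[List[str], List[bool]]:
    """Turn one dataset row into (tokens, loss_mask) for instruction tuning."""
    prompt = PROMPT_TEMPLATE.format(instruction=row[instruction_col])
    # Toy whitespace "tokenizer" standing in for a real subword tokenizer.
    prompt_tokens = prompt.split()
    response_tokens = row[response_col].split()
    tokens = prompt_tokens + response_tokens
    # Only response positions count toward the loss: the model reads the
    # instruction as context but is graded on the answer it should produce.
    loss_mask = [False] * len(prompt_tokens) + [True] * len(response_tokens)
    return tokens, loss_mask


row = {"question": "Translate 'bonjour' to English.", "answer": "Hello."}
tokens, mask = build_sft_example(row, "question", "answer")
print(len(tokens), sum(mask))  # 9 1
```

The mask is the main design choice here: without it, the model would also be trained to reproduce the instructions themselves.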

2. DPO (Direct Preference Optimization)

Align model outputs with human preferences.

- When to use: If you have preference data (for example, a prompt paired with a preferred and a rejected answer) and want to improve tone, quality, or alignment with human judgment, DPO is the way to go. It learns directly from those comparisons, without training a separate reward model. Think of it as teaching the model to pick answers people like more.
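The idea behind DPO fits in a few lines. The sketch below implements the standard DPO loss for a single comparison, assuming you already have the summed log-probabilities of the chosen and rejected answers under the model being trained and under a frozen reference copy of the starting model. The function names and the default `beta = 0.1` are illustrative choices, not Gradients settings.

```python
import math


def softplus_neg(x: float) -> float:
    """Numerically stable log(1 + exp(-x)), which equals -log(sigmoid(x))."""
    if x >= 0:
        return math.log1p(math.exp(-x))
    return -x + math.log1p(math.exp(x))


def dpo_loss(
    policy_chosen_logp: float,
    policy_rejected_logp: float,
    ref_chosen_logp: float,
    ref_rejected_logp: float,
    beta: float = 0.1,  # assumed value; controls how far the model may drift from the reference
) -> float:
    """DPO loss for one (prompt, chosen, rejected) triple."""
    # How much more (or less) likely each answer became relative to the reference.
    chosen_shift = policy_chosen_logp - ref_chosen_logp
    rejected_shift = policy_rejected_logp - ref_rejected_logp
    # Reward the model for favoring the chosen answer more than the rejected one.
    return softplus_neg(beta * (chosen_shift - rejected_shift))


print(round(dpo_loss(-10.0, -12.0, -10.0, -12.0), 4))  # 0.6931
```

When the trained model still matches the reference, the loss is log 2 ≈ 0.693; it falls as the model raises the chosen answer's probability relative to the rejected one.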

3. GRPO (Group Relative Policy Optimization)

Boosts training efficiency and stability by scoring groups of outputs against each other.

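- When to use: GRPO fits tasks where the model's outputs can be scored or ranked, such as checking whether an answer is correct, or tasks like search results, recommendation systems, or creative sampling. For each prompt it samples a group of answers and rewards each one relative to the rest of the group, which makes learning more stable than judging single outputs in isolation and removes the need for a separate value (critic) model.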
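The "group" in GRPO refers to how each answer's advantage is computed. Here is a minimal Python sketch, assuming one scalar reward per sampled answer: each reward is compared to the mean of its group and scaled by the group's standard deviation (population standard deviation here; implementations differ on this detail). The function name and `eps` value are illustrative.

```python
import statistics
from typing import List


def group_relative_advantages(rewards: List[float], eps: float = 1e-6) -> List[float]:
    """Score each sampled answer relative to the other answers for the same prompt."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)  # population standard deviation of the group
    # eps avoids division by zero when every answer earned the same reward.
    return [(r - mean) / (std + eps) for r in rewards]


rewards = [1.0, 0.0, 0.0, 1.0]  # e.g. 1 = answer judged correct, 0 = incorrect
print([round(a, 3) for a in group_relative_advantages(rewards)])  # [1.0, -1.0, -1.0, 1.0]
```

Answers that beat their group's average get a positive advantage and are reinforced; weaker ones are discouraged. If every answer in a group earns the same reward, all advantages are zero, so that group contributes no learning signal.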

Zero-Click Training: How It Works

The beauty of Gradients is its simplicity. You don’t need to write a single line of code. Here’s how it works:

- Bring your dataset (or choose one from Gradients).

- Select your model.

- Assign your dataset columns.

- Pick your training option (Instruct, DPO, GRPO).

- Launch.

That’s it: your fine-tuning job kicks off in seconds.
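Assigning columns amounts to telling the trainer which dataset column plays which role. The sketch below is purely hypothetical: the role names in `REQUIRED_ROLES` and the `check_column_mapping` helper are illustrative assumptions, not Gradients' actual schema. It shows the kind of check a no-code form can run before launch. GRPO lists only a prompt role because, in this sketch, its rewards come from scoring the model's own samples.

```python
from typing import Dict, List

# Hypothetical roles per training option; NOT Gradients' real schema.
REQUIRED_ROLES: Dict[str, List[str]] = {
    "instruct": ["instruction", "output"],
    "dpo": ["prompt", "chosen", "rejected"],
    "grpo": ["prompt"],
}


def check_column_mapping(task: str, mapping: Dict[str, str], columns: List[str]) -> List[str]:
    """Return problems with a role -> column mapping; an empty list means it is usable."""
    problems: List[str] = []
    for role in REQUIRED_ROLES[task]:
        col = mapping.get(role)
        if col is None:
            problems.append(f"missing role '{role}'")
        elif col not in columns:
            problems.append(f"column '{col}' (for role '{role}') not in dataset")
    return problems


columns = ["question", "good_answer", "bad_answer"]
full = {"prompt": "question", "chosen": "good_answer", "rejected": "bad_answer"}
partial = {"prompt": "question", "chosen": "good_answer"}
print(check_column_mapping("dpo", full, columns))
print(check_column_mapping("dpo", partial, columns))
```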

Why This Matters

By replacing code with clicks, TAO and Gradients are removing technical expertise as the bottleneck to machine learning training. Developers, researchers, and even complete beginners can experiment with cutting-edge AI training methods without managing infrastructure or implementing algorithms themselves.

The result? A new wave of builders empowered to push the limits of AI, because now anyone can be an ML engineer.

👉 Start here: gradients.io
