Contributor: Crypto Pilote

Cheaters will be defeated!

Not all neurons in Bittensor play fair. Some attempt to game the system, but the network has been carefully designed to detect and penalize such behavior, ensuring the integrity of the protocol.

This mechanism is crucial because it maintains the reliability of both synthetic and organic data flowing through the network. Even when periods are quiet and no customer requests are active, the protocol has ways to keep the system robust and prevent exploitation.

In this article, we’ll break down how Bittensor safeguards against dishonest neurons, how it preserves network integrity, and what happens behind the scenes during idle periods.

I – Synthetic data / Organic data

Before diving into how miners and validators might cheat, it’s important to first understand how rewards are allocated at each epoch.

Have you ever wondered what happens in a subnet when there are no customers requesting work?

That’s an aspect we rarely discuss in Bittensor because it mainly concerns validators and miners, yet it’s full of valuable insights for anyone looking at subnets.

Think of it like a shop with no visitors. Do miners just sit and wait for customers to show up? Of course not. That would mean being paid for doing nothing, and Bittensor’s design is far too clever for that. Just like in a real shop, there’s always something to do to stay ready for when real customers arrive.

As mentioned, miners must provide work and validators must verify it. Every 360 blocks (≈ 1h12), everyone is scored. So what happens when nobody needs the service?
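The ≈ 1h12 figure follows directly from the block count. Here is a quick check, assuming Bittensor's usual target of about 12 seconds per block (real block times can drift slightly):

```python
# Assumption: ~12 s per block, Bittensor's usual target block time.
BLOCK_TIME_SECONDS = 12
EPOCH_BLOCKS = 360  # blocks between scoring rounds, as stated in the text


def epoch_minutes(blocks: int = EPOCH_BLOCKS, block_time: int = BLOCK_TIME_SECONDS) -> float:
    """Wall-clock length of one scoring period, in minutes."""
    return blocks * block_time / 60


minutes = epoch_minutes()
print(f"{minutes:.0f} min = {int(minutes // 60)}h{int(minutes % 60):02d}")  # 72 min = 1h12
print(f"{24 * 60 / minutes:.0f} scoring periods per day")  # 20 scoring periods per day
```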

The network uses synthetic requests (as opposed to organic requests from real customers).

When the subnet is quiet, miners train on invented requests generated by validators. That process brings several benefits:

- Continuous model improvement

Miners keep improving their models to achieve better results. They can tune their models to match the subnet's objectives and ensure repeatability, which helps secure a larger share of emissions and remain profitable.

- Scoring miners

Validators rank miners based on their outputs. They can adjust difficulty if needed to raise the quality of the service the subnet provides. This creates a virtuous cycle: top-performing subnets earn more rewards, attract more miners, and increase competition and overall quality.

- Fine-tuning the scoring system

The scoring method can evolve over time and be updated to fit the subnet owner's goals. Two common approaches are winner-take-all (where the top miner takes the whole reward, as on Ridges) and weighted rewards (where payouts decrease progressively from the top).

- Proofs of concept and benchmarks (to attract customers)

Once adjustments are made and the system performs well, those results can be published as proof of concept. Demonstrated benchmarks (e.g., from Ridges or Zeus) help convince customers, neurons, and potential collaborators to join the subnet, increasing its value and competitiveness.
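The two payout styles mentioned under scoring (winner-take-all and weighted rewards) can be contrasted in a few lines. This is a minimal sketch, not any subnet's actual code: the geometric `decay` factor in the weighted version is a hypothetical parameter, and real subnets use their own curves.

```python
def winner_take_all(scores: dict[str, float], emission: float) -> dict[str, float]:
    """The single highest-scoring miner receives the entire emission."""
    best = max(scores, key=scores.get)
    return {uid: (emission if uid == best else 0.0) for uid in scores}


def rank_weighted(scores: dict[str, float], emission: float, decay: float = 0.5) -> dict[str, float]:
    """Payouts shrink geometrically with rank: weight 1 for 1st, decay for 2nd, decay**2 for 3rd..."""
    ranked = sorted(scores, key=scores.get, reverse=True)
    weights = {uid: decay ** rank for rank, uid in enumerate(ranked)}
    total = sum(weights.values())
    return {uid: emission * w / total for uid, w in weights.items()}


scores = {"miner_a": 0.91, "miner_b": 0.88, "miner_c": 0.40}
print(winner_take_all(scores, 100.0))  # {'miner_a': 100.0, 'miner_b': 0.0, 'miner_c': 0.0}
print({uid: round(v, 2) for uid, v in rank_weighted(scores, 100.0).items()})
# {'miner_a': 57.14, 'miner_b': 28.57, 'miner_c': 14.29}
```

Note how miner_b, only 0.03 behind the leader, gets nothing under winner-take-all but still earns a meaningful share under the weighted curve. That is the trade-off between a fierce race for first place and keeping runners-up profitable.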

Sounds easy, right? Let’s now look at how this process can be exploited or gamed.

II – Validators / Miners: a cat-and-mouse game

The validator’s role is to issue tasks to miners and evaluate their performance.

The miners' objective is to meet the validator's expectations, but they're not doing it for free. To stay profitable, their underlying goal is to earn the maximum reward for the least possible effort.

Instead of striving to be the best, some miners take a different path: cheating.

But validators aren’t naive either. Here are some common techniques and how they’re countered.

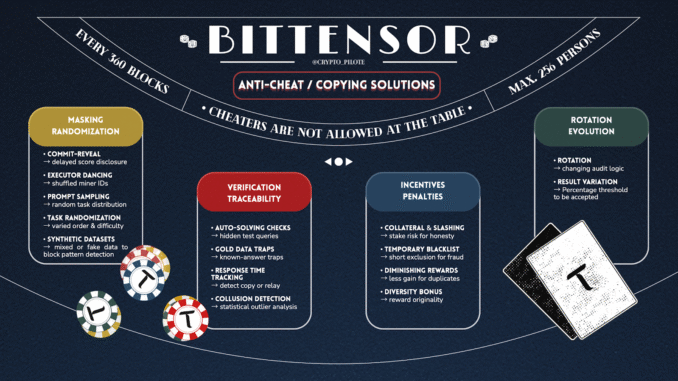

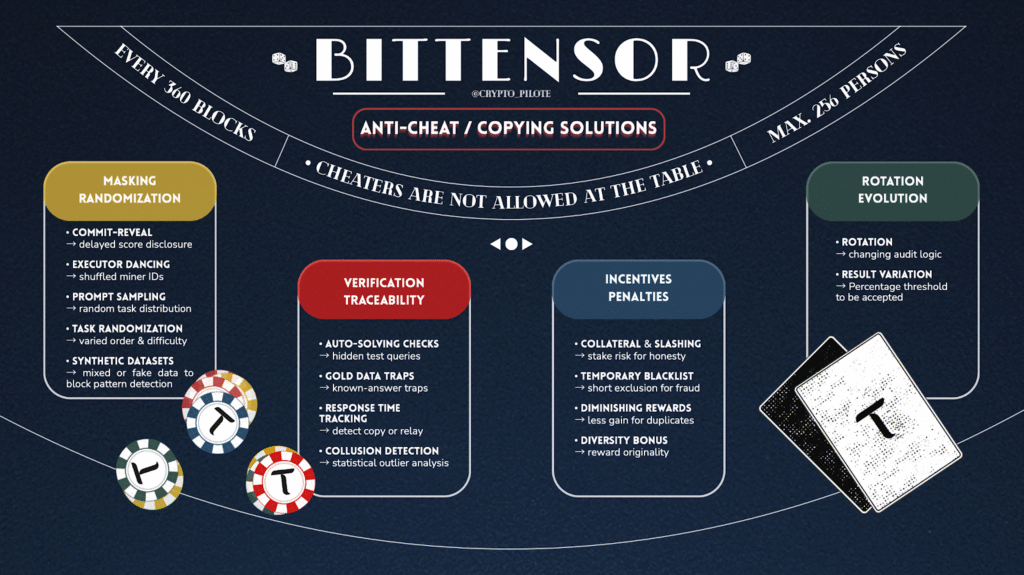

a) Masking and Randomization

Prevent malicious actors from identifying and copying high-performing behaviors.

Commit-Reveal:

Validators score miners, but the results (or “weights”) are only revealed in the next epoch.

→ This prevents validators from copying each other’s evaluations before the period ends.
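The idea behind commit-reveal can be shown with a plain hash commitment. During the epoch, a validator publishes only a hash of its weights plus a secret salt. Later, it reveals both so anyone can check they match. This is a generic sketch using Python's standard library and should not be read as Bittensor's actual on-chain implementation.

```python
import hashlib
import json
import secrets


def commit(weights: dict[int, float], salt: bytes) -> str:
    """Hash of the weights plus a secret salt; this is all other validators see during the epoch."""
    payload = json.dumps(weights, sort_keys=True).encode() + salt
    return hashlib.sha256(payload).hexdigest()


def verify_reveal(commitment: str, weights: dict[int, float], salt: bytes) -> bool:
    """After the reveal, anyone can recompute the hash and confirm the weights were not changed."""
    return commit(weights, salt) == commitment


salt = secrets.token_bytes(16)        # kept private until the reveal
weights = {0: 0.6, 1: 0.3, 2: 0.1}    # miner UID -> weight
commitment = commit(weights, salt)    # published during the epoch

print(verify_reveal(commitment, weights, salt))                   # True
print(verify_reveal(commitment, {0: 0.1, 1: 0.3, 2: 0.6}, salt))  # False
```

The salt matters. Without it, a copier could hash a handful of plausible weight vectors and check whether one matches the published commitment.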

Executor Dancing:

Miner identifiers are regularly shuffled.

→ This makes it harder to track which miners perform best, reducing the risk of copying or collusion.
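Here is one way to picture identifier shuffling. Each epoch, the validator maps every real miner ID to a fresh public alias derived from a secret seed. The function and the `exec-N` alias format below are hypothetical. They sketch the concept, not any subnet's actual scheme.

```python
import random


def shuffle_aliases(miner_ids: list[str], epoch: int, secret_seed: str) -> dict[str, str]:
    """Map each real miner ID to a public alias that changes every epoch."""
    rng = random.Random(f"{secret_seed}:{epoch}")  # same seed + epoch -> same mapping
    aliases = [f"exec-{i}" for i in range(len(miner_ids))]
    rng.shuffle(aliases)
    return dict(zip(miner_ids, aliases))


miners = ["miner_a", "miner_b", "miner_c", "miner_d"]
for epoch in (1, 2):
    print(epoch, shuffle_aliases(miners, epoch, secret_seed="validator-only-secret"))
```

Because the seed stays with the validator, an observer who sees `exec-2` ranked first in one epoch cannot tell which miner that alias will point to next time. Python's `random` is not a cryptographic generator, so a production scheme would more likely derive aliases from a keyed hash.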

Prompt Sampling (Aurelius):

Not all miners receive the same requests — each gets a random sample.

→ This prevents “cherry-picking,” meaning miners can’t just focus on the easiest or most profitable queries.
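Per-miner sampling is straightforward with Python's `random.sample`. The sketch below is minimal and assumes a shared prompt pool and a fixed number `k` of prompts per miner. Both are hypothetical, and Aurelius's actual sampling rules are not described in this article.

```python
import random


def sample_prompts(pool: list[str], miner_ids: list[str], k: int, seed: int) -> dict[str, list[str]]:
    """Give every miner its own random subset of k distinct prompts from the pool."""
    rng = random.Random(seed)
    return {uid: rng.sample(pool, k) for uid in miner_ids}


pool = [f"prompt-{i}" for i in range(100)]
batches = sample_prompts(pool, ["miner_a", "miner_b", "miner_c"], k=5, seed=42)
for uid, prompts in batches.items():
    print(uid, prompts)
```

No miner knows which prompts the others received, and every prompt is equally likely to show up, so there is no "easy subset" to specialize in.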

Task Randomization:

The order, content, or difficulty of requests may vary between miners.

→ This introduces “noise” and helps detect those submitting copied responses.
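Task randomization can be illustrated with parameterized tasks. Every miner gets the same kind of problem with different numbers, so an answer copied from a neighbor is simply wrong for the copier's own variant. The arithmetic task and the function names are hypothetical stand-ins for whatever a real subnet asks.

```python
import random


def make_variant(miner_id: str, epoch: int) -> tuple[str, int]:
    """Same task template, different parameters per miner and per epoch."""
    rng = random.Random(f"{epoch}:{miner_id}")
    a, b = rng.randint(100, 999), rng.randint(100, 999)
    return f"What is {a} * {b}?", a * b


def is_correct(miner_id: str, epoch: int, answer: int) -> bool:
    """The validator regenerates the miner's own variant and checks the answer against it."""
    _, expected = make_variant(miner_id, epoch)
    return answer == expected


prompt_a, answer_a = make_variant("miner_a", epoch=7)
print(prompt_a)
print(is_correct("miner_a", 7, answer_a))  # True: honest answer to its own variant
print(is_correct("miner_b", 7, answer_a))  # almost certainly False: answer copied from miner_a
```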

Synthetic / Mixed Datasets (Gradient):

Some datasets are mixed or partially synthetic to prevent miners from simply recognizing them.

→ This ensures miners actually solve the task rather than using memorized answers.
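Mixing can be as simple as blending real and synthetic items into one shuffled evaluation set, with the origin flag kept on the validator's side only. The ratio and the helper name below are hypothetical. This article does not specify how Gradient actually builds its datasets.

```python
import random


def build_eval_set(real: list[str], synthetic: list[str], synth_fraction: float,
                   size: int, seed: int) -> list[tuple[str, bool]]:
    """Return (item, is_synthetic) pairs; miners only ever see the item, never the flag."""
    rng = random.Random(seed)
    n_synth = round(size * synth_fraction)
    items = [(q, True) for q in rng.sample(synthetic, n_synth)]
    items += [(q, False) for q in rng.sample(real, size - n_synth)]
    rng.shuffle(items)  # synthetic items are not grouped at the start or end
    return items


real = [f"real-{i}" for i in range(50)]
synthetic = [f"synthetic-{i}" for i in range(50)]
eval_set = build_eval_set(real, synthetic, synth_fraction=0.3, size=20, seed=1)
miner_view = [item for item, _ in eval_set]  # what actually gets sent to miners
print(sum(flag for _, flag in eval_set), "synthetic items out of", len(eval_set))  # 6 synthetic items out of 20
```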

b) Verification and Traceability

Ensure that work is genuinely performed by the miner and not copied from others.

Auto-solving Check (Gradient):

The subnet occasionally injects queries with known answers (“synthetic tasks”).

→ If a miner claims high performance but fails these hidden tests, its score automatically drops.
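A known-answer check can be folded into scoring by discounting a miner's score according to its accuracy on the hidden probes. This is a minimal sketch. The 80% threshold and the linear penalty are hypothetical choices, not Gradient's documented rule.

```python
def adjusted_score(claimed_score: float, probe_results: list[bool],
                   min_accuracy: float = 0.8) -> float:
    """Discount a miner's score when it fails hidden known-answer probes."""
    if not probe_results:
        return claimed_score  # no probes this round: nothing to check
    accuracy = sum(probe_results) / len(probe_results)
    if accuracy < min_accuracy:
        return claimed_score * accuracy  # poor probe accuracy drags the score down
    return claimed_score


print(adjusted_score(0.95, [True] * 10))                  # 0.95: passes every probe
print(adjusted_score(0.95, [True, False, False, False]))  # 0.2375: high claim, failed probes
```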

“Gold Data” Traps:

Validators send trap queries with known answers.

→ If a miner copies the response of another miner who didn't receive that trap, the fraud is immediately revealed.
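The trap logic boils down to a comparison. If a trap response misses the known answer but exactly matches what another miner returned to an ordinary query, that is a strong sign of copying. The sketch below uses hypothetical names. Real subnets would likely compare normalized or fuzzy-matched outputs rather than exact strings.

```python
def inspect_trap(trap_answer: str, response: str, other_responses: dict[str, str]) -> str:
    """Classify a miner's reply to a trap query whose answer only the validator knows.

    other_responses holds replies from miners who received ordinary queries, not the trap.
    """
    if response == trap_answer:
        return "pass"
    copied_from = sorted(uid for uid, r in other_responses.items() if r == response)
    if copied_from:
        return "suspected copy of " + ", ".join(copied_from)
    return "fail"


others = {"miner_b": "The capital of France is Paris.", "miner_c": "42"}
print(inspect_trap("1,024", "1,024", others))                            # pass
print(inspect_trap("1,024", "The capital of France is Paris.", others))  # suspected copy of miner_b
print(inspect_trap("1,024", "1,000", others))                            # fail
```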

Response Time Measurement:

Validators track how long miners take to respond.

→ Abnormally fast or slow replies can indicate copying, automation, or relaying.
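One robust way to spot abnormal latencies is to compare each miner with the median, scaled by the median absolute deviation (MAD). Unlike a mean, the median cannot be dragged around by a single extreme value. The threshold `k` is a hypothetical tuning knob, and a flag here is a reason to look closer, not proof of cheating.

```python
import statistics


def timing_flags(latencies: dict[str, float], k: float = 3.0) -> dict[str, str]:
    """Flag miners whose response time sits more than k MADs away from the median."""
    med = statistics.median(latencies.values())
    mad = statistics.median(abs(t - med) for t in latencies.values()) or 1e-9  # avoid dividing by 0
    flags = {}
    for uid, t in latencies.items():
        z = (t - med) / mad
        if z < -k:
            flags[uid] = "too fast"  # e.g. a cached or copied answer
        elif z > k:
            flags[uid] = "too slow"  # e.g. the query is being relayed elsewhere
    return flags


latencies = {"miner_a": 2.0, "miner_b": 2.2, "miner_c": 1.9,
             "miner_d": 2.1, "miner_e": 0.05, "miner_f": 9.0}  # seconds
print(timing_flags(latencies))  # {'miner_e': 'too fast', 'miner_f': 'too slow'}
```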

Collusion Detection (Aurelius):

Statistical correlation between a validator and a miner can expose collusion — for instance, if one validator consistently gives abnormally high scores to a specific miner.
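A simple version of this check compares each validator's score for a miner with the median of the other validators' scores for that same miner. A validator that sits far above consensus for one particular miner is worth investigating. This is a hypothetical deviation-from-consensus sketch, not the statistics Aurelius actually uses.

```python
import statistics


def collusion_suspects(scores: dict[str, dict[str, float]],
                       threshold: float = 0.3) -> list[tuple[str, str, float]]:
    """scores[validator][miner] is a score in [0, 1].

    Return (validator, miner, gap) where a validator rates a miner far above its peers' median.
    """
    suspects = []
    for validator, row in scores.items():
        for miner, score in row.items():
            peers = [scores[v][miner] for v in scores if v != validator and miner in scores[v]]
            if not peers:
                continue  # nobody else scored this miner: nothing to compare against
            gap = score - statistics.median(peers)
            if gap > threshold:
                suspects.append((validator, miner, round(gap, 3)))
    return suspects


scores = {
    "val_1": {"miner_x": 0.50, "miner_y": 0.60},
    "val_2": {"miner_x": 0.55, "miner_y": 0.62},
    "val_3": {"miner_x": 0.95, "miner_y": 0.58},  # val_3 is unusually generous to miner_x
}
print(collusion_suspects(scores))  # [('val_3', 'miner_x', 0.425)]
```

In practice, a single high score proves little. Repeating the check over many epochs and looking for a persistent pattern is what turns it into evidence.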

c) Incentives and Penalties

Reward honest behavior and punish cheating.

Collateral & Slashing:

Miners must deposit collateral to earn a yield bonus.

→ If they cheat, they risk losing this deposit (“slashing”), which strongly discourages dishonesty.
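The collateral mechanism can be modeled as a small account. Posted collateral unlocks a reward bonus, and a proven violation burns part of it. The 10% bonus and 50% slash below are hypothetical numbers for illustration only.

```python
from dataclasses import dataclass


@dataclass
class MinerAccount:
    collateral: float
    bonus_rate: float = 0.10  # hypothetical: +10% rewards while collateral is posted

    def reward(self, base: float) -> float:
        """Apply the yield bonus only if the miner has collateral at stake."""
        return base * (1 + self.bonus_rate) if self.collateral > 0 else base

    def slash(self, fraction: float) -> float:
        """Burn a fraction of the collateral after proven cheating; return the amount lost."""
        if not 0.0 <= fraction <= 1.0:
            raise ValueError("fraction must be between 0 and 1")
        penalty = self.collateral * fraction
        self.collateral -= penalty
        return penalty


account = MinerAccount(collateral=100.0)
print(round(account.reward(10.0), 2))  # 11.0
print(account.slash(0.5))              # 50.0
print(account.collateral)              # 50.0
```

The deterrent works as long as the expected loss from slashing exceeds whatever a cheater hopes to gain.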

Temporary Blacklist:

A miner suspected of fraud can be temporarily excluded from participation.

→ This prevents short-term exploitative behavior.
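A temporary exclusion can be tracked as a map from miner to the block height at which its ban expires. The class and the 720-block duration are hypothetical and chosen only for illustration.

```python
class TemporaryBlacklist:
    """Exclude suspected miners until a given block height, after which they may return."""

    def __init__(self, duration_blocks: int) -> None:
        self.duration = duration_blocks
        self.banned_until: dict[str, int] = {}

    def ban(self, miner_id: str, current_block: int) -> None:
        self.banned_until[miner_id] = current_block + self.duration

    def is_banned(self, miner_id: str, current_block: int) -> bool:
        return current_block < self.banned_until.get(miner_id, 0)


blacklist = TemporaryBlacklist(duration_blocks=720)  # hypothetical: two 360-block epochs
blacklist.ban("miner_x", current_block=10_000)
print(blacklist.is_banned("miner_x", 10_500))  # True: still excluded
print(blacklist.is_banned("miner_x", 10_720))  # False: the ban has expired
```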

Diminishing Rewards:

If a miner submits the same prediction twice or copies another’s output, the second one earns less.

→ Encourages diversity and genuine contribution.
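One way to implement this is to fingerprint each output after light normalization and pay each repeat a shrinking fraction of the base reward. This sketch assumes exact-match fingerprints and a hypothetical `decay` of 0.5. A real subnet might compare outputs semantically instead.

```python
import hashlib


def dedup_rewards(submissions: list[tuple[str, str]], base_reward: float,
                  decay: float = 0.5) -> dict[str, float]:
    """submissions are (miner_id, output) pairs in arrival order.

    The first copy of an output earns the full base reward; the n-th repeat earns base * decay**n.
    """
    seen: dict[str, int] = {}
    rewards: dict[str, float] = {}
    for miner_id, output in submissions:
        key = hashlib.sha256(output.strip().lower().encode()).hexdigest()  # normalized fingerprint
        repeats = seen.get(key, 0)
        rewards[miner_id] = rewards.get(miner_id, 0.0) + base_reward * decay ** repeats
        seen[key] = repeats + 1
    return rewards


subs = [("miner_a", "Paris"), ("miner_b", "paris "), ("miner_c", "Lyon")]
print(dedup_rewards(subs, base_reward=1.0))  # {'miner_a': 1.0, 'miner_b': 0.5, 'miner_c': 1.0}
```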

Diversity Bonus:

In creative subnets (e.g. text or design), original responses earn a reward bonus.

→ Incentivizes miners to produce unique outputs rather than copying others.
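A simple originality bonus can shrink as a response's overlap with its closest peer grows. This sketch measures overlap with word-level Jaccard similarity, and the `max_bonus` of 0.2 is hypothetical. Creative subnets would more likely use embeddings or other semantic measures.

```python
def jaccard(a: str, b: str) -> float:
    """Word-level overlap between two texts: 1.0 = same word set, 0.0 = no shared words."""
    sa, sb = set(a.lower().split()), set(b.lower().split())
    union = sa | sb
    return len(sa & sb) / len(union) if union else 1.0


def diversity_bonus(outputs: dict[str, str], max_bonus: float = 0.2) -> dict[str, float]:
    """The closer a response is to its most similar peer, the smaller its bonus."""
    bonus = {}
    for uid, text in outputs.items():
        closest = max((jaccard(text, other) for peer, other in outputs.items() if peer != uid),
                      default=0.0)
        bonus[uid] = max_bonus * (1.0 - closest)
    return bonus


outputs = {
    "miner_a": "a red fox jumps over the fence",
    "miner_b": "a red fox jumps over the fence",  # verbatim copy of miner_a
    "miner_c": "blue whales sing softly at dawn",
}
print(diversity_bonus(outputs))  # {'miner_a': 0.0, 'miner_b': 0.0, 'miner_c': 0.2}
```

Here both the original and the copy lose the bonus. Telling them apart requires extra signals such as timing or commitments, which is where the other mechanisms above come in.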

d) Rotation and Continuous Evolution

Prevent cheaters from adapting to fixed rules.

Tribunate Rotation (Aurelius):

Evaluation criteria and audit logic are regularly rotated.

→ Even if someone figures out how to “game” the system, the rules will soon change.
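Rotation can be sketched as picking a fresh subset of evaluation criteria for each rotation window, derived from a validator-side secret. The criteria names, window length, and function are hypothetical, and this article does not describe Aurelius's actual rotation schedule.

```python
import random

# Hypothetical pool of evaluation criteria; a real subnet defines its own.
CRITERIA = ["accuracy", "consistency", "robustness", "formatting", "latency", "safety"]


def active_criteria(epoch: int, rotation_period: int, k: int, secret: str) -> list[str]:
    """Pick k criteria for the current window; the set changes every rotation_period epochs."""
    window = epoch // rotation_period
    rng = random.Random(f"{secret}:{window}")
    return rng.sample(CRITERIA, k)


for epoch in (0, 1, 2, 3):
    print(epoch, active_criteria(epoch, rotation_period=2, k=3, secret="validator-secret"))
```

Epochs 0 and 1 share one set of criteria and epochs 2 and 3 share another, so any exploit tuned to one window has a limited shelf life.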

Code Variation Requirement (Ridges):

Miners must prove at least a 1.5% change in their code between two versions.

→ Encourages continuous innovation and prevents static setups from exploiting loopholes.
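A 1.5% change threshold needs some measure of "how much changed". One plausible measure is character-level similarity from Python's `difflib`. How Ridges actually computes the difference is not stated here, so treat this as a hypothetical stand-in.

```python
import difflib


def change_ratio(old_code: str, new_code: str) -> float:
    """Share of the code that differs between two versions (0.0 = identical, 1.0 = nothing shared)."""
    similarity = difflib.SequenceMatcher(None, old_code, new_code, autojunk=False).ratio()
    return 1.0 - similarity


def passes_variation_check(old_code: str, new_code: str, min_change: float = 0.015) -> bool:
    """True if the new version changed at least min_change (1.5% by default)."""
    return change_ratio(old_code, new_code) >= min_change


v1 = "def solve(x):\n    return x + 1\n"
v2 = v1.replace("x + 1", "x + 2")
print(passes_variation_check(v1, v1))  # False: resubmitting the same code
print(f"{change_ratio(v1, v2):.3f}", passes_variation_check(v1, v2))  # 0.032 True
```

On a tiny snippet, even a one-character edit clears 1.5%. On a real codebase of thousands of lines, the same threshold demands substantial changes, which is the point.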

These strategies are adaptive by design and illustrate how subnets can enforce honest behavior among miners.

III – Deregistration update: excluding bad players

All these mechanisms align with the core spirit of the network: competition and continuous improvement.

The entire system is designed to reward the best subnets (and, indirectly, their miners) while reducing rewards for underperforming ones.

Until recently, even the weakest and most inactive subnets were still earning rewards. This was unfair to the rest of the network and distorted the ecosystem by allowing extraction subnets with no real value to profit.

To address this and discourage bad actors, the deregistration mechanism was introduced.

This means extraction subnets can no longer remain idle; if they fail to contribute, they’ll be seized and put back up for sale. In mid-October 2025, the first case of this kind occurred. SN100 was the one to make history.

Times are getting tougher for cheaters. Across every layer of the network, from individual neurons to entire subnets, dishonest players are being phased out, strengthening Bittensor and pushing the boundaries of intelligence through decentralized competition.
