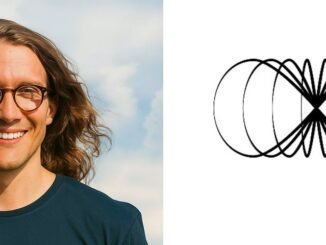

Macrocosmos, one of the most prominent development teams in the Bittensor ecosystem, has unveiled “Training at Home,” a MacBook application that lets ordinary users contribute compute power to decentralized AI training. The launch is a notable step in Bittensor’s push toward democratized, community-driven machine learning.

The app is positioned as an accessibility-first tool. It requires no coding, no infrastructure setup, and no specialized hardware beyond a standard MacBook. Users install the app and start contributing to AI model training in a few clicks, turning their laptops into small training nodes.

“Training at Home” integrates directly with Bittensor’s Subnet 9 (IOTA), a subnet focused on distributed pre-training of large language models.

The application supports IOTA’s goal of scaling decentralized training to a level that can compete with centralized cloud providers. By drawing on edge compute from users worldwide, the system aims to build a global, community-powered AI engine. In line with Bittensor’s incentive structure, participants will earn $TAO rewards for the compute they contribute.

Questions remain about the MacBook-only launch, expected earning rates, and whether non-Apple devices will be supported in the future.

In a space dominated by centralized AI giants, “Training at Home” represents a shift toward decentralized ownership of compute and model development, aiming to harness millions of consumer devices rather than large data centers. The team encourages users to join the movement and “contribute, compute, own the future.”

A waitlist is open for users who want early access.

As we await the official launch of “Training at Home,” you can follow Macrocosmos’ official channels or TAO Daily for the latest news.

Be the first to comment