While most AI focuses on text and images, Kinitro is tackling something harder: teaching AI to control physical robots. Operating as Subnet 26 on Bittensor, Kinitro runs competitions where AI agents compete to solve real-world robotics tasks. The better your AI performs, the more TAO tokens you earn.

This isn’t theoretical AI research. Kinitro uses simulated environments that mirror real robotics challenges. A robot arm needs to pick up and manipulate objects. A drone needs to navigate and deliver payloads. These are tasks that matter for actual robots that will work in homes and businesses.

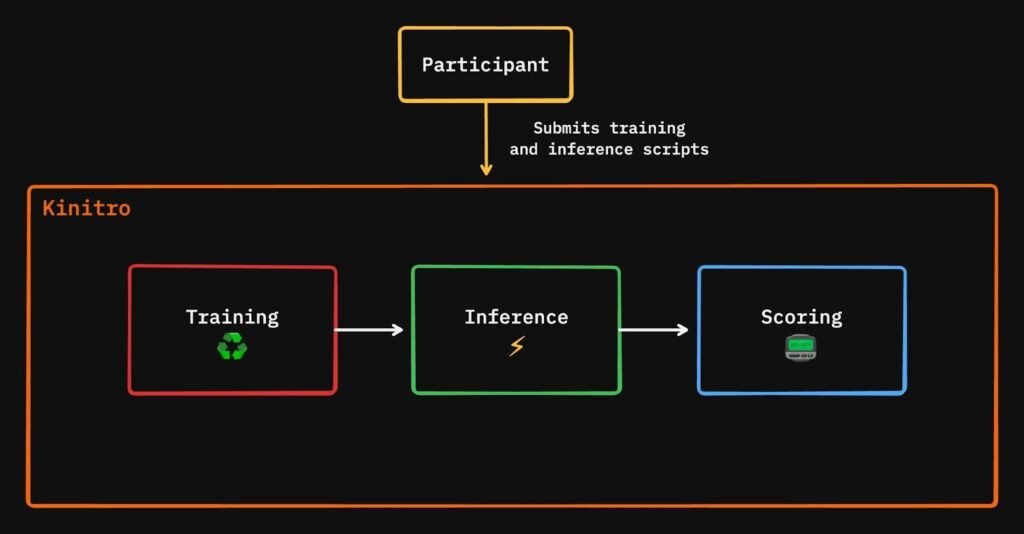

How Kinitro Works

The concept is straightforward: competitions where AI agents compete to complete tasks, with winners earning rewards.

Kinitro sets up challenges in simulated environments. These might include robot arms performing manipulation tasks, drones navigating spaces, or other scenarios involving embodied AI. Each challenge has a clear metric, usually a success rate that measures how often the AI completes the task correctly.
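To make the metric concrete, here is a minimal sketch of how a success rate could be computed from a list of episode outcomes. The function name and the sample outcomes are illustrative assumptions, not Kinitro's actual scoring code.

```python
from typing import Sequence


def success_rate(outcomes: Sequence[bool]) -> float:
    """Fraction of episodes that ended in success (0.0 if there are no episodes)."""
    if not outcomes:
        return 0.0
    return sum(outcomes) / len(outcomes)


# Hypothetical outcomes of ten evaluation episodes for one agent:
# True means the task was completed, False means it was not.
episodes = [True, True, False, True, True, True, False, True, True, True]

rate = success_rate(episodes)
print(f"Success rate: {rate:.0%}")
```

A real challenge would average over many more episodes, and possibly over several tasks, but the idea is the same: count the successes and divide by the number of attempts.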

Miners develop AI agents to compete in these challenges. They train their models, test them, and submit them through Kinitro’s system. Validators run the AI agents through the simulated tasks, measuring performance objectively. The AI that performs best earns the highest rewards in TAO tokens.

Everything happens transparently. All evaluations stream in real time to a public dashboard at kinitro.ai/dashboard. Anyone can watch AI agents competing and see which approaches work best. This openness accelerates learning across the whole community, because when someone discovers a technique that works, others can observe and build on it.

Real Progress on Hard Problems

Kinitro’s competitions are producing measurable results. In the Multi-Task Robot Arm challenge using the Metaworld MT10 environment, miners had achieved an 83% success rate as of December 2025. That means AI agents successfully complete complex manipulation tasks more than 8 out of 10 times.

For drone navigation and payload delivery, progress came even faster. Participants solved the navigation challenge within a day of its launch. This shows how competition and incentives can rapidly push performance when the problem is clearly defined.

The subnet is moving toward more complex challenges. Version 0.2, planned for February 2026, will introduce training-based evaluations. Instead of just submitting pre-trained models, miners will be able to show their full training process on curated datasets. This opens up harder problems that require longer learning periods.

Kinitro builds on standard tooling such as Gymnasium, a widely used interface for reinforcement learning environments, which means the skills AI agents develop here can transfer to real robotics applications. The simulations are designed to be realistic enough that success in simulation predicts success on actual hardware.
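For readers who have not seen a Gymnasium-style interface, the sketch below shows the basic loop an agent runs against an environment. To stay self-contained it does not import Gymnasium; instead, `ReachTarget1D` is a made-up toy environment that mimics the same `reset` and `step` conventions. The environment, the policy, and the `success` flag are all assumptions for illustration, not one of Kinitro's actual tasks.

```python
import random


class ReachTarget1D:
    """Toy stand-in environment: move a point along a line until it reaches a target."""

    def __init__(self, max_steps=50):
        self.max_steps = max_steps

    def reset(self, seed=None):
        # Gymnasium-style reset: returns (observation, info).
        rng = random.Random(seed)
        self.position = 0.0
        self.target = rng.uniform(-5.0, 5.0)
        self.steps = 0
        return (self.position, self.target), {}

    def step(self, action):
        # Gymnasium-style step: returns
        # (observation, reward, terminated, truncated, info).
        self.position += max(-1.0, min(1.0, action))  # clip the move to [-1, 1]
        self.steps += 1
        distance = abs(self.target - self.position)
        terminated = distance < 0.1  # close enough counts as reaching the target
        truncated = (not terminated) and self.steps >= self.max_steps
        info = {"success": terminated}
        return (self.position, self.target), -distance, terminated, truncated, info


def policy(observation):
    """Hypothetical hand-written agent: step toward the target."""
    position, target = observation
    return target - position


def run_episode(env, agent, seed):
    """Run one episode and report whether the agent succeeded."""
    obs, info = env.reset(seed=seed)
    while True:
        obs, reward, terminated, truncated, info = env.step(agent(obs))
        if terminated or truncated:
            return info["success"]


results = [run_episode(ReachTarget1D(), policy, seed=s) for s in range(20)]
print(f"{sum(results)} of {len(results)} episodes succeeded")
```

In a real submission, the hand-written `policy` would be replaced by a trained model, and the toy environment by a manipulation or navigation task, but the loop of observing, acting, and checking for termination stays the same.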

Why Competition Accelerates Development

The traditional way to develop robotics AI is slow: a company hires researchers, gives them a problem, and waits months or years for solutions. Kinitro does it differently.

By making challenges public and incentivizing participation with TAO rewards, Kinitro taps into a global pool of talent. Anyone with skills in reinforcement learning and AI can compete. The best solutions rise to the top naturally through objective performance metrics.

This creates several advantages. Development costs are lower because there’s no need to hire large teams when miners worldwide contribute solutions. Progress is faster because many approaches get tested simultaneously rather than sequentially. The best techniques become publicly visible, so everyone learns from what works.

For companies or research labs with specific robotics problems, Kinitro offers custom environment creation. They can define their exact challenge, set up the evaluation criteria, and let the decentralized network compete to solve it. The subnet handles all the infrastructure for running competitions and distributing rewards.

The Technology Behind It

Kinitro is built on solid technical foundations that make the competitions reliable and verifiable.

The backend uses FastAPI for handling submissions and scoring, with PostgreSQL storing all the evaluation data. Ray handles parallel execution of simulations, allowing many AI agents to be tested simultaneously. Everything runs in Docker containers for consistency; the same simulation environment that miners test locally is what validators use for official evaluations.
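The fan-out pattern behind parallel evaluation can be sketched with Python's standard library. This example uses `concurrent.futures.ThreadPoolExecutor` as a stand-in for Ray, and `evaluate_agent` is a placeholder that simulates episodes with a random number generator rather than running a real simulation. The agent names and skill values are invented for illustration.

```python
import random
from concurrent.futures import ThreadPoolExecutor


def evaluate_agent(agent_id, skill, episodes=100):
    """Placeholder evaluation: each simulated episode succeeds with probability `skill`."""
    rng = random.Random(agent_id)  # seeded per agent so repeated runs agree
    successes = sum(rng.random() < skill for _ in range(episodes))
    return agent_id, successes / episodes


def evaluate_all(submissions, max_workers=4):
    """Submit every agent to the pool, then collect the scores into a dict."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = [
            pool.submit(evaluate_agent, agent_id, skill)
            for agent_id, skill in submissions.items()
        ]
        return dict(future.result() for future in futures)


# Hypothetical submissions: agent name mapped to a made-up skill level.
submissions = {"agent-a": 0.9, "agent-b": 0.6, "agent-c": 0.75}

for agent_id, score in evaluate_all(submissions).items():
    print(f"{agent_id}: {score:.0%}")
```

A thread pool only illustrates the structure. A framework like Ray distributes the same kind of independent tasks across processes and machines, which matters when each evaluation is a heavy physics simulation.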

The integration with Bittensor handles rewards distribution. When validators score an AI agent’s performance, that data goes on-chain. Top performers earn TAO emissions automatically based on their position on the leaderboard. The whole process is transparent and verifiable.
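One simple way to picture leaderboard-based rewards is to normalize the top scores into shares that sum to one. The sketch below does exactly that. The proportional rule and the `top_k` cutoff are assumptions chosen for illustration, and the miner names are made up; the actual weighting Kinitro's validators use is not described here and may differ.

```python
def leaderboard_weights(scores, top_k=3):
    """Turn raw scores into normalized reward shares for the top_k performers."""
    ranked = sorted(scores.items(), key=lambda item: item[1], reverse=True)[:top_k]
    total = sum(score for _, score in ranked)
    if total == 0:
        return {}  # nothing to distribute if nobody scored
    return {miner: score / total for miner, score in ranked}


# Hypothetical success rates for four miners.
scores = {"miner-1": 0.83, "miner-2": 0.70, "miner-3": 0.50, "miner-4": 0.10}

for miner, share in leaderboard_weights(scores).items():
    print(f"{miner}: {share:.1%} of rewards")
```

Under this rule a higher score always earns a larger share, and miners outside the top `top_k` earn nothing, which is one way to keep the incentive focused on the best-performing agents.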

Miners can develop and test locally before submitting. The code is open-source on GitHub, so anyone can set up the evaluation environment on their own machine. This means you can iterate quickly without using network resources until you have something worth submitting officially.

From Storage to Robotics

Kinitro has an interesting history. It started as Storb, a decentralized storage subnet. In mid-2025, the team behind Threetau acquired it and rebranded it to focus on embodied intelligence (AI that controls physical systems).

This pivot made sense. Bittensor already had several subnets working on storage, but embodied AI was underserved. The robotics and physical AI market is enormous and growing fast, with humanoid robots and drones becoming commercially viable. Kinitro positioned itself to capture that opportunity.

The Threetau team brought experience in decentralized AI projects and rebuilt the subnet infrastructure specifically for robotics challenges. They recently secured a 50 TAO funding deal with const_reborn to accelerate development, a sign that Bittensor community members are confident in the direction.

What Makes This Valuable

Kinitro matters because it’s solving a specific, high-value problem: how to develop AI that can control physical systems reliably and safely.

Robotics companies need AI that can manipulate objects, navigate spaces, and complete tasks without constant human supervision. Developing this is expensive and time-consuming. Kinitro offers an alternative: define your challenge, set your metrics, and let a decentralized network compete to solve it.

For the broader Bittensor ecosystem, Kinitro demonstrates how subnets can target specific industries. While some subnets aim for general AI capabilities, Kinitro focuses narrowly on embodied intelligence. This specialization makes it easier to measure value: either the AI can control the robot successfully, or it can’t.

The public nature of the competitions also creates a knowledge commons. Techniques that work get observed and built upon. The whole field of embodied AI benefits when breakthroughs are visible rather than locked behind corporate walls.

Looking Ahead

Kinitro’s roadmap for v0.2 in February 2026 includes training-based evaluations, new competition environments, and various technical improvements. The team has teased upcoming challenges that may involve humanoid robots, aligning with the broader robotics trends at CES 2026, where humanoid robots for home use were prominently featured.

The subnet is actively seeking partnerships with companies and research labs that have custom robotics challenges. It offers bounties and revenue sharing for successful client introductions, turning the community into a business development network.

As humanoid robots, drones, and other embodied AI systems become more common, the demand for reliable control algorithms will grow. Kinitro is positioning itself to be the platform where those algorithms get developed through competition and decentralized innovation.

For anyone interested in robotics AI, Kinitro offers a clear path to contribution: build an agent that performs well in its challenges, earn TAO rewards, and potentially see your approach deployed in real systems. All the code is open-source at github.com/Kinitro, documentation is at kinitro.ai/docs, and the community is active on Discord.
