By: Eyad Gomaa, CEO @TroyQuasar || Youssef Farahat, CTO @Farahatyoussef0

The Long Memory Problem

For most people, artificial intelligence feels impressive but oddly forgetful. You can paste in a document, ask a few good questions, and then suddenly the system starts to lose the thread. Ask it to remember too much and it slows down, becomes expensive, or simply breaks.

For Eyad Gomaa and Youssef Farahat, that limitation was not just frustrating. It was existential.

“Imagine an intelligence capable of solving something as complex as cancer,” Eyad says, “but it can only remember a tiny fraction of what it knows at once.” In his view, that is not intelligence at all. It is brilliance locked in a box.

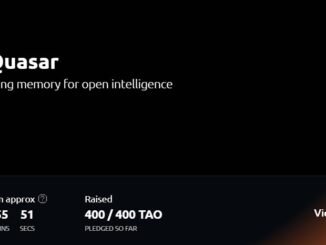

This idea sits at the heart of SILX AI’s new Quasar model, built to break the long-context bottleneck.

Founders Shaped by Code, Early

Eyad learned to code when he was eleven. At the time, it was not about artificial intelligence or grand visions of the future. It was about building things that felt magical. Small apps, simple websites, tools that impressed friends and family.

But something shifted as he grew older. He began to see how a few lines of code could quietly change the way people worked, learned, and lived. Software stopped being a hobby and started to feel like responsibility.

Now eighteen, Eyad speaks with the calm intensity of someone who has been thinking about the same problem for years. His path led him deep into neural networks, the mathematical systems that sit beneath modern AI. Before transformers became dominant, he worked with older models that processed information one piece at a time, slowly and sequentially.

Transformers promised a breakthrough by processing many pieces of information at once. Yet even they, Eyad realised, were still constrained by memory.

Why AI Forgets

To understand what SILX is building, it helps to think of AI memory like a desk. Most models work with a very small desk space. You can spread out a few pages and reason well, but if you try to place an entire library on the desk, things collapse.

This desk space is called the context window. It defines how much information an AI model can hold in its “working memory” at any one time.
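A toy sketch makes the desk analogy concrete. It assumes the simplest possible policy, keeping only the most recent tokens, and the function name `fit_to_context_window` is hypothetical, chosen for illustration rather than taken from any real system:

```python
def fit_to_context_window(tokens, window_size):
    """Keep only the most recent `window_size` tokens; older ones fall off the desk."""
    if window_size <= 0:
        return []
    # Negative slicing keeps the tail of the list; shorter inputs pass through whole.
    return tokens[-window_size:]


# Illustrative input: nine "tokens" (here, plain words) and a four-token window.
document = "the quick brown fox jumps over the lazy dog".split()
visible = fit_to_context_window(document, window_size=4)
print(visible)  # ['over', 'the', 'lazy', 'dog']
```

Everything before "over" is simply gone from the model's working memory, which is why questions about the start of a long document can go wrong.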

Today, most systems rely on shortcuts to cope. They summarise information, retrieve fragments, or shuffle data in and out. These tricks work occasionally, but accuracy suffers. Important details get lost.

Eyad saw this as a fundamental architectural problem rather than a surface-level one.

Breaking the Architecture, Not Polishing It

Many AI teams try to improve memory by optimising existing systems. SILX chose a more radical path.

Eyad and Youssef’s research led them to a surprising conclusion. The problem was not only how AI pays attention to information, but how it keeps track of where information sits in a sequence. Most models rely on something called position embeddings, which are essentially labels telling the AI where each word belongs.

Those labels turn out to be fragile. Train a model to handle a certain length of text, and it tends to fail once you exceed that length, even if the underlying intelligence is capable of more.
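One common form of these labels is a learned lookup table with one row per position. The sketch below is an illustration under that assumption, not a description of any particular model; the class `LearnedPositionTable` and the helper `embed_positions` are hypothetical names, and the random values stand in for weights that training would normally produce. Other positional schemes degrade more gradually instead of stopping outright.

```python
import random


class LearnedPositionTable:
    """A fixed-size table of position vectors, one row per trained position."""

    def __init__(self, max_length, dim, seed=0):
        rng = random.Random(seed)
        self.max_length = max_length
        # Stand-in for trained weights: one vector per position seen in training.
        self.table = [
            [rng.uniform(-1.0, 1.0) for _ in range(dim)]
            for _ in range(max_length)
        ]

    def lookup(self, position):
        if position < 0 or position >= self.max_length:
            raise IndexError(
                f"position {position} is outside the trained range 0..{self.max_length - 1}"
            )
        return self.table[position]


def embed_positions(table, sequence_length):
    """Fetch the label for every position in a sequence of the given length."""
    return [table.lookup(p) for p in range(sequence_length)]


table = LearnedPositionTable(max_length=8, dim=4)
print(len(embed_positions(table, 8)))  # 8: fits the trained length

try:
    embed_positions(table, 9)  # one token too many
except IndexError as error:
    print("failed:", error)
```

The table has no row for position 8, so the ninth token has no label at all, regardless of how capable the rest of the model is.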

SILX removes that limitation entirely. Their approach eliminates position embeddings altogether, allowing models to process vastly more information without collapsing under their own structure.
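To show what an attention step without position labels can look like, here is a minimal sketch of standard causal attention with no positional term. This is a generic textbook construction, not SILX's architecture, whose details the article does not give; the function name `causal_attention` and the toy vectors are assumptions for illustration.

```python
import math


def causal_attention(queries, keys, values):
    """Scaled dot-product attention with a causal mask and no positional term."""
    dim = len(queries[0])
    outputs = []
    for i, query in enumerate(queries):
        # Causal mask: token i may only look at tokens 0..i.
        scores = [
            sum(a * b for a, b in zip(query, keys[j])) / math.sqrt(dim)
            for j in range(i + 1)
        ]
        # Numerically stable softmax over the visible tokens.
        peak = max(scores)
        weights = [math.exp(s - peak) for s in scores]
        total = sum(weights)
        weights = [w / total for w in weights]
        # Weighted average of the visible value vectors.
        outputs.append([
            sum(w * values[j][d] for j, w in enumerate(weights))
            for d in range(len(values[0]))
        ])
    return outputs


short = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
longer = short * 20  # 60 tokens, with no table to outgrow

print(len(causal_attention(short, short, short)))     # 3
print(len(causal_attention(longer, longer, longer)))  # 60
```

Nothing in the function depends on a maximum length, so the same code runs on 3 tokens or 60. Whether a model built this way stays accurate on very long inputs depends on how it is trained, which this sketch does not address.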

In practical terms, this means feeding an AI entire books, codebases, or personal archives and still being able to ask precise questions. Not summaries. Not fragments. The whole thing.

From Personal Frustration to Shared Mission

SILX co-founder Youssef Farahat comes at the problem from a different angle. As a researcher who frequently runs AI models locally, Youssef was repeatedly blocked by arbitrary limits. Files had to be split. Context had to be trimmed. The system worked against him.

Together, they shared a simple belief. If AI is meant to help people think, it should not forget what it has already seen.

This belief extends beyond convenience. In their view, long-term memory is essential for any serious form of artificial intelligence. A system that cannot hold context cannot reason deeply, learn continuously, or work autonomously for long periods of time.

Open Source as a Moral Choice

SILX is not just a technical project. It is also a philosophical one.

Eyad speaks openly about the widening gap between closed, corporate AI systems and the open-source community. Large companies can afford vast computing resources. Independent developers cannot. Without structural change, open systems will always trail behind.

By enabling massive memory scaling for any open-source model, SILX aims to close that gap. Their technology can be applied beyond their own models, giving developers a way to build more capable systems without surrendering control.

In plain terms, SILX wants AI that works for people, not against them. Systems you can run locally. Systems that do not charge more simply because they remember more. Systems that stay with you rather than extracting value from you.

What Success Looks Like

Looking ahead, Eyad imagines AI agents that can work continuously without degrading. Systems that can research problems over days or weeks without losing coherence. Tools that feel less like chatbots and more like collaborators.

For everyday users, the change is simpler but just as powerful. You can give an AI your files, your notes, your books, and talk to it naturally without experiencing signal lock or memory reset. The intelligence stays present.

Reclaiming the Narrative

Eyad rejects the idea that AI is here to replace people. He sees it as amplification rather than substitution. A tool that removes friction and expands possibility.

“AI is coming whether we like it or not,” he says. “The real question is whether it comes closed and extractive, or open and working for you.”

SILX is the answer to that question.

Not as a finished solution, but as infrastructure. A foundation that allows intelligence to remember, reflect, and stay present long enough to matter.

In a world rushing toward faster answers, SILX is quietly solving the deeper problem. How to let machines remember what they already know, so humans can go further than before.

Discover how SILX AI is solving the deeper problem of AI: follow @QuasarModels on X and help co-create the future.
