Targon, operating as Subnet 4 on Bittensor, stands out as a high-speed, confidential compute cloud designed for secure AI inference and model hosting. Built by Manifold Labs, Targon leverages technologies like Confidential Compute (CC) and Protected PCIe (PPCIE) to ensure data privacy and verifiable operations, making it a foundational layer for other subnets to build upon.

By providing scalable, low-cost GPU resources and deterministic verification, Targon incentivizes miners to deliver fast, accurate AI predictions, creating a marketplace where compute becomes a commoditized resource. This has sparked a wave of collaborations, where subnets “stack” on Targon to multiply efficiencies, reduce costs, and accelerate real-world adoption.

Key Collaborations on Targon

Targon’s appeal lies in its ability to handle massive workloads at a fraction of the cost of centralized providers, drawing integrations from specialized subnets and even external tools. These partnerships exemplify the “subnets using subnets” mantra. Let’s explore:

Dippy AI (Subnet 11)

Dippy AI, an established roleplay companion app with 8 million users, relies on Targon for the backend inference behind its conversational and personalized experiences. Dippy has reported that Targon processes roughly 50% of its text inference. This shift from centralized providers to Targon’s decentralized infrastructure demonstrates enterprise-grade reliability, with Targon handling inference at speeds and costs that outpace traditional clouds.

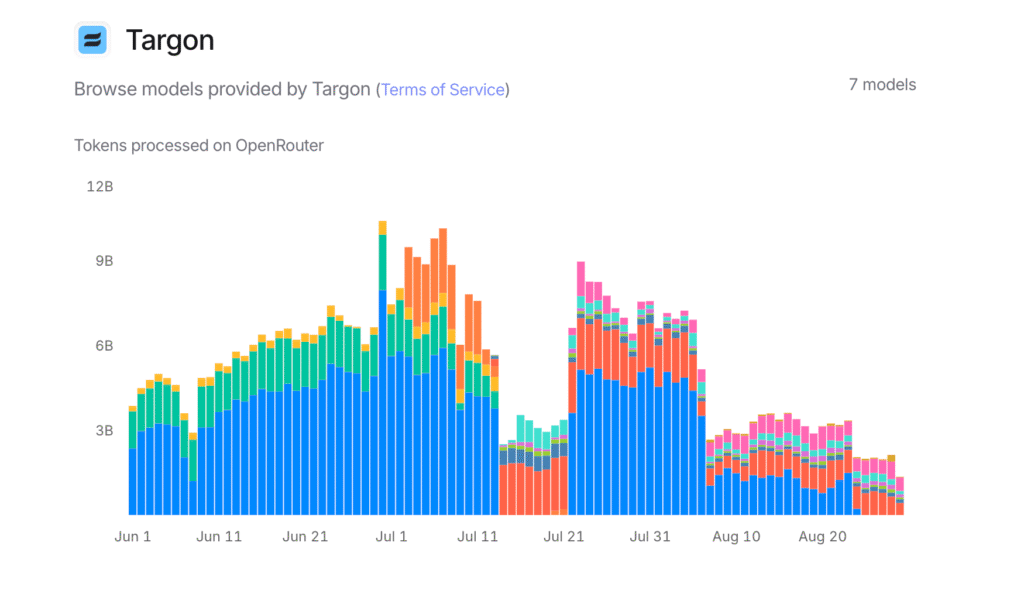

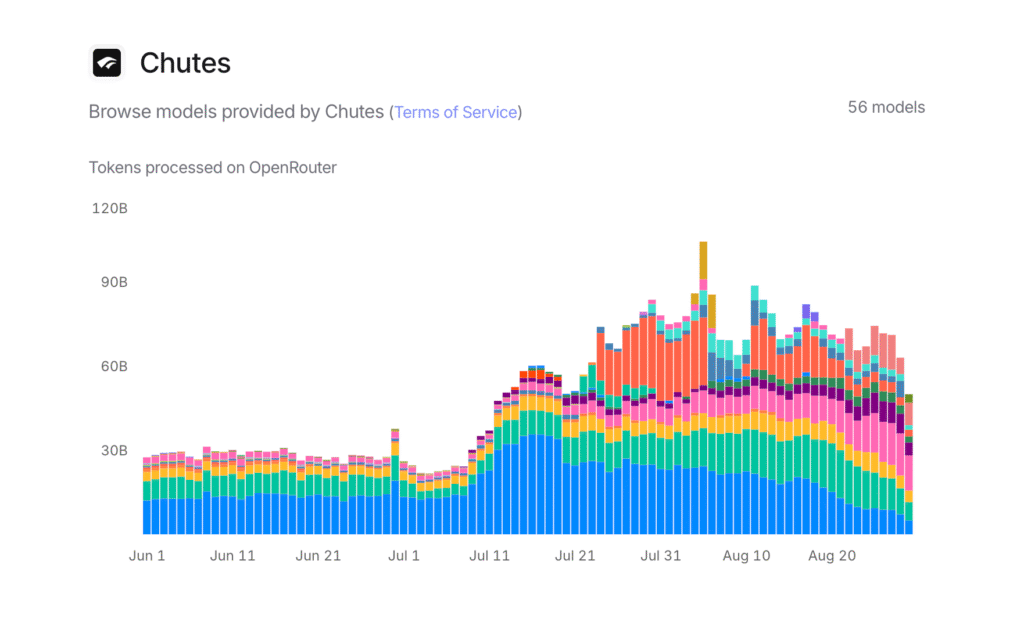

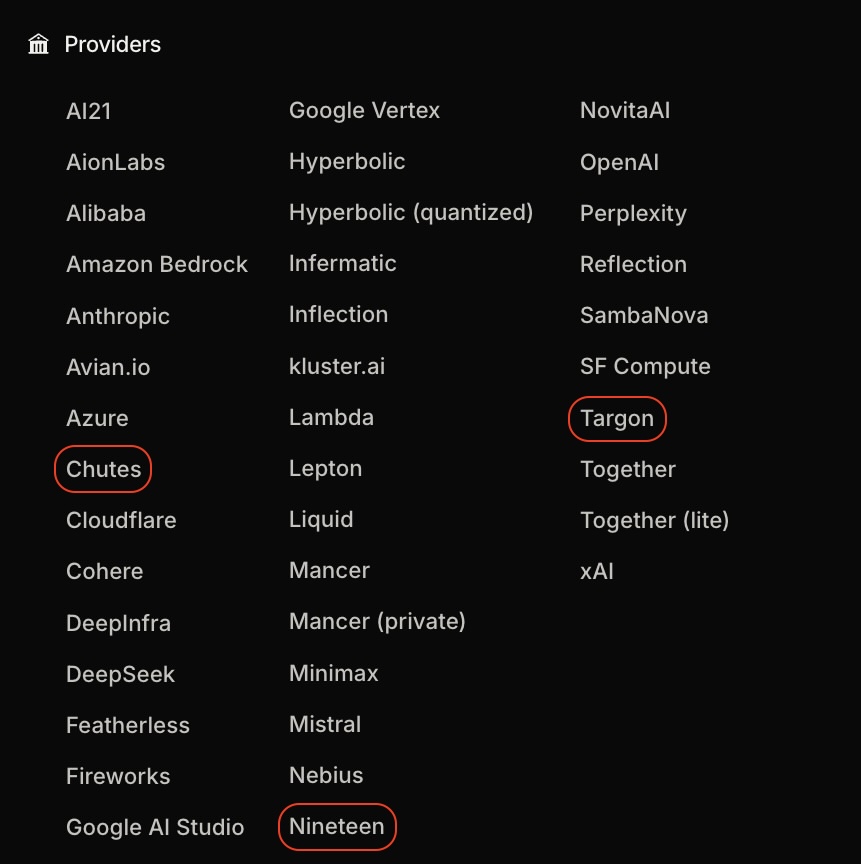

Chutes (Subnet 64) and Nineteen (Subnet 19): Enhanced Inference via OpenRouter Integration

Targon, alongside Chutes (SN64), helps deliver some of the world’s lowest-cost inference for top open LLMs, with these subnets collectively serving over 1 trillion tokens monthly via platforms like OpenRouter. This presence on OpenRouter allows users to leverage Targon’s confidential compute in tandem with Chutes’ optimizations, facilitating massive-scale tasks at as little as 1/200th the cost of competitors.

Similarly, Nineteen (SN19) is available through OpenRouter for decentralized inference in LLM and image generation. These OpenRouter integrations not only reduce latency and expenses but also enable shared access to innovations.
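For builders curious what this looks like in practice, here is a minimal sketch of a chat request that asks OpenRouter to prefer specific providers. It uses OpenRouter's provider-routing fields (`order`, `allow_fallbacks`) on the chat completions endpoint. The provider names "Targon" and "Chutes" and the model ID are assumptions for illustration; check OpenRouter's model pages for the providers actually serving a given model. The `build_request` and `send` helpers are hypothetical names, not part of any SDK.

```python
import json
import urllib.request

OPENROUTER_URL = "https://openrouter.ai/api/v1/chat/completions"


def build_request(prompt, model, providers, allow_fallbacks=False):
    """Build a chat payload that asks OpenRouter to try `providers` in order.

    The provider names are passed through as-is; whether a given name
    (e.g. "Targon") serves the chosen model is an assumption to verify.
    """
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "provider": {
            "order": list(providers),            # preferred providers, first to last
            "allow_fallbacks": allow_fallbacks,  # False: fail rather than route elsewhere
        },
    }


def send(payload, api_key):
    """POST the payload to OpenRouter and return the decoded JSON response."""
    request = urllib.request.Request(
        OPENROUTER_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=60) as response:
        return json.load(response)


if __name__ == "__main__":
    # Model ID and provider names below are illustrative assumptions.
    payload = build_request(
        "Summarize what a Bittensor subnet is in one sentence.",
        model="deepseek/deepseek-chat",
        providers=["Targon", "Chutes"],
    )
    # Only prints the payload; call send(payload, api_key) to actually run it.
    print(json.dumps(payload, indent=2))
```

Setting `allow_fallbacks` to `False` makes the request fail instead of silently routing to a different provider, which is useful when the goal is to measure a specific subnet's latency or cost.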

Broader Integrations: Sybil.com

Targon’s influence extends to external tools and products. For instance, it’s integrated into Sybil.com, an AI-powered search engine that uses Targon’s infrastructure for secure, decentralized queries. Revenue-sharing mechanisms from Targon, such as buybacks of SN4 alpha tokens, ensure that these integrations translate to tangible rewards for participants.

The Impact of Targon: A Multiplier for Bittensor’s Growth

These collaborations illustrate Targon’s role as one of Bittensor’s “growth engines,” where subnets stack to create compounding efficiencies. By decentralizing compute, Targon lowers barriers for AI builders, outpacing Web2 incumbents in speed, cost, and security.

As Bittensor expands, these integrations position Targon as a cornerstone, driving TAO’s value through real utility and ecosystem synergy. They also signal a maturing network where collaboration isn’t just encouraged; it’s economically inevitable.
