In January 2024, a finance worker at Arup joined what looked like a routine meeting with the company’s CFO and other executives. Everything seemed normal: the faces, the voices, even the small talk. By the end of that call, he had wired $25 million to fraudsters. Every participant on the screen had been a deepfake.

That’s not an isolated story.

In 2025 alone, deepfake-related fraud has already cost nearly $900 million worldwide — a figure that’s still climbing. Fake celebrity endorsements have drained over $400 million from victims. Impersonations of executives, like Arup’s case, account for $217 million. Romance scams, fraudulent loans, biometric bypasses — the numbers are multiplying fast.

We’re witnessing the collapse of the idea that “seeing is believing.” And the truth is, the tools that got us here — the same AI engines that can spin words into worlds — are also the only tools that might save us.

When AI Turns Against Itself

OpenAI’s Sora 2, a video-generation platform that lets users insert themselves into any scene, dropped this week. Within hours, users were creating fake historical clips, celebrity deepfakes, and uncanny forgeries of real events.

We’re sliding toward an internet where authenticity is optional — where fake bodycam footage, synthetic news broadcasts, and imitation influencers flood every feed.

So the question becomes: “What if the only thing that can stop a deepfake is a better deepfake?”

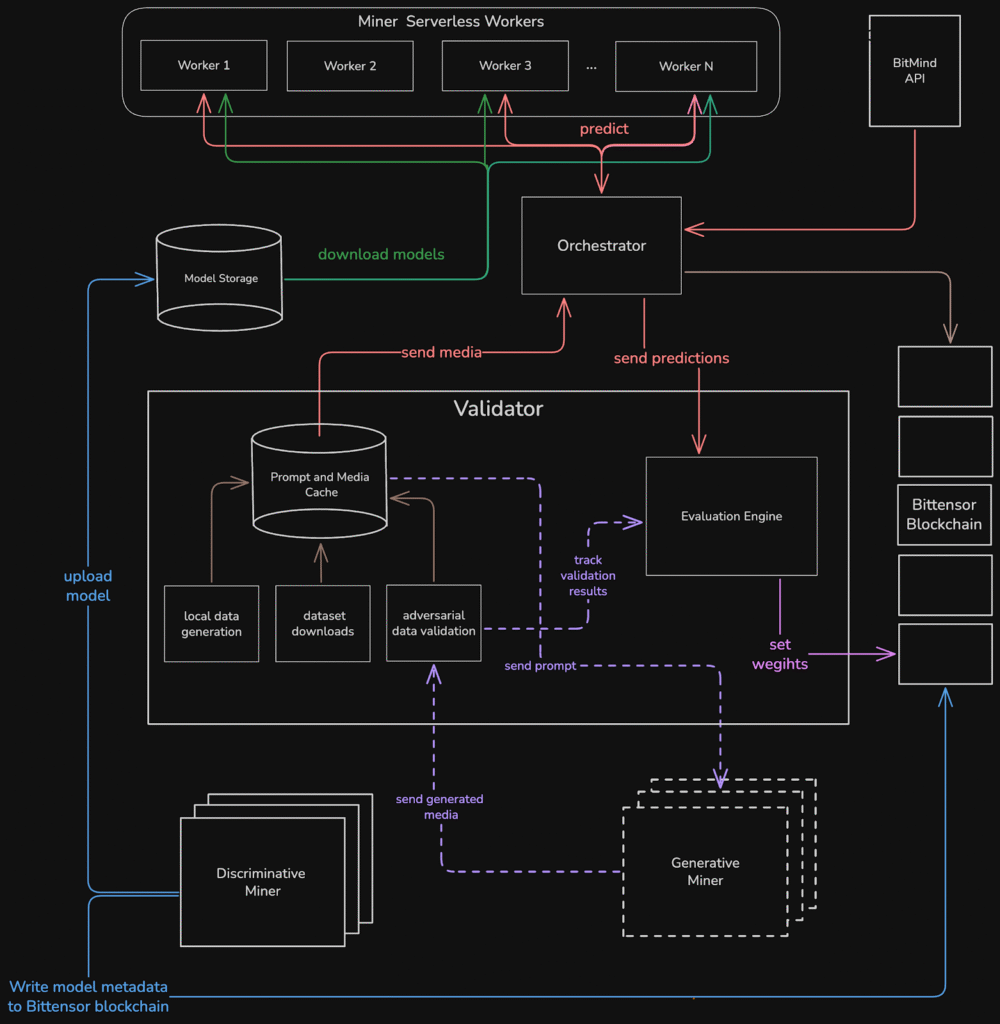

That’s the philosophy behind BitMind’s GAS — the Generative Adversarial Subnet — a decentralized AI system built on Bittensor’s Subnet 34 that turns the same generative arms race responsible for fake media into a global defense mechanism.

How BitMind’s GAS Works

At its core, GAS mirrors the biological concept of an arms race — predator and prey evolving in lockstep.

In traditional GANs (Generative Adversarial Networks), one AI generates fakes while another tries to detect them.

Over time, both improve. BitMind has flipped that concept into an open, blockchain-based competition where thousands of participants help each other get stronger — not through trust, but through rivalry.
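For reference, the adversarial game in a standard GAN is usually written as a minimax objective. The discriminator $D$ tries to maximize its ability to tell real samples $x$ from generated samples $G(z)$, and the generator $G$ tries to minimize it:

```latex
\min_G \max_D \; \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
```

This is the classic two-network formulation, not BitMind’s scoring rule. In GAS, as described here, the two sides are played by separate, competing miners rather than two networks trained jointly.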

Here’s how it’s structured:

1. Discriminative Miners

They build AI models that detect deepfakes. The better their accuracy, the more they earn.

2. Generative Miners

These miners create convincing synthetic content designed to fool the detectors. When they succeed, it exposes weaknesses that strengthen the system.

3. Validators

The validators act as referees, scoring submissions and mixing real and synthetic data to ensure fair evaluation.

No NDAs, no private datasets and no walled gardens. Just a permissionless, global contest where innovation happens in the open — and the best ideas rise naturally.
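To make the three roles concrete, here is a minimal sketch of one scoring round. It assumes a validator mixes real and synthetic samples, scores each detector by accuracy on the mixed batch, and credits a generator whenever its fakes are labeled real. All names (`run_round`, `RoundResult`, the toy string “samples”) are hypothetical; the source does not describe BitMind’s actual scoring code.

```python
import random
from dataclasses import dataclass
from typing import Callable, Dict, List

# Hypothetical detector interface: takes a sample, returns estimated P(fake) in [0, 1].
Detector = Callable[[str], float]


@dataclass
class RoundResult:
    detector_accuracy: Dict[str, float]    # detector name -> accuracy on the mixed batch
    generator_fool_rate: Dict[str, float]  # generator name -> share of its fakes judged real


def run_round(real: List[str], fakes_by_gen: Dict[str, List[str]],
              detectors: Dict[str, Detector], seed: int = 0) -> RoundResult:
    rng = random.Random(seed)
    # Validator role: mix real (label 0) and synthetic (label 1) items, remember
    # which generator produced each fake, then shuffle so ordering leaks nothing.
    batch = [(x, 0, None) for x in real]
    for gen, items in fakes_by_gen.items():
        batch += [(x, 1, gen) for x in items]
    rng.shuffle(batch)

    accuracy = {}
    fooled = {gen: 0 for gen in fakes_by_gen}
    for name, detect in detectors.items():
        correct = 0
        for x, label, gen in batch:
            pred = 1 if detect(x) >= 0.5 else 0
            correct += int(pred == label)
            if label == 1 and pred == 0:  # a fake slipped past this detector
                fooled[gen] += 1
        accuracy[name] = correct / len(batch)

    # Generator role: fool rate averaged over every detector that saw its fakes.
    fool_rate = {gen: fooled[gen] / max(1, len(items) * len(detectors))
                 for gen, items in fakes_by_gen.items()}
    return RoundResult(accuracy, fool_rate)


# Toy usage: strings stand in for media, and the detectors just inspect a prefix.
real = ["real:1", "real:2"]
fakes = {"gen_a": ["gen_a:1", "gen_a:2"], "gen_b": ["gen_b:1", "gen_b:2"]}
detectors = {
    "sharp": lambda x: 0.0 if x.startswith("real") else 1.0,
    "naive": lambda x: 1.0 if x.startswith("gen_a") else 0.0,
}
result = run_round(real, fakes, detectors)
```

In this toy round, the “naive” detector misses every `gen_b` fake, so `gen_b` earns a nonzero fool rate. That is exactly the kind of weakness the text says generative miners are rewarded for exposing.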

Turning Detection Into a Decentralized Economy

Unlike centralized systems hidden behind corporate APIs, GAS operates transparently. Every submission, model, and metric is auditable on-chain.

The subnet is incentive-driven, not corporate-controlled:

1. Models are rewarded for performance, not pedigree.

2. Accuracy is proven, not promised.

3. Participation is open to anyone with GPUs and ideas — no investor introductions required.
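“Rewarded for performance” can be illustrated as simple arithmetic. The sketch below assumes a payout pool is split in proportion to each miner’s score raised to a power. The function name, the `sharpness` exponent, and the scores are all hypothetical. Bittensor distributes emissions through its own consensus over validator-set weights, which this does not reproduce.

```python
from typing import Dict


def split_rewards(scores: Dict[str, float], emission: float,
                  sharpness: float = 2.0) -> Dict[str, float]:
    """Hypothetical payout rule: split `emission` in proportion to score ** sharpness."""
    # Raising scores to a power > 1 makes top performers earn disproportionately more.
    boosted = {miner: max(score, 0.0) ** sharpness for miner, score in scores.items()}
    total = sum(boosted.values())
    if total == 0:
        return {miner: 0.0 for miner in scores}
    return {miner: emission * b / total for miner, b in boosted.items()}


payouts = split_rewards({"miner_a": 0.9, "miner_b": 0.6, "miner_c": 0.3}, emission=100.0)
```

With `sharpness=2.0`, a miner whose score is three times higher than another’s earns nine times as much. The exponent is only an illustrative design choice.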

BitMind’s team reports 98% accuracy detecting content from major generators like SDXL and RealVis, while maintaining over 90% accuracy on real images from ImageNet.

That said, the team is refreshingly candid about the limits: on new, out-of-distribution data (like Midjourney), accuracy drops to around 42%. Instead of hiding that, BitMind publishes it. Transparency, after all, is the first step toward progress.
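A single headline accuracy can hide that kind of gap. Reporting accuracy per generator source exposes it. Here is a minimal sketch, using toy records that are not BitMind data. The source labels and function name are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def accuracy_by_source(records: Iterable[Tuple[str, int, int]]) -> Dict[str, float]:
    """records: (source, true_label, predicted_label); labels are 1 = fake, 0 = real."""
    hits: Dict[str, int] = defaultdict(int)
    totals: Dict[str, int] = defaultdict(int)
    for source, y_true, y_pred in records:
        totals[source] += 1
        hits[source] += int(y_true == y_pred)
    return {source: hits[source] / totals[source] for source in totals}


# Toy records: a detector that handles a familiar generator well
# and a new, unseen one poorly.
records = [
    ("seen_generator", 1, 1), ("seen_generator", 1, 1),
    ("seen_generator", 0, 0), ("seen_generator", 1, 0),
    ("unseen_generator", 1, 0), ("unseen_generator", 1, 1),
]
per_source = accuracy_by_source(records)
overall = sum(int(t == p) for _, t, p in records) / len(records)
```

Here the overall figure (about 67%) blends a 75% score on the familiar source with 50% on the unseen one. Publishing the breakdown is what makes the out-of-distribution weakness visible.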

Scaling Trust Without Centralization

One of the hardest problems in AI safety is scale. Traditional detection models can’t keep up: they’re costly to run, slow to update, and locked behind company firewalls. BitMind’s architecture is designed to address that.

Miners don’t run detection on user data themselves. They submit models, not predictions.

BitMind then deploys those models across secure infrastructure, allowing it to:

1. Process detections safely (without exposing sensitive media).

2. Scale horizontally without burning miner compute.

3. Aggregate top-performing models into a “meta-detector” that continuously learns from the competition.

This makes it enterprise-ready — a decentralized backend with centralized reliability.
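The source doesn’t say how the “meta-detector” combines models. One common approach is a score-weighted ensemble of the top-k leaderboard entries. The sketch below assumes that approach, with hypothetical names (`build_meta_detector`, the toy constant-output models). It is not BitMind’s implementation; stacking or learned weighting would be equally plausible.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical model interface: feature vector -> estimated P(fake).
Detector = Callable[[List[float]], float]


def build_meta_detector(models: Dict[str, Tuple[Detector, float]], k: int = 3) -> Detector:
    """Combine the top-k models (by leaderboard score) into one weighted-average detector."""
    top = sorted(models.items(), key=lambda kv: kv[1][1], reverse=True)[:k]
    total = sum(score for _, (_, score) in top)
    if total <= 0:
        raise ValueError("need at least one model with a positive score")

    def meta(x: List[float]) -> float:
        # Each model's vote counts in proportion to its leaderboard score.
        return sum(score * detect(x) for _, (detect, score) in top) / total

    return meta


# Toy usage: constant-output models, so the features are ignored.
models = {
    "a": (lambda x: 0.9, 0.9),
    "b": (lambda x: 0.6, 0.6),
    "c": (lambda x: 0.1, 0.3),
    "d": (lambda x: 0.0, 0.1),  # lowest score: dropped when k=3
}
meta = build_meta_detector(models, k=3)
p_fake = meta([0.0])
```

Because only submitted models run on the operator’s infrastructure, rebuilding this ensemble whenever the leaderboard changes doesn’t require any miner to be online.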

How BitMind Trains Its AI Immune System

Each training cycle is a stress test of creativity and deception. Validators generate challenges by:

1. Captioning real images.

2. Enriching them with LLMs for context.

3. Producing synthetic variants using 15 different generators (Stable Diffusion, HunyuanVideo, Mochi-1, and more).

The system mixes these fakes with authentic images and videos, layering distortions — color shifts, compression artifacts, and motion blur — to mimic real-world conditions.
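The mixing-and-distortion step can be sketched with NumPy alone. This sketch starts from already-generated images; the captioning, LLM enrichment, and generator calls are out of scope. All function names and parameter values are hypothetical. The `quantize` step is a crude stand-in for compression artifacts; real JPEG uses block DCT quantization.

```python
import numpy as np


def color_shift(img, rng, max_shift=0.1):
    # Add a random per-channel offset, then clip back into [0, 1].
    shift = rng.uniform(-max_shift, max_shift, size=(1, 1, img.shape[2]))
    return np.clip(img + shift, 0.0, 1.0)


def quantize(img, levels=16):
    # Crude compression stand-in: snap each channel to `levels` discrete values.
    return np.round(img * (levels - 1)) / (levels - 1)


def motion_blur(img, length=5):
    # Horizontal box blur: average `length` neighbouring pixels along the width axis.
    pad = length // 2
    padded = np.pad(img, ((0, 0), (pad, length - 1 - pad), (0, 0)), mode="edge")
    w = img.shape[1]
    return np.mean([padded[:, i:i + w, :] for i in range(length)], axis=0)


def build_challenge(real_imgs, fake_imgs, rng, p_distort=0.5):
    # Label real as 0 and synthetic as 1, randomly distort both alike, then shuffle.
    items = [(img, 0) for img in real_imgs] + [(img, 1) for img in fake_imgs]
    out = []
    for img, label in items:
        if rng.random() < p_distort:
            img = color_shift(img, rng)
        if rng.random() < p_distort:
            img = quantize(img)
        if rng.random() < p_distort:
            img = motion_blur(img)
        out.append((img, label))
    order = rng.permutation(len(out))
    return [out[i] for i in order]


rng = np.random.default_rng(0)
real_imgs = [rng.random((8, 8, 3)) for _ in range(3)]  # stand-ins for real photos
fake_imgs = [rng.random((8, 8, 3)) for _ in range(2)]  # stand-ins for generator output
challenge = build_challenge(real_imgs, fake_imgs, rng)
```

The same distortions are applied to real and synthetic items alike, so a detector can’t learn to flag “blurry” or “compressed” as a shortcut for “fake.”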

BitMind also integrates uncertainty quantification, meaning the AI can say not just “this is fake,” but also “this is fake, and I’m 95% confident.”

It’s a crucial step toward trust — a system that admits when it’s unsure instead of bluffing.
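At the output end, “admitting it’s unsure” can be as simple as abstaining below a confidence threshold. The sketch assumes the detector emits a probability `p_fake`; the function name and the 0.9 threshold are hypothetical. A raw model probability only means “95% confident” after calibration (for example, temperature scaling). The source doesn’t say which uncertainty method BitMind uses.

```python
from typing import Tuple


def classify_with_abstention(p_fake: float, threshold: float = 0.9) -> Tuple[str, float]:
    """Return (label, confidence); label is 'uncertain' when confidence < threshold."""
    if not 0.0 <= p_fake <= 1.0:
        raise ValueError("p_fake must be a probability in [0, 1]")
    label = "fake" if p_fake >= 0.5 else "real"
    confidence = max(p_fake, 1.0 - p_fake)  # probability of the predicted class
    if confidence < threshold:
        return "uncertain", confidence
    return label, confidence


verdict = classify_with_abstention(0.95)
```

Raising `threshold` trades coverage for reliability: the system answers less often, but its answers are more likely to be right.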

Open Competition as a Defense Strategy

If you’ve followed Bittensor, you know its magic lies in open incentives. Subnets like BitMind’s GAS thrive because they turn innovation into a public sport — not a secret.

And it’s working. The subnet’s results are already influencing enterprise and academic research circles. Its public leaderboard outpaces many corporate detection systems that have spent years optimizing behind closed doors.

Of course, it’s not without risks. Incentives can be gamed. Closed-source generators like OpenAI’s and Google’s pose blind spots. Regulatory pressure looms.

But as BitMind’s whitepaper puts it, “the only systems that improve are those under attack.”

Why This Matters

Deepfakes aren’t slowing down — they’re accelerating. Traditional research moves too slowly and corporations won’t open their black boxes. Regulation lags years behind.

So the only viable defense is one that evolves at the same speed as the threat.

That’s what GAS represents: an immune system for digital media — one that learns, mutates, and strengthens with every attempt to deceive it.

Toward a Decentralized Immune System for Truth

BitMind’s GAS isn’t perfect. It’s messy, chaotic, and experimental. But so is the world it’s trying to protect.

We’re entering an era where you can’t trust your eyes, where video evidence is negotiable, and where the line between reality and simulation blurs by the hour.

The platforms won’t save us. The regulators are late and the corporations are complicit, which leaves one path: open competition.

Because the best defense against artificial lies isn’t control — it’s chaos.

It’s thousands of miners, validators, and builders turning deception itself into a weapon for truth. If that works, BitMind’s GAS won’t just stop deepfakes.

It’ll prove something bigger: that decentralized intelligence can defend reality itself.
