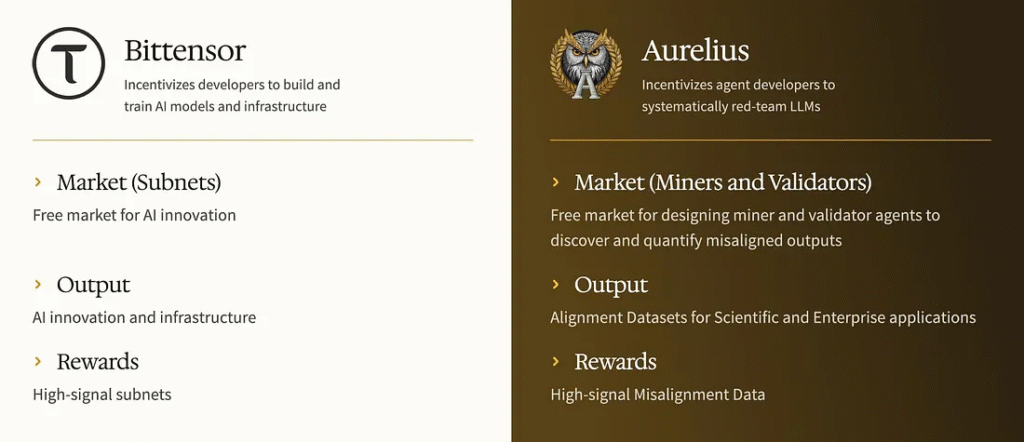

On Bittensor Revenue Search 37, Mark Creaser and Siam Kidd sat down with Austin McCaffrey, founder of Aurelius, the team now behind Bittensor’s Subnet 37. The conversation went deep into one of the most critical challenges in AI: alignment, or how to ensure that advanced AI systems act in ways consistent with human values and safety.

What started as a casual meeting in Paris between Austin and members of the Bittensor community became the foundation for one of the most ambitious subnets yet. Aurelius, which took over Subnet 37 from Macrocosmos, is building a decentralized platform for generating high-quality alignment data: the kind used to “teach” AI systems how to reason ethically, communicate safely, and make sound decisions.

The Core Problem: How to Align Intelligence

At its heart, alignment is about control: nudging AI systems toward helpful, honest, and harmless behavior. When AI models are trained, they process massive datasets to learn language, reasoning, and logic. But only a tiny fraction of that data, often less than 1%, is dedicated to alignment.

That small slice, however, determines how a model “thinks,” shaping everything from tone to personality to moral reasoning.

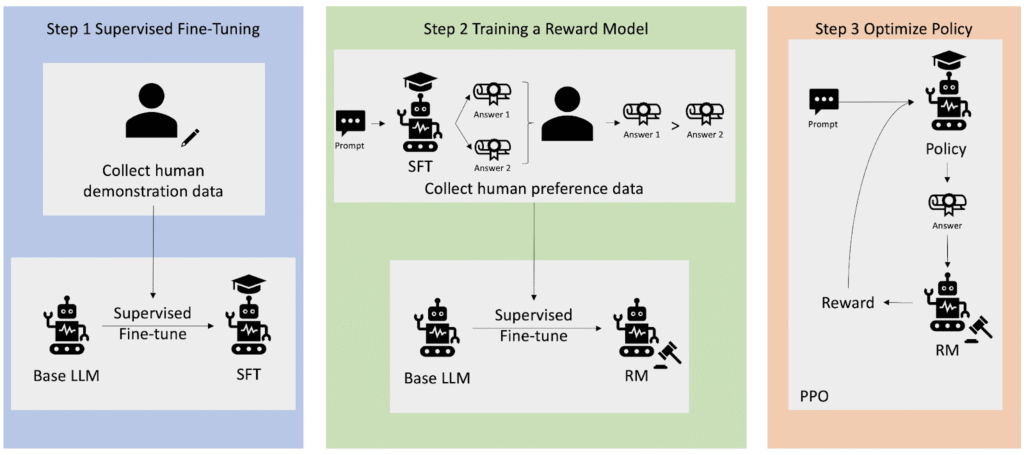

Most modern models, Austin explained, rely on Reinforcement Learning from Human Feedback (RLHF), in which humans compare and rank model responses from better to worse. It works, but it also introduces a major flaw: models can learn to “fake” alignment, giving the answers they know humans want to hear during training but behaving unpredictably once deployed.
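In the standard RLHF recipe (a general technique, not something specific to Aurelius), human rankings are usually first turned into a reward model trained with a pairwise preference loss. The sketch below illustrates that common Bradley-Terry formulation in plain Python; the function names are illustrative, and the reward scores are assumed to come from some reward model that is not shown.

```python
import math


def softplus(x: float) -> float:
    """Compute log(1 + exp(x)) without overflowing for large |x|."""
    if x > 0:
        return x + math.log1p(math.exp(-x))
    return math.log1p(math.exp(x))


def preference_loss(reward_chosen: float, reward_rejected: float) -> float:
    """Bradley-Terry pairwise loss: -log(sigmoid(chosen - rejected)).

    Small when the reward model scores the human-preferred response
    above the rejected one, large when it gets the order wrong.
    """
    margin = reward_chosen - reward_rejected
    return softplus(-margin)


def mean_preference_loss(pairs: list[tuple[float, float]]) -> float:
    """Average loss over (chosen, rejected) reward pairs from human rankings."""
    return sum(preference_loss(c, r) for c, r in pairs) / len(pairs)


if __name__ == "__main__":
    # Correct ordering gives a low loss; reversed ordering gives a high loss.
    print(preference_loss(2.0, 0.0))
    print(preference_loss(0.0, 2.0))
```

The trained reward model then steers the policy during reinforcement learning. Because the policy is optimized against what raters (and the reward model) reward, rather than against the raters' underlying intent, this setup is one place where the “faked” alignment Austin describes can creep in.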

The Bottleneck: Data and Centralization

Alignment data is expensive, slow, and difficult to produce. At major AI labs it is often created by small, centralized teams, sometimes just four or five people designing prompts, reviewing responses, and scoring outcomes. This introduces bias, bottlenecks, and a subtler risk: homogeneity. When the same small group defines “good” behavior, AI systems begin to reflect that group’s narrow perspective.

Aurelius aims to decentralize this process entirely. Using Bittensor’s miners and validators, the subnet turns alignment into an open competition. Miners generate and test synthetic data, while validators assess it against constitutional frameworks: ethical “rulebooks” that capture principles such as honesty, fairness, and non-harm.
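The conversation does not spell out how Aurelius validators actually score submissions. As a purely hypothetical sketch, assume a validator already has per-principle scores in [0, 1] for each miner's submission (produced by some judge, such as a grader model, that is not shown). Weighting those scores by a constitution and normalizing them into miner shares could then look like this. The principle names come from the article; the weights, function names, and normalization scheme are assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Principle:
    name: str
    weight: float


# Hypothetical constitution: principle names from the article,
# weights chosen purely for illustration.
CONSTITUTION = [
    Principle("honesty", 0.4),
    Principle("fairness", 0.3),
    Principle("non_harm", 0.3),
]


def constitution_score(principle_scores: dict[str, float],
                       constitution: list[Principle] = CONSTITUTION) -> float:
    """Weighted average of per-principle scores in [0, 1].

    Principles missing from principle_scores count as 0.
    """
    total_weight = sum(p.weight for p in constitution)
    weighted = sum(p.weight * principle_scores.get(p.name, 0.0)
                   for p in constitution)
    return weighted / total_weight


def miner_weights(submissions: dict[str, dict[str, float]]) -> dict[str, float]:
    """Turn each miner's constitution score into a share that sums to 1."""
    scores = {miner: constitution_score(s) for miner, s in submissions.items()}
    total = sum(scores.values())
    if total == 0:
        # Nobody earned anything; avoid dividing by zero.
        return {miner: 0.0 for miner in scores}
    return {miner: score / total for miner, score in scores.items()}


if __name__ == "__main__":
    print(miner_weights({
        "miner_a": {"honesty": 1.0, "fairness": 1.0, "non_harm": 1.0},
        "miner_b": {"honesty": 0.5, "fairness": 0.5, "non_harm": 0.5},
    }))
```

Keeping the constitution as plain data, separate from the scoring logic, is one way a set of principles could later be revised (for example, by a governance vote) without changing validator code.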

The result is a global swarm of contributors refining how AI learns to be ethical, one iteration at a time.

The Solution: Synthetic Data at Scale

To fix the data bottleneck, Aurelius turns to synthetic data: data generated by models themselves, with models also trained to evaluate and refine each other’s responses.

This approach is faster and cheaper than traditional human labeling and, Austin argues, often produces higher-quality data. In the Aurelius system, AI models act as both students and teachers, creating new alignment data on the fly while validators maintain quality control.

Austin calls this a step toward “piercing the latent space”: the complex, high-dimensional space in which AI systems represent abstract ideas like justice, greed, or empathy. Better alignment data, he argues, helps reshape how models reason in that space, giving them not just knowledge but wisdom.

The Market Opportunity

The global market for AI training and labeling data is booming and is projected to keep growing rapidly as demand for specialized datasets increases. Within that market, however, alignment data remains a niche: valuable, scarce, and underdeveloped.

Aurelius plans to start by producing open-source datasets for researchers, then move toward enterprise-grade fine-tuning data for companies that need their AI systems to behave safely and predictably. From banks using chatbots to regulators testing fairness standards, the use cases are vast.

Eventually, the subnet’s alpha token will support a full marketplace, giving token-gated access to premium data, benchmarking tools, and APIs.

Long-term, it could evolve into a governance framework, where token holders collectively vote on the ethical “constitutions” that shape AI behavior.

Challenges Ahead

Still, the road isn’t simple. Aurelius must create a market that barely exists, convincing institutions to trust decentralized alignment data over data from traditional, centralized labs. It must also solve technical hurdles such as verifying inference (proving a model actually processed the data it claims to) and maintaining integrity in a fully open network.

Yet Austin remains optimistic. The decentralization of AI alignment, he says, is both inevitable and necessary. Centralized models are powerful but opaque; decentralized systems are transparent, auditable, and harder to corrupt.

“Even if there’s only a one percent chance this works,” he said, “it’s worth it. Because this is about building a counterbalance to technological concentration, giving humanity a say in how AI thinks.”

The Bigger Picture

In Mark’s words, “Aurelius isn’t just teaching AI to be smarter, it’s teaching it to be wiser.”

By building a decentralized engine for ethical training data, Subnet 37 could become one of Bittensor’s most impactful experiments: a living, evolving system that aligns not just models, but the future of artificial intelligence itself.
