DeepSeek AI made waves on December 1, 2025, with the launch of V3.2 and V3.2-Speciale. These aren’t your average chat models: they perform on par with big-name models like GPT-5 and Gemini 3.0 Pro while using a fraction of the computing power. Even better? They’re open-source and dirt cheap to run.

The launch highlights a big trend in 2025: open-source AI that anyone can use for free is getting really good, and services like Chutes make it simple for beginners to try it without needing powerful computers.

What is DeepSeek?

DeepSeek is a Chinese AI research group focused on cracking what’s called Artificial General Intelligence (AI that can handle almost any task a human can, not just one specific thing). But they go about it differently. Instead of spending billions on massive computers, they use clever tricks to make AI smarter for less money.

Their models are known for being:

- fast,

- inexpensive,

- and strong at deep reasoning tasks like math, code, and decision-making.

Over time, DeepSeek has released models like V2, V3, DeepSeekMath, and the popular DeepSeek-OCR, a lightweight tool that reads documents with 97% accuracy. Their biggest innovation is DeepSeek Sparse Attention (DSA), a method that lets the AI scan text quickly and focus only on the most important information. This reduces compute costs by 70–90% compared to larger models.
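To make the "focus only on the most important information" idea concrete, here is a minimal sketch of generic top-k sparse attention in plain Python. It is not DeepSeek's implementation: the article describes DSA only at a high level, and the function name `topk_sparse_attention`, the toy vectors, and the choice of `k` are all assumptions made for illustration. Note that this toy version still scores every key before picking the top k, so it shows the selection idea rather than the real compute savings.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def topk_sparse_attention(query, keys, values, k):
    """Attend only to the k keys that score highest against the query.

    Illustrative only: a generic top-k scheme, not DeepSeek's DSA.
    Returns the attention output and the sorted indices that were kept.
    """
    scale = 1.0 / math.sqrt(len(query))
    scores = [dot(query, key) * scale for key in keys]
    # Keep the k highest-scoring positions; every other position is ignored.
    keep = sorted(range(len(keys)), key=lambda i: scores[i], reverse=True)[:k]
    weights = softmax([scores[i] for i in keep])
    dim = len(values[0])
    out = [0.0] * dim
    for w, i in zip(weights, keep):
        for d in range(dim):
            out[d] += w * values[i][d]
    return out, sorted(keep)

# Toy example: four tokens, but the query only attends to the best two.
query = [1.0, 0.0]
keys = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [2.0, 0.0]]
values = [[1.0, 0.0], [0.0, 1.0], [5.0, 5.0], [0.0, 2.0]]
output, kept = topk_sparse_attention(query, keys, values, k=2)
print(kept)
```

With `k` equal to the number of keys, the function falls back to ordinary dense attention, which is a handy way to check how much the output changes when positions are dropped.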

All their models are MIT-licensed, meaning anyone can download them from Hugging Face and modify or run them freely. Their API pricing sits at $0.28–$0.70 per million tokens, up to 30 times cheaper than rivals like Google’s Gemini.
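For a feel of what that pricing means in practice, here is a small back-of-the-envelope calculation. The two prices come from the range quoted above; the 5-million-token workload and the helper name `cost_usd` are made up for illustration, and real bills depend on how input and output tokens are priced.

```python
def cost_usd(tokens, price_per_million_usd):
    """Cost of processing `tokens` tokens at a flat per-million-token price."""
    return tokens / 1_000_000 * price_per_million_usd

# Price range quoted in the article, in USD per million tokens.
LOW_PRICE, HIGH_PRICE = 0.28, 0.70

# Hypothetical workload: 5 million tokens.
tokens = 5_000_000
low = cost_usd(tokens, LOW_PRICE)
high = cost_usd(tokens, HIGH_PRICE)
print(f"{low:.2f} to {high:.2f} USD")  # prints "1.40 to 3.50 USD"
```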

What’s New in DeepSeek-V3.2

DeepSeek’s December 1 post kicked off with “Launching DeepSeek-V3.2 & DeepSeek-V3.2-Speciale — Reasoning-first models built for agents!” Here’s what dropped:

- DeepSeek-V3.2, a balanced, everyday model that runs on their app and website and still matches GPT-5 performance.

- DeepSeek-V3.2-Speciale, a more powerful, API-only version for tougher tasks, with deeper thinking, stronger logic, and benchmark scores that rival closed models like Gemini 3.0 Pro.

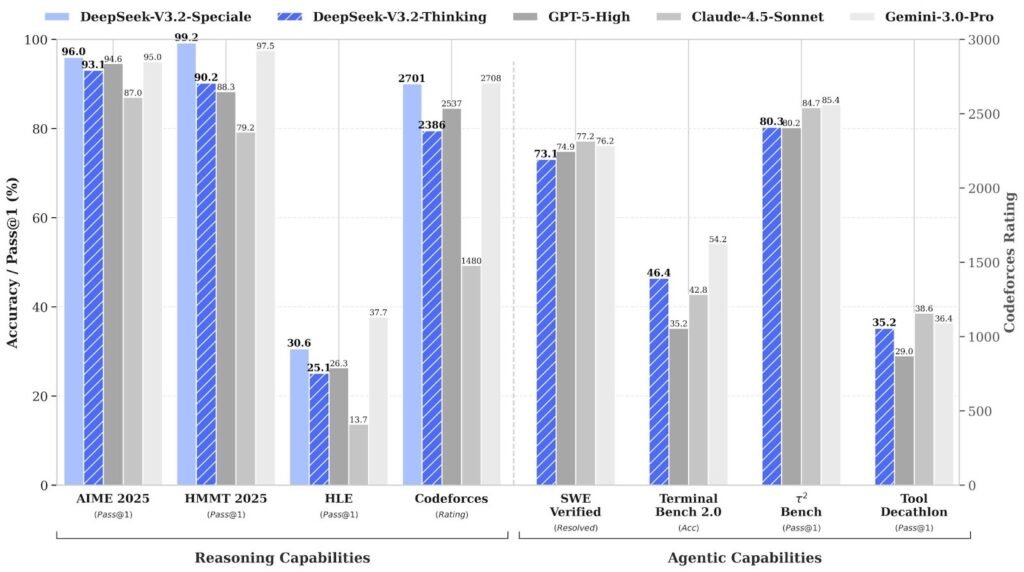

- Benchmark scores, with gold medals in the International Mathematical Olympiad (IMO), Chinese Mathematical Olympiad (CMO), and coding competitions like ICPC and IOI 2025. The models hit 97% on AIME math tests (beating GPT-5’s 94.6%), 90% on coding challenges (on LiveCodeBench), and 76.2% on software bug-fixing tasks.

The secret behind these jumps is DeepSeek’s new training pipeline, which draws training data from 1,800+ environments and 85,000+ complex instructions. It introduces “thinking in tool-use,” where the AI reasons out loud while calling on external tools.
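As a rough picture of what reasoning while calling tools can look like, here is a toy agent loop. Everything in it is hypothetical: `scripted_model` is a hard-coded stand-in for a real model, `calculator` is a made-up tool, and the step format is invented for this sketch. It does not reflect how DeepSeek trains or serves its models; it only shows the shape of the loop, where each thought stays in the transcript alongside the tool results.

```python
import operator

OPS = {"+": operator.add, "-": operator.sub, "*": operator.mul, "/": operator.truediv}

def calculator(expression):
    """Hypothetical tool: evaluate a simple 'a op b' expression."""
    left, op, right = expression.split()
    return OPS[op](float(left), float(right))

TOOLS = {"calculator": calculator}

def scripted_model(transcript):
    """Stand-in for a real model: picks the next step from the transcript so far."""
    results = [s for s in transcript if s["type"] == "tool_result"]
    if not results:
        return {"type": "tool_call", "thought": "I need the product first.",
                "tool": "calculator", "input": "12 * 7"}
    return {"type": "final", "thought": "The tool gave me the product.",
            "answer": f"12 * 7 = {results[-1]['output']:g}"}

def run_agent(model, question, max_steps=5):
    """Alternate between model steps and tool calls until a final answer appears."""
    transcript = [{"type": "question", "text": question}]
    for _ in range(max_steps):
        step = model(transcript)
        transcript.append(step)  # the thought is kept, so later steps can build on it
        if step["type"] == "final":
            return step["answer"], transcript
        output = TOOLS[step["tool"]](step["input"])
        transcript.append({"type": "tool_result", "output": output})
    raise RuntimeError("no final answer within max_steps")

answer, transcript = run_agent(scripted_model, "What is 12 times 7?")
print(answer)  # prints "12 * 7 = 84"
```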

Why This All Matters

AI costs a lot. Google spent over $100 billion on AI in 2025. DeepSeek shows you can build great AI with smart work, not just money.

This closes the gap between free, open-source AI and expensive, secret AI from big companies. The lag between the two has shrunk from years to months.

Their models offer:

- high reasoning quality,

- and very low compute needs.

This opens the door for students, indie hackers, startups, and researchers to use high-end AI without huge budgets.

But it’s not perfect yet. V3.2 sometimes uses extra tokens, which raises costs on long tasks. It has bugs, can get stuck repeating itself, and struggles with strict output formats. A chess test on December 3 showed that it plays smart but sometimes makes illegal moves.

Where Chutes Comes In

Chutes is a platform that lets anyone run AI models without expensive computers (GPUs). It’s “decentralized” (spread across computers worldwide) and “serverless” (Chutes handles everything). Think AWS for AI, but cheaper and community-run.

Right after DeepSeek announced V3.2, AI engineer Jon Durbin shared that the new models had been deployed on Chutes within hours. This shows how quickly the open-source ecosystem can adapt.

Chutes now supports:

- DeepSeek-V3.2,

- DeepSeek-V3.2-Speciale,

- and older versions like DeepSeek-R1.

It also runs more than 60 other models, including Kimi K2, Mistral, and various image/audio generators.

How DeepSeek Works Inside Chutes

Running DeepSeek on Chutes is simple but powerful:

- Chutes provides the compute. No GPU? No problem. Chutes routes requests to decentralized nodes that keep models “hot” for fast inference.

- DeepSeek’s efficiency makes it affordable. Because DeepSeek uses DSA and a lightweight architecture, it runs smoothly on Chutes without needing expensive hardware. This keeps the cost extremely low for users.

- Instant deployment. Developers can load the model, run a prompt, or integrate with their app using an OpenAI-compatible API. No setup, no servers, no GPU tuning.

- Perfect for agents. DeepSeek models are built for reasoning steps and tool use. Chutes supports long contexts and persistent sessions, making it a great environment for research agents, coding assistants, data analyzers, and autonomous workflows.

Together, DeepSeek’s reasoning and Chutes’ compute layer create an ecosystem where anyone can build advanced agent systems at minimal cost.
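Since the integration path mentioned above is an OpenAI-compatible API, a request can be sketched with nothing but Python's standard library. The `/chat/completions` path and the `model` plus `messages` payload follow the OpenAI chat format; the base URL, API key, and model name you pass in are placeholders you would need to take from the provider's documentation, and the helper names `build_chat_request` and `ask` are made up for this example.

```python
import json
import urllib.request

def build_chat_request(base_url, api_key, model, prompt):
    """Build an OpenAI-style chat completion request without sending it."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )

def ask(base_url, api_key, model, prompt):
    """Send the request and return the text of the first reply."""
    request = build_chat_request(base_url, api_key, model, prompt)
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

A call would look like `ask(BASE_URL, API_KEY, MODEL_NAME, "Summarize this article in one sentence.")`, where all three constants are values you supply yourself. Keeping the request builder separate from the network call makes it easy to inspect or test the payload before spending any tokens.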

What’s Next?

DeepSeek and Chutes represent a new era where AI becomes:

- open,

- efficient,

- and accessible to everyone, not just large tech companies.

DeepSeek pushes model intelligence forward.

Chutes removes the hardware barrier and adds training features and apps.

The result is a global ecosystem where powerful AI becomes a common tool for learning, building, and creating.

Explore:

- DeepSeek at deepseek.com

- Download models at huggingface.co/deepseek-ai

- Try Chutes at chutes.ai

- Follow: @deepseek_ai, @jon_durbin, @chutes_ai on X
