Covenant AI has announced the launch of Covenant72B, its first large-scale, 72-billion-parameter decentralized AI training run. The initiative is a significant step for both the team and the broader vision of open, distributed AI development on the Bittensor network.

What Is Covenant AI?

Covenant AI is building the foundation for decentralized, large-scale AI training through a trio of interconnected platforms running on Bittensor:

a. Templar, on Bittensor Subnet 3: decentralized pre-training

b. Basilica, on Bittensor Subnet 39: decentralized compute infrastructure

c. Grail, on Bittensor Subnet 81: decentralized reinforcement learning post-training

These platforms aim to replace the centralized data centers of traditional AI labs with permissionless, globally distributed participation, allowing anyone with sufficient compute to contribute to training models at scale.

Inside the Templar System

Templar, Covenant’s flagship pre-training platform, coordinates contributors around the world using novel algorithms that address one of decentralized AI’s toughest challenges: communication efficiency.

Through innovations like SparseLoCo, a communication-efficient optimizer, and Gauntlet, a blockchain-based incentive mechanism, Templar enables transparent, auditable, and reward-driven collaboration among participants.
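To give a feel for what "communication-efficient" can mean in practice, here is a minimal sketch of one widely used idea: send only the largest entries of each update and carry the rest forward to the next round (top-k sparsification with error feedback). This is an illustration of the general technique, not a description of SparseLoCo's actual implementation, which the announcement does not detail. The function names `topk_sparsify` and `communicate_step` and the sample numbers are hypothetical.

```python
def topk_sparsify(update, k):
    """Keep the k largest-magnitude entries of an update.

    Returns (sparse, residual): `sparse` maps index -> value for the kept
    entries, and `residual` is a dense list holding everything not sent.
    """
    # Indices of the k entries with the largest absolute value.
    keep = sorted(range(len(update)), key=lambda i: abs(update[i]), reverse=True)[:k]
    sparse = {i: update[i] for i in keep}
    residual = [0.0 if i in sparse else v for i, v in enumerate(update)]
    return sparse, residual


def communicate_step(local_update, error_buffer, k):
    """One round: add back previously unsent error, then send only the top k."""
    corrected = [u + e for u, e in zip(local_update, error_buffer)]
    sparse, new_error = topk_sparsify(corrected, k)
    return sparse, new_error


# Hypothetical 8-value update; only 2 values are transmitted this round.
update = [0.5, -0.01, 0.02, -0.9, 0.03, 0.0, 0.1, -0.04]
error = [0.0] * len(update)
sent, error = communicate_step(update, error, k=2)
print(sent)   # the two entries that would be transmitted
print(error)  # what is held back and added to the next round's update
```

In this toy case a peer transmits 2 of 8 values, and the unsent remainder is not discarded but folded into the following round, which is what lets aggressive compression remain usable over many steps.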

Participants can join freely by creating an object storage bucket and posting access keys on-chain. This ensures all contributions are traceable, verifiable, and rewarded based on performance rather than privilege.

What Is Covenant72B?

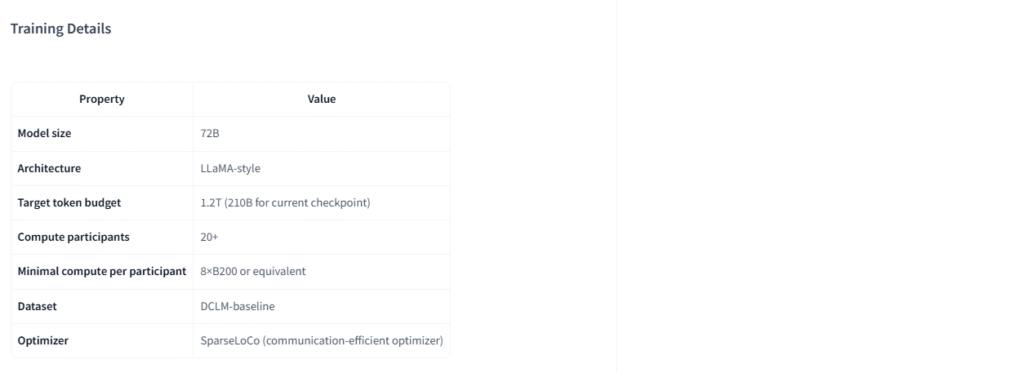

Covenant72B is Covenant AI’s first massive-scale, decentralized pre-training experiment, featuring:

a. 72 billion parameter model size

b. LLaMA-style architecture

c. 1.2 trillion token training dataset

d. 20+ global compute node participants

e. SparseLoCo optimizer
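For a rough sense of scale, the figures above can be combined in a back-of-the-envelope calculation. The sketch below assumes the common rule of thumb that training a dense transformer costs about 6 floating-point operations per parameter per token. That rule is an assumption brought in for illustration, not a figure reported by Covenant AI, and the function names are hypothetical.

```python
PARAMS = 72e9     # 72 billion parameters (from the announcement)
TOKENS = 1.2e12   # 1.2 trillion training tokens (from the announcement)


def tokens_per_parameter(params, tokens):
    """How many training tokens the run sees per model parameter."""
    return tokens / params


def approx_training_flops(params, tokens, flops_per_param_token=6):
    """Rule-of-thumb estimate: ~6 FLOPs per parameter per token (assumed)."""
    return flops_per_param_token * params * tokens


ratio = tokens_per_parameter(PARAMS, TOKENS)
flops = approx_training_flops(PARAMS, TOKENS)
print(f"tokens per parameter: {ratio:.1f}")
print(f"approximate training compute: {flops:.3e} FLOPs")
```

Under these assumptions the run works out to roughly 16.7 tokens per parameter and on the order of 5 × 10^23 FLOPs in total, to be shared across the participating nodes.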

Launched on September 12, this training run demonstrates that permissionless, decentralized pre-training can rival the performance of centralized AI labs — at a fraction of the cost and with complete transparency.

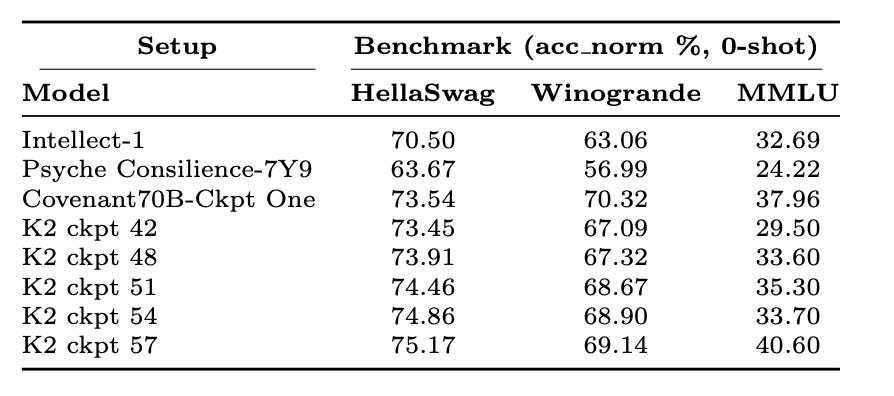

Performance and Early Results

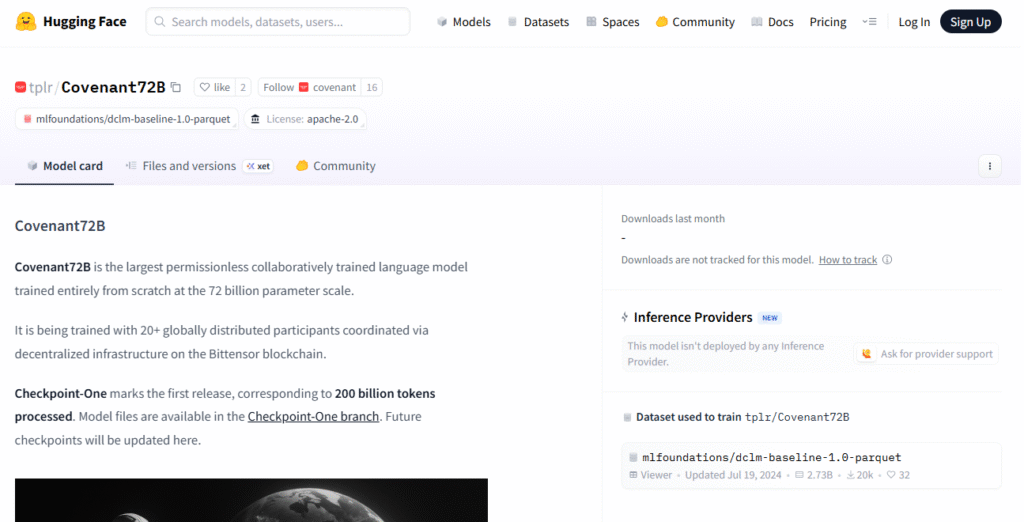

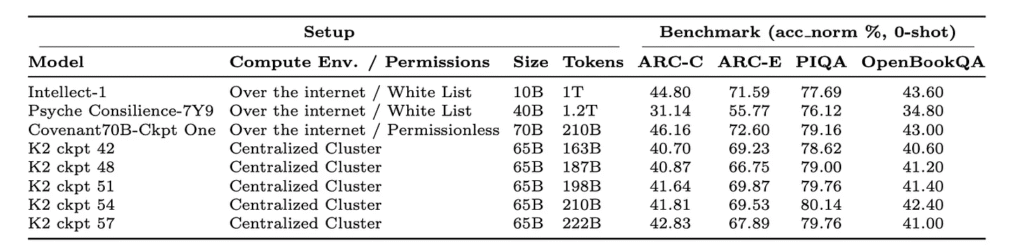

Early results from Checkpoint One (now live on Hugging Face) show that Covenant72B already outperforms previous decentralized pre-training attempts across all benchmarks.

Compared to K2, a similarly sized model trained in a centralized data center, Covenant72B is competitive and even surpasses it on several benchmarks, such as ARC-C and ARC-E.

This near-parity with a centrally trained baseline is an early but notable validation of decentralized AI training.

Overcoming Technical Challenges

Like any large-scale run, Covenant72B faced hurdles such as validator stability and peer synchronization. The team responded with system upgrades, better reward calibration, and improved validator redundancy to maintain seamless operation.

Despite the model’s scale, Covenant’s reported communication overhead remains low at just 6%, suggesting that large-scale distributed training can be both efficient and reliable.

What’s Next?

The team plans to continue releasing checkpoints and performance data throughout the training cycle. Upcoming initiatives include:

a. Speed optimizations to reduce known bottlenecks

b. Expanded participation, allowing more decentralized miners to contribute

c. Integration with Basilica and Grail, completing the decentralized AI pipeline from pre-training to post-training

Why It Matters

Covenant72B proves that open, decentralized collaboration can produce AI models competitive with the world’s largest centralized labs.

By leveraging Bittensor’s blockchain to coordinate incentives and infrastructure, Covenant is paving the way for a global, permissionless AI economy — one that is transparent, auditable, and owned by the people who build it.

Resources

To explore Templar and follow updates, see the official channels:

Website: https://www.tplr.ai/

X (formerly Twitter): https://x.com/tplr_ai

GitHub: https://github.com/tplr-ai/templar

Discord: https://discord.gg/N5xgygBJ9r

Substack: https://substack.com/@templarresearch
