In 2020, training a frontier AI model cost roughly $5 million. By 2023, that figure had climbed to $50 to $100 million.

Today, estimates range from $500 million to over $1 billion.
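Taking the 2020 and 2023 figures at face value, the implied compound annual growth in training cost over those three years works out as follows (a rough calculation in millions of dollars that ignores differences in how each estimate was made):

$$
\left(\frac{50}{5}\right)^{1/3} \approx 2.15
\qquad\text{to}\qquad
\left(\frac{100}{5}\right)^{1/3} \approx 2.71
$$

In other words, frontier training costs roughly doubled to nearly tripled every year over that period.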

The compounding curve tells a clear story. Artificial intelligence is not just improving; it is concentrating.

A small cluster of labs, including OpenAI, Google, Anthropic, Meta, and Microsoft, increasingly control the models, the data pipelines, the compute infrastructure, and the economic upside.

For investors, access is limited. For developers, dependency is structural. For startups, margins are squeezed.

Khala Research frames this as the concentration problem of AI; their proposed counterweight is not another lab.

It is a market.

The Core Idea: Intelligence as an Open Commodity

Bittensor is structured as a decentralized marketplace for AI services.

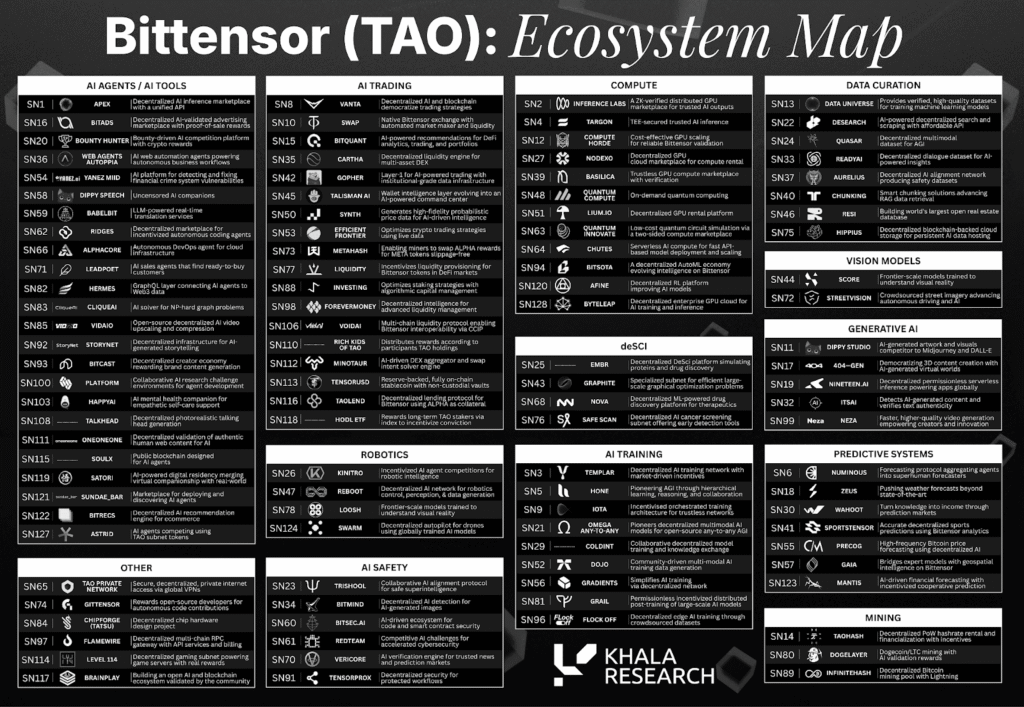

Instead of one organization training one model, the network consists of 128 independent subnets. Each subnet is a competitive arena where miners produce AI outputs and are rewarded in $TAO based on performance quality.

Khala Research describes this as “the Intelligence Olympics.”
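To make the incentive concrete, the sketch below shows one simple way a subnet could split a fixed per-epoch $TAO emission across miners in proportion to validator-assigned quality scores. It is a deliberate simplification: Bittensor's actual mechanism (Yuma Consensus, which aggregates stake-weighted scores from many validators) is more involved, and the function name, scores, and emission amount here are hypothetical.

```python
from typing import Dict


def allocate_emissions(scores: Dict[str, float], epoch_emission: float) -> Dict[str, float]:
    """Split a fixed emission budget across miners in proportion to their scores.

    Hypothetical simplification: real subnets aggregate many validators'
    scores (weighted by stake) before emissions are distributed.
    """
    # Negative scores are clipped to zero so they cannot claim a share.
    clipped = {miner: max(score, 0.0) for miner, score in scores.items()}
    total = sum(clipped.values())
    if total == 0:
        # Nobody produced value this epoch, so nobody is paid.
        return {miner: 0.0 for miner in clipped}
    return {miner: epoch_emission * s / total for miner, s in clipped.items()}


if __name__ == "__main__":
    # Hypothetical quality scores assigned by validators for one epoch.
    scores = {"miner_a": 0.9, "miner_b": 0.6, "miner_c": 0.0}
    for miner, reward in allocate_emissions(scores, epoch_emission=1.0).items():
        print(f"{miner}: {reward:.2f} TAO")
```

In this toy model, a miner scoring 0.9 against a peer's 0.6 takes 60% of the epoch's emission, while a miner scoring zero earns nothing. That relative, zero-sum split is the competitive pressure the structure relies on.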

The structure rests on three principles:

a. Permissionless Participation: Anyone can contribute if they produce value,

b. Continuous Competition: Underperformers lose emissions to better performers, and

c. Token-Based Capital Efficiency: Growth does not require traditional venture fundraising.

In theory, this is where crypto excels: incentive coordination without gatekeepers. The question was always whether it would translate into real infrastructure.

Over the past 12 months, the ecosystem appears to have crossed an important threshold.

From Experiment to Commercial Traction

According to Khala Research, several indicators suggest maturation:

a. Fiat Revenue at Scale: Chutes (Subnet 64) running above $5.5 million in annualized revenue,

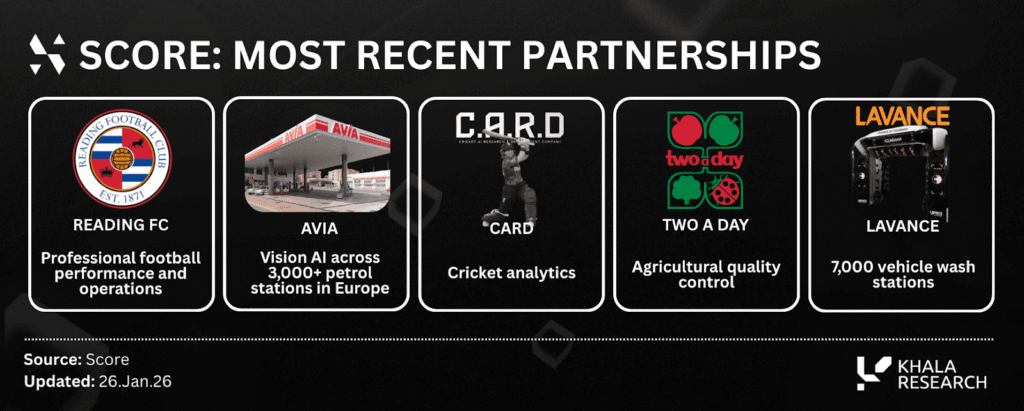

b. Enterprise Contracts: Deployments with Reading FC, 3,000+ AVIA petrol stations, and large payments firms under NDA,

c. Institutional Capital Participation: Trust products and subnet-focused funds entering the ecosystem,

d. Academic Validation: Research from Templar (Subnet 3) accepted at the NeurIPS OPT2025 workshop (Optimization for Machine Learning), and

e. Technical Milestones: The largest permissionless pre-training run conducted to date.

The key shift is simple: real customers are paying real money.

That materially changes the risk profile, from a theoretical coordination experiment to early-stage AI infrastructure.

Understanding the Subnet Economy

If Bittensor is the Olympics, subnets are the events.

Each subnet operates independently, with its own miners, validators, incentives, and market focus. They generally fall into three layers of the AI value chain:

1. Pre-Training: Building foundation models from scratch,

2. Inference: Serving trained models in production environments, and

3. Specialized Applications: Targeted verticals such as computer vision, compliance, or drug discovery.

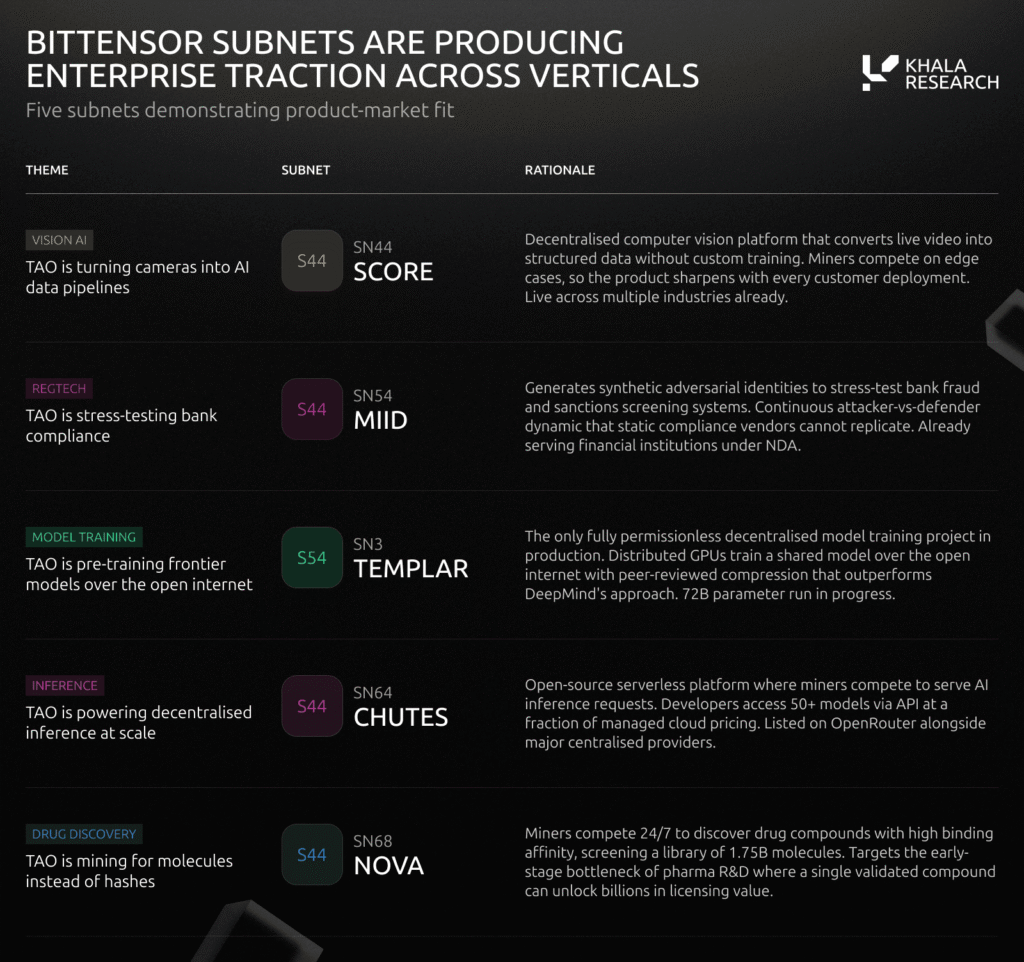

Khala Research profiles five subnets across these layers to illustrate the breadth of the ecosystem.

Five Subnets, Five Markets

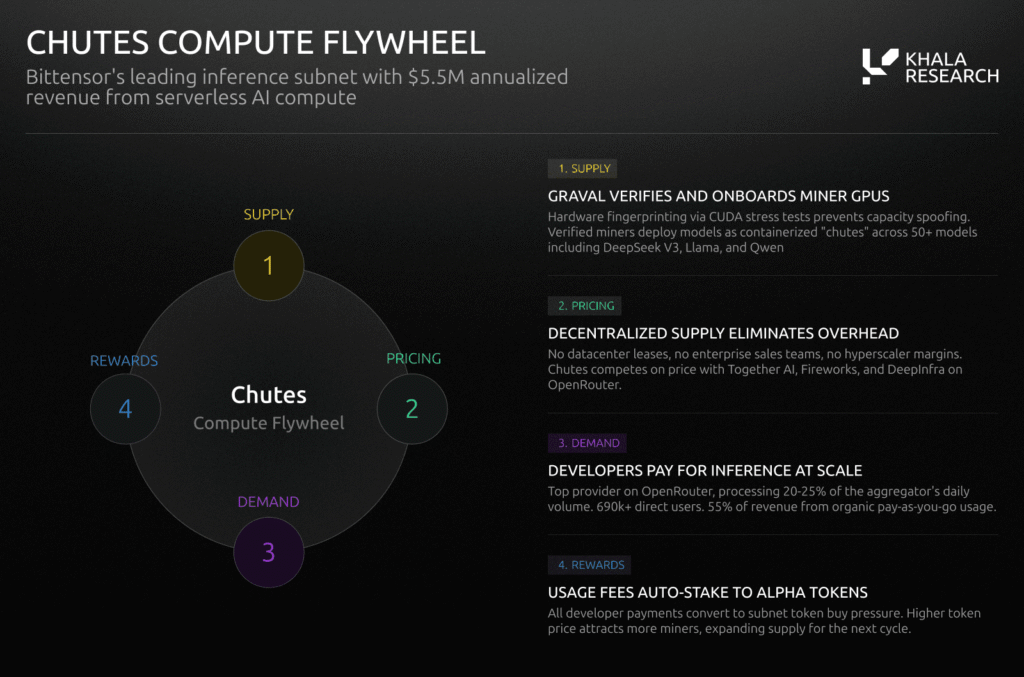

1. Chutes (Subnet 64): Decentralized Inference at Scale

Chutes converts decentralized GPU (Graphics Processing Unit) supply into production AI inference. It processes approximately 120 billion tokens per day and has handled more than 34 trillion tokens lifetime.
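For a sense of scale, dividing the lifetime figure by the current daily rate gives a rough lower bound on how long it took to accumulate that total (assuming daily volume has never been higher than today's), alongside the per-second throughput the daily figure implies:

$$
\frac{34 \times 10^{12}\ \text{tokens}}{120 \times 10^{9}\ \text{tokens/day}} \approx 283\ \text{days},
\qquad
\frac{120 \times 10^{9}\ \text{tokens/day}}{86{,}400\ \text{s/day}} \approx 1.4 \times 10^{6}\ \text{tokens/s}
$$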

Chutes is also a top provider on OpenRouter, a major LLM (Large Language Model) API (Application Programming Interface) aggregator.

Key dynamics:

a. 75% of revenue is reported to come from organic usage,

b. PAYG (Pay-As-You-Go) dominates over subscription or bounty models, and

c. Transparent revenue dashboards signal measurable traction.

The structural differentiator lies in supply-side economics. $TAO emissions subsidize miners, underwriting a supply-side cost base that centralized startups must instead fund through venture capital.

The open question is sustainability: as sponsored demand declines, can organic revenue continue compounding?

2. SCORE (Subnet 44): Vision AI Across Industries

SCORE focuses on converting video feeds into structured data and has partnered with companies such as Reading FC, AVIA, and Lavance.

Unlike centralized platforms that require proprietary hardware or expensive cloud compute, SCORE routes edge cases to decentralized miners. Each new deployment generates new edge scenarios, which sharpen the network’s capabilities.

Reported advantages include:

a. 10 to 100 times lower cost than traditional providers,

b. Multi-vertical deployment across sports analytics and fuel stations, and

c. Continuous model improvement driven by competitive incentives.

Computer vision remains fragmented, and SCORE’s bet is that incentive-aligned iteration outpaces static enterprise tooling.

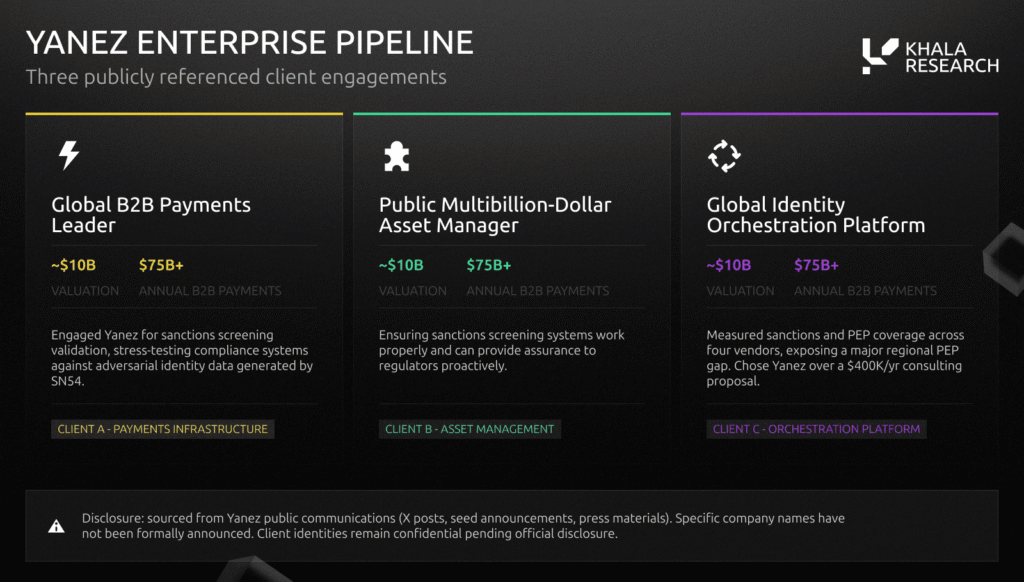

3. Yanez MIID (Subnet 54): Adversarial Compliance Testing

Financial institutions spend billions on KYC (Know Your Customer) and AML (Anti-Money Laundering) systems. Most rely on periodically updated datasets from centralized vendors.

MIID reverses the paradigm.

Miners generate synthetic identities and adversarial scenarios designed to stress-test real-world compliance systems. Validators reward submissions based on how effectively they expose weaknesses.

This creates a decentralized red team for financial crime prevention.
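The reward logic can be illustrated with a toy model. The sketch below assumes a validator runs each miner's synthetic name variants through a stand-in screening rule (fuzzy matching against a small watchlist, using Python's standard `difflib`) and scores the miner by the share of variants that slip through. The watchlist, threshold, function names, and scoring formula are all hypothetical; MIID's real validation logic is not described here.

```python
from difflib import SequenceMatcher
from typing import List

# Hypothetical watchlist the stand-in screener is meant to protect against.
WATCHLIST = ["john smith", "maria garcia"]


def screener_flags(name: str, threshold: float = 0.85) -> bool:
    """Stand-in compliance check: flag names similar to any watchlisted name."""
    # Normalize case and whitespace before comparing.
    normalized = " ".join(name.lower().split())
    return any(
        SequenceMatcher(None, normalized, listed).ratio() >= threshold
        for listed in WATCHLIST
    )


def red_team_score(variants: List[str]) -> float:
    """Score a miner by the fraction of its name variants the screener misses.

    Every variant is assumed to refer to a watchlisted person, so each miss
    counts as an exposed weakness. A real validator would also verify that
    variants stay plausible; this sketch omits that check.
    """
    if not variants:
        return 0.0
    missed = sum(1 for v in variants if not screener_flags(v))
    return missed / len(variants)


if __name__ == "__main__":
    # Hypothetical miner submission: variants of a watchlisted name.
    submissions = ["John Smith", "Jon Smith", "J. Smyth", "Smith John"]
    print(f"red-team score: {red_team_score(submissions):.2f}")
```

A higher score means the miner found more blind spots in the screener, which is the signal a compliance team would want surfaced. Without the omitted plausibility check, random strings would score perfectly, so any real scoring rule has to reward evasion and realism together.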

Compliance budgets are typically recession-resistant. If MIID converts its pipeline into durable contracts, it taps into one of the stickiest segments of enterprise software.

Risks remain. Enterprise sales cycles are long, and named client disclosures are limited.

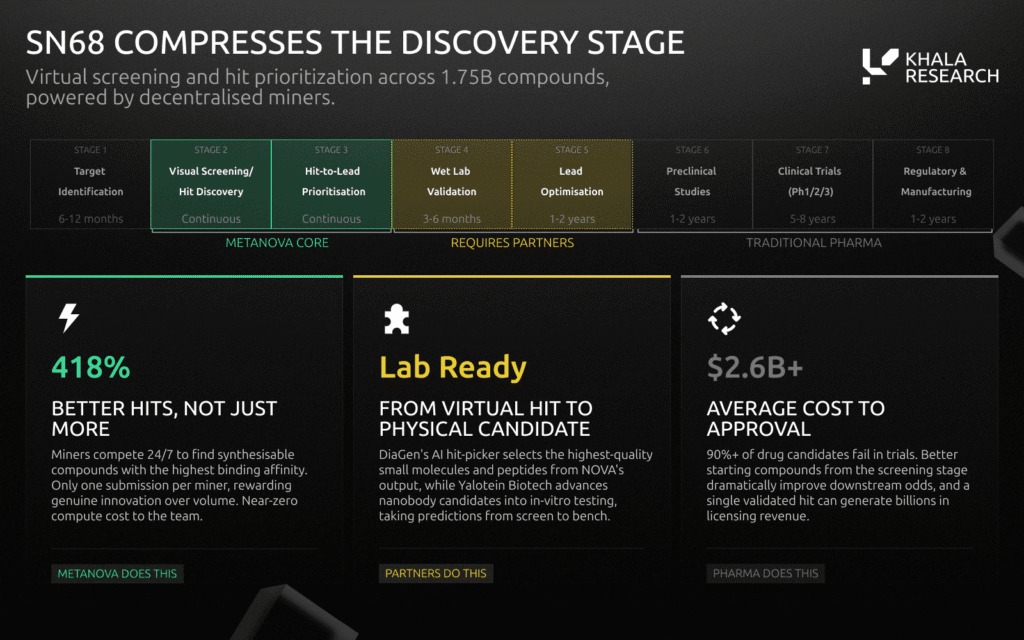

4. NOVA (Subnet 68): Mining for Molecules

NOVA crowdsources molecular design through competitive modeling. It runs two tracks:

a. Compound challenges for candidate molecules, and

b. Blueprint challenges for search algorithms.

NOVA reports a 418% increase in hit quality over baseline models.

Drug discovery is asymmetric. A single validated compound can justify years of R&D (Research and Development).

However, validation risk is substantial and requires physical lab testing.

NOVA represents the highest-variance opportunity within the ecosystem.

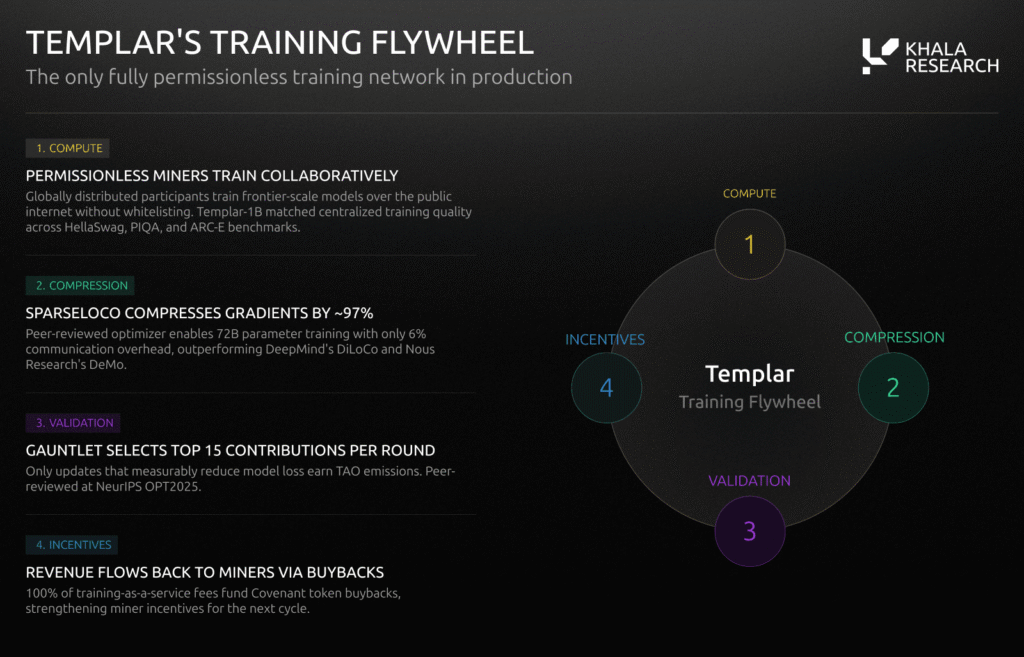

5. Templar (Subnet 3): Frontier Training Without Frontier Budgets

Templar challenges centralized frontier labs by coordinating global compute permissionlessly. Its key innovation, SparseLoCo (the research accepted at NeurIPS OPT2025), compresses gradient communication by approximately 97%, enabling large-scale collaborative training over the public internet.
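The general idea behind this kind of compression can be sketched with top-k gradient sparsification plus error feedback: each worker transmits only the largest ~3% of gradient entries by magnitude and carries the untransmitted remainder into its next step. This is a generic NumPy illustration with a hypothetical function name and keep fraction, not SparseLoCo itself, whose exact algorithm is specified in Templar's paper.

```python
import numpy as np


def topk_with_error_feedback(grad: np.ndarray, residual: np.ndarray, keep_frac: float = 0.03):
    """Return (indices, values, new_residual) for a top-k sparsified update.

    keep_frac=0.03 is a hypothetical setting chosen to mirror a ~97% cut in
    transmitted values; the cost of sending indices is ignored here.
    """
    corrected = grad + residual            # add back what was not sent last step
    flat = corrected.ravel()
    k = max(1, int(round(keep_frac * flat.size)))
    # Indices of the k largest-magnitude entries (in no particular order).
    idx = np.argpartition(np.abs(flat), -k)[-k:]
    values = flat[idx]
    new_residual = flat.copy()
    new_residual[idx] = 0.0                # sent entries leave the residual
    return idx, values, new_residual.reshape(grad.shape)


def densify(idx: np.ndarray, values: np.ndarray, shape) -> np.ndarray:
    """Receiver side: rebuild a dense update from the sparse message."""
    out = np.zeros(int(np.prod(shape)))
    out[idx] = values
    return out.reshape(shape)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    grad = rng.standard_normal((1000, 100))
    residual = np.zeros_like(grad)
    idx, vals, residual = topk_with_error_feedback(grad, residual)
    print(f"fraction of values sent: {vals.size / grad.size:.1%}")  # 3000 of 100000 -> 3.0%
    # Sent part plus residual reconstructs the error-corrected gradient exactly.
    assert np.allclose(densify(idx, vals, grad.shape) + residual, grad)
```

Error feedback is what keeps aggressive sparsification from silently discarding information: small gradient entries accumulate in the residual until they are large enough to be sent.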

Current decentralized training capacity remains significantly smaller than frontier data centers. However, decentralized compute growth rates have outpaced centralized infrastructure in recent years.

If benchmarks for Covenant 72B, Templar's 72-billion-parameter training run, match centralized baselines at equivalent scale, that would validate decentralized pre-training as viable infrastructure.

Catalysts and Risks

Khala Research outlines several forward indicators.

1. Catalysts to Watch

a. Organic revenue growth in Chutes,

b. Contract renewals and ARR (Annual Recurring Revenue) disclosures from SCORE and MIID,

c. Final benchmarks from Templar’s 72B run,

d. Wet lab validation for NOVA, and

e. Continued institutional capital flows.

2. Risks to Monitor

a. Competitive response from centralized AI providers,

b. Validator concentration within key subnets,

c. Revenue transparency and durability, and

d. $TAO supply dynamics relative to subnet expansion.

The opportunity rests on whether tokenized competition compounds faster than centralized capital.

The Valuation Gap

Centralized AI labs collectively command valuations exceeding $1 trillion. OpenAI alone has been valued near $500 billion in private markets.

By contrast, $TAO’s fully diluted valuation sits at a fraction of that.

The investment thesis is no longer whether decentralized coordination can function at all. The five subnets profiled are generating revenue, signing contracts, or publishing peer-reviewed research.

The question has shifted: How much of the AI infrastructure market can open competition capture?

Closing Reflection

A decade ago, few believed open-source software could rival enterprise incumbents. Yet it did: not by replacing them overnight, but by compounding quality in public.

Bittensor applies that same logic to intelligence itself. If AI becomes the defining infrastructure layer of the next decade, the architecture that coordinates its production will matter as much as the models themselves.

Khala Research’s framing is direct. This is not merely a token; it is an experiment in turning intelligence into a competitively priced, cryptographically verified commodity.

The “Olympics” have begun. Now, the market decides who wins.
