One of the hardest problems in decentralized AI is not generation but evaluation.

As AI systems move into open-ended domains like reasoning, creativity, and agentic behavior, judging quality becomes subjective, expensive, and difficult to verify on-chain. Traditional approaches rely on handcrafted metrics, spot checks, or delayed outcomes. All of them struggle at scale.

New research from Macrocosmos’ Apex (built on Bittensor’s Subnet 1) proposes a fundamentally different approach. Instead of trying to define quality explicitly, it lets quality emerge through competition.

The result is Generative Adversarial Mining, or GAM, a mechanism that could reshape how decentralized networks measure intelligence.

Why Evaluation Is the Bottleneck in Decentralized AI

Decentralized AI networks promise permissionless participation and global scale, but they face a structural constraint: most incentive mechanisms work well only when tasks are deterministic and easily verifiable.

That is enough for narrow problems such as data scraping or compute rental, but it breaks down when tasks are subjective, open-ended, or creative.

Without reliable evaluation, networks become vulnerable to collusion, gaming, or stagnation. Progress slows, and innovation becomes constrained by the limits of scoring rules rather than the capability of models.

This is the gap Apex is targeting.

From GANs to Incentives

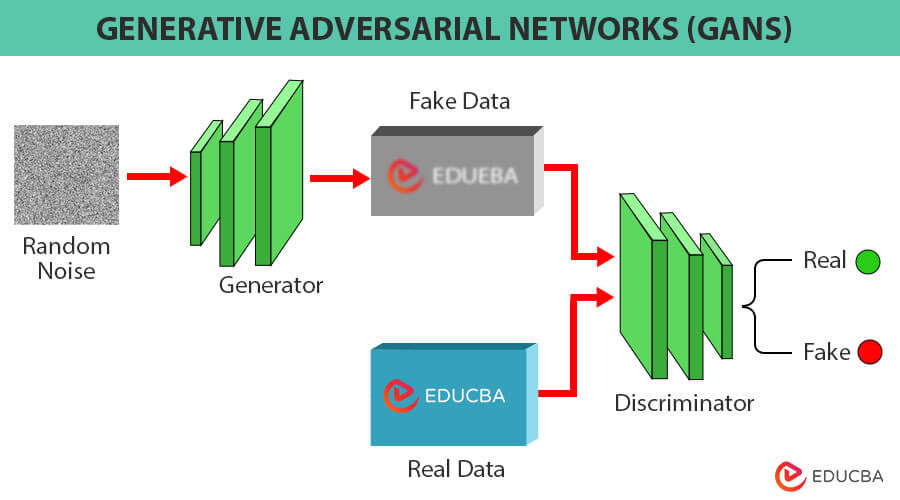

The idea behind Generative Adversarial Mining is inspired by a familiar concept in machine learning.

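Generative Adversarial Networks (GANs) improved image generation not by defining perfect metrics, but by forcing models to compete: a generator tries to produce realistic images while a discriminator tries to tell them apart from real ones.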

Apex applies the same principle to incentives.

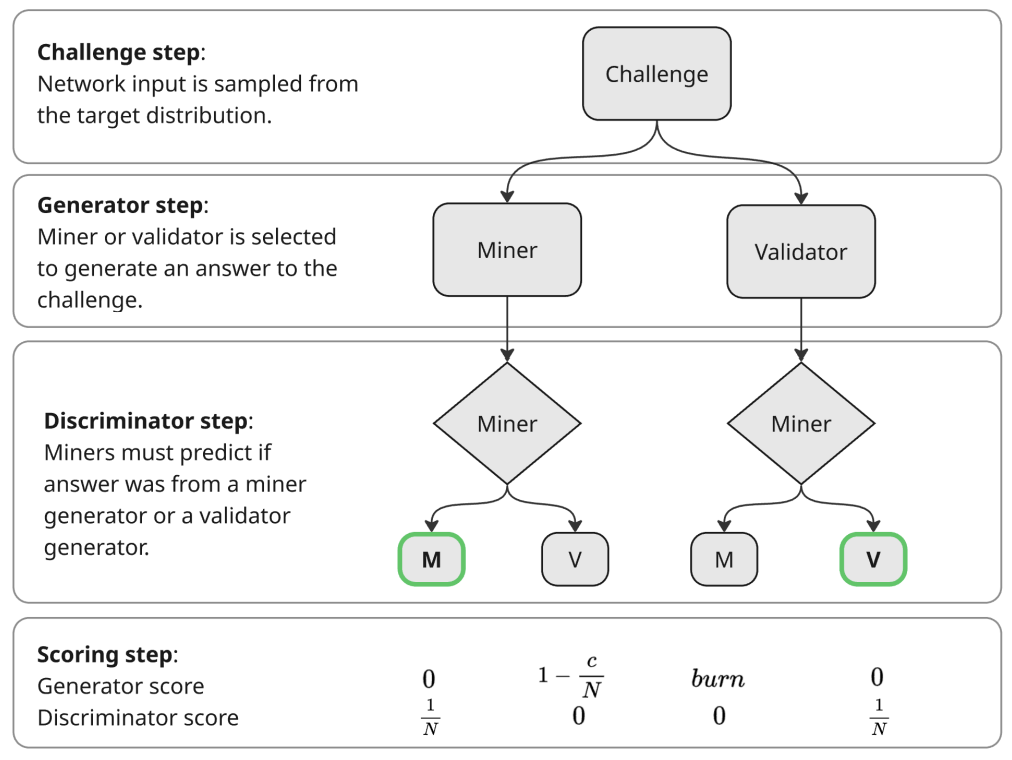

In GAM, participants alternate between two roles. Sometimes they generate outputs; at other times they try to identify where a given output came from.

Rewards are distributed through a strict zero-sum game, meaning every gain by one participant is matched by an equal loss by another.

There is no handcrafted scoring rubric and no fixed definition of quality. Instead, models are rewarded for producing outputs that are indistinguishable from those of stronger reference systems.

Quality is discovered, not prescribed.
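To make the zero-sum structure concrete, here is a minimal Python sketch of how rounds could be scored. It is not Apex's implementation: pairing one generator output with one validator reference, the fixed `stake` of 1.0, and the names `Round` and `settle` are all hypothetical, chosen only to illustrate the idea.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Round:
    """One hypothetical GAM-style round (an assumption, not Apex's protocol).

    The discriminator is shown the generator's output next to a validator
    reference output, in random order so it cannot score by always picking
    the same position, and must say which one is the reference.
    """
    generator: str
    discriminator: str
    discriminator_correct: bool


def settle(rounds: list[Round], stake: float = 1.0) -> dict[str, float]:
    """Apply a strict zero-sum payout: every unit won is a unit lost by the counterparty."""
    ledger: dict[str, float] = defaultdict(float)
    for r in rounds:
        if r.discriminator_correct:
            # The generator's output was distinguishable from the reference.
            ledger[r.discriminator] += stake
            ledger[r.generator] -= stake
        else:
            # The generator's output passed as reference quality.
            ledger[r.generator] += stake
            ledger[r.discriminator] -= stake
    return dict(ledger)


rounds = [
    Round("alice", "bob", discriminator_correct=False),  # alice fooled bob
    Round("bob", "alice", discriminator_correct=True),   # alice spotted bob's output
    Round("carol", "alice", discriminator_correct=True),  # alice spotted carol's output
    Round("alice", "carol", discriminator_correct=False),  # alice fooled carol
]
balances = settle(rounds)
print(balances)               # {'alice': 4.0, 'bob': -2.0, 'carol': -2.0}
print(sum(balances.values()))  # 0.0
```

Because each payout moves the same amount from one participant to another, balances always sum to zero. In this toy setup, a participant profits only by producing outputs that are hard to tell apart from the reference, or by reliably spotting outputs that are not.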

Why This Matters for Bittensor

Bittensor is built around subnets, each defining its own incentive mechanism for producing useful intelligence. Most existing subnets rely on explicit validation logic, which limits the kinds of problems they can support.

GAM changes that.

By merging generation and evaluation into a single adversarial process, Apex demonstrates that subnets can support open-ended learning without centralized oversight or fragile scoring systems.

This approach has several important implications:

First, it is cabal-resistant. The zero-sum structure, combined with reward burning, makes collusion unstable and ultimately unprofitable.

Second, rewards converge quickly to true skill. According to the research, even a small number of rounds reliably separates strong participants from weak ones.

Third, innovation is preserved. Miners must make genuine algorithmic improvements rather than simply optimize against static metrics.

Together, these properties point toward a more scalable foundation for decentralized intelligence.

Real-World Deployments, Not Just Theory

This work is not purely academic.

Generative Adversarial Mining is already live on Bittensor’s Subnet 1, where it is used for agentic text generation. Validators operate with larger resource budgets and produce strong reference outputs. Miners compete to approximate that quality under tighter constraints, which drives efficiency and distillation.

A similar adversarial structure is also running on BitMind (Subnet 34), focused on media generation and detection. In that setting, the same mechanism produces both better generators and stronger detectors, highlighting how a single incentive design can yield multiple valuable outputs.

These deployments show that GAM is not just a concept but a working system producing measurable progress.

A Step Toward Trustless Intelligence Measurement

What Apex is exploring goes beyond one subnet or one research paper. If decentralized networks can evaluate subjective intelligence through competition rather than rules, the design space for open AI systems expands dramatically. Problems that were previously too ambiguous or too costly to score become viable.

For Bittensor, this reinforces a core thesis: intelligence can be produced, measured, and rewarded without central control, as long as incentives are designed correctly.

Generative Adversarial Mining is not the final answer, but it is a meaningful step toward decentralized systems that can learn continuously, resist capture, and improve without being boxed in by fragile metrics.

In a field where evaluation is often the weakest link, Apex has shown that competition itself can become the measurement layer.
