Contributor: School of Crypto | Cover image credit: DreadBongo

Introduction

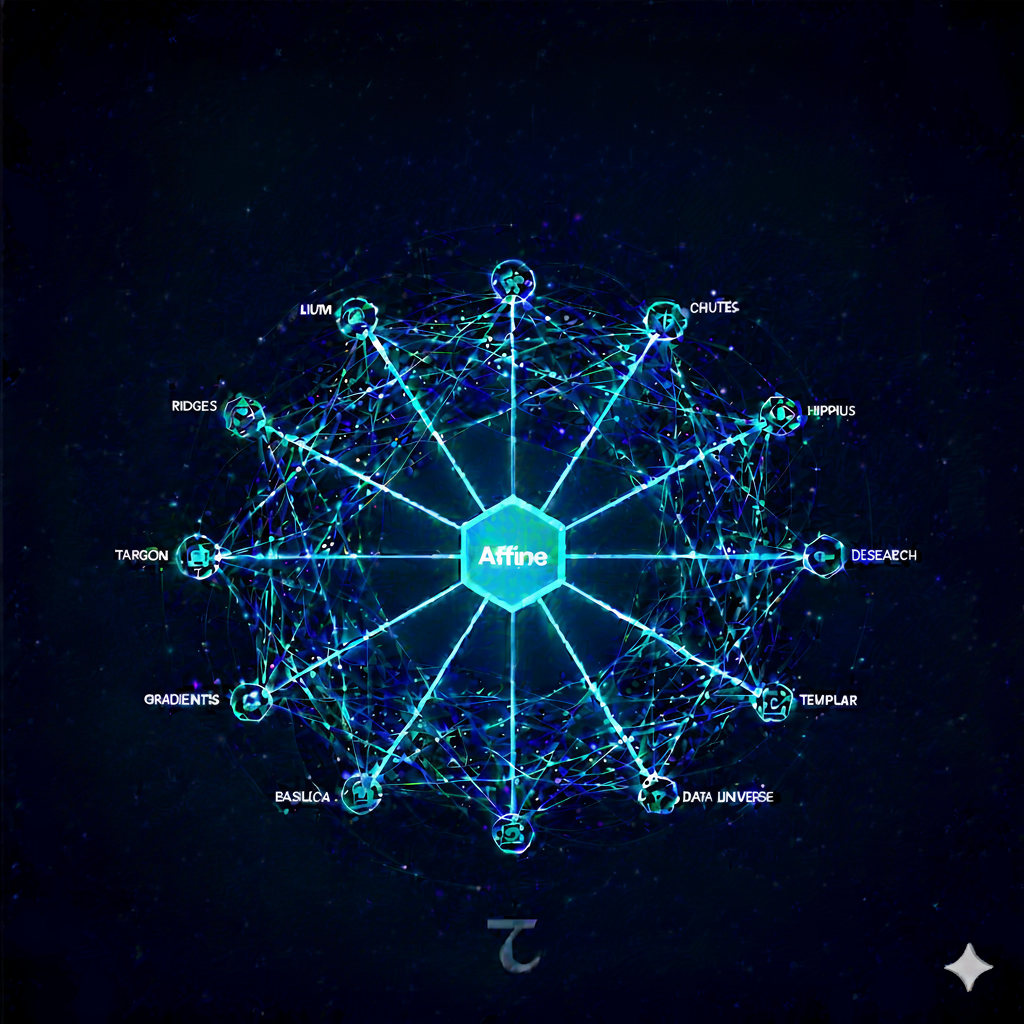

Being one of the 128 subnets in the Bittensor ecosystem creates an interesting dynamic. On one hand, each subnet has to compete with all the other projects for the largest possible share of emissions. On the other hand, it pays to use the services that other subnets provide.

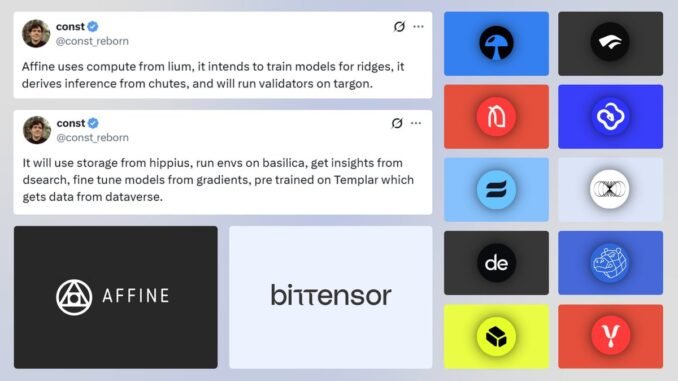

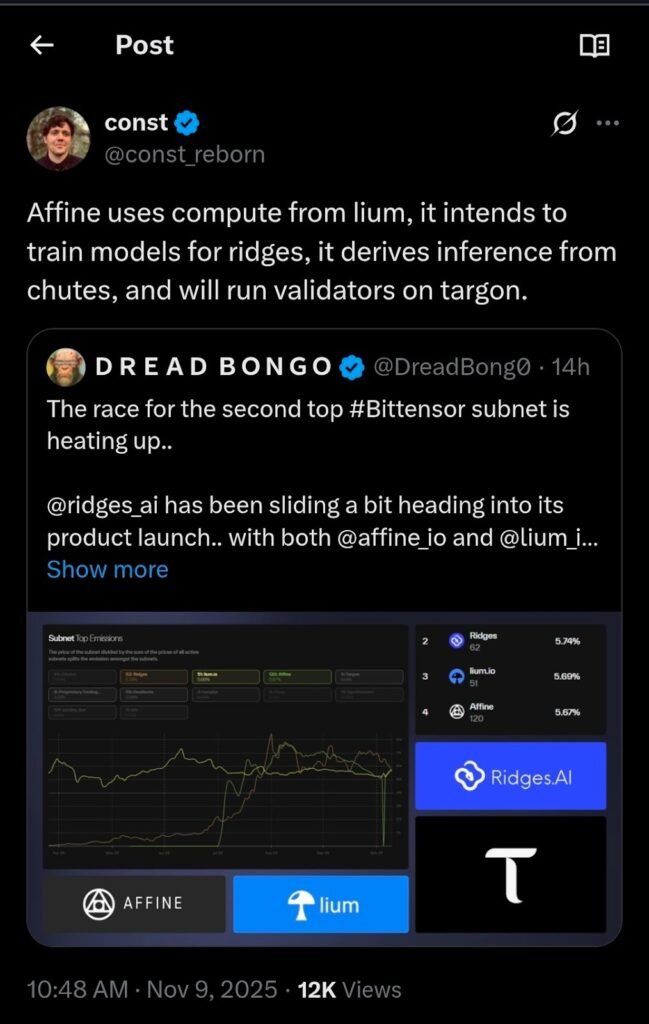

When Const, the co-founder of Bittensor, tweeted about how many subnets his project Affine uses and supports, it drew a lot of attention from the community.

Affine is a winner-take-all reinforcement learning (RL) subnet in Bittensor that pays miners to submit and improve AI models on tough tasks such as coding and program abduction.
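In general terms, "winner-take-all" means the reward goes entirely to the single best-performing miner instead of being split in proportion to scores. The short sketch below illustrates only that general idea. It is hypothetical and is not Affine's actual scoring or weight-setting code: the function name, the miner IDs, the scores, and the tie-breaking rule (the first miner listed with the top score wins) are all assumptions made for illustration.

```python
from typing import Dict


def winner_take_all(scores: Dict[str, float]) -> Dict[str, float]:
    """Give the whole reward share to the single highest-scoring miner.

    Hypothetical illustration only, not Affine's real mechanism.
    Assumed tie-break: the first miner (in dict order) with the top score wins.
    """
    if not scores:
        return {}
    # max() returns the first key with the highest score, so earlier entries win ties.
    winner = max(scores, key=scores.get)
    # Every other miner gets zero; the winner gets the full share.
    return {miner: (1.0 if miner == winner else 0.0) for miner in scores}


if __name__ == "__main__":
    # Hypothetical miner IDs and evaluation scores.
    example = {"miner_a": 0.61, "miner_b": 0.74, "miner_c": 0.70}
    print(winner_take_all(example))
```

Compared with a proportional split, this design gives miners a strong incentive to beat the current best model outright rather than submit something merely competitive.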

Overall, it channels open, global effort into accelerating AI capabilities, with the goal of making advanced reasoning cheap and pushing past current intelligence limits.

Now, let’s break down how Const and Affine use and support 10 subnets in the ecosystem.

Lium

Const opens his tweet by saying that Affine gets its GPU compute from Lium, a subnet focused on offering secure, trustless GPU rentals. Affine uses Lium’s GPUs to power its model training and overall operations.

Lium has hundreds of GPUs for rent at low prices, so Affine’s RL models now run on cheap, trustless compute.

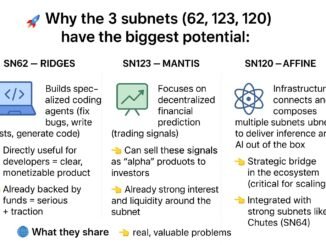

Ridges

After Lium, Const said Affine intends to train models for Ridges, the AI coding subnet. This is an interesting line in the tweet. Ridges uses models served on Chutes, the serverless compute subnet, and builds an agent layer on top of them to beat benchmarks such as SWE-bench.

Affine, a state-of-the-art reinforcement learning subnet, is offering its services to push Ridges over the top. As many in the community know, Ridges is trying to overtake Claude Code, Devin, and Cursor as the top AI coding agent in the world. By lending Affine’s RL models, Const is showing the community that he wants other promising projects to succeed.

Chutes

Affine uses Chutes for decentralized model inference: its validators incentivize miners to submit RL models directly to Chutes, where they are load-balanced across distributed GPUs for efficient serverless execution and made publicly available.

This lets Affine evaluate models across diverse RL environments without centralized infrastructure, drawing real-time inference from Chutes’ network of more than 60 hosted models at costs up to 85% lower than AWS. Ultimately, the integration allows Affine to advance AI reasoning with scalable, commoditized intelligence while outsourcing compute-intensive tasks.

Targon

Next up is running validators on Targon, subnet #4, which provides trusted compute and AI verification. Miners serve verifiable AI responses from LLMs, and validators then check those outputs for accuracy.

Drawing on its RL models, Affine aims to be one of Targon’s most trusted validators, helping ensure state-of-the-art outputs.

Hippius

Hippius, the storage subnet on Bittensor, is run by mogmachine, the founder of taostats. Centralized storage options like Dropbox, Google Drive, or Microsoft OneDrive usually cost an arm and a leg when you have many terabytes to park.

Storing data on Hippius is 400 to 2,000 times cheaper for Affine, with the same world-class service. Cheap, reliable, state-of-the-art storage for Affine on Hippius: check.

Desearch

Affine also queries Desearch, subnet #22, for various insights. Miners on the subnet pull data through APIs from sources such as YouTube, Google, Reddit, and X, then summarize the raw data.

Affine needs data and specific intel to define its RL environments, augment training sets, and validate outputs. Desearch supplies this data in real time, keeping Affine’s RL operations up to date.

Basilica

Running these reinforcement learning environments isn’t cheap, and it takes a ton of compute. Basilica provides high-performance GPU computing power.

Affine can tap Basilica’s powerful GPU infrastructure to train and test its models, all on a decentralized platform without relying on centralized cloud providers.

Gradients

Once an RL model has been trained and tested on Basilica’s trusted GPUs, Affine sends it off to Gradients, the fine-tuning subnet in Bittensor.

Fine-tuning means further training an existing model to improve it on specific tasks. Miners on Gradients compete against each other to produce the best improved version, so Affine gets back a better model than the one it sent out. But let’s take a step back.

Templar and Data Universe

Before Gradients fine-tuned the RL model, it was pre-trained on Templar, the distributed AI training subnet on Bittensor. That pre-training was partly powered by Data Universe, subnet #13, which scrapes social media data.

In short, Affine starts from a model pre-trained on Templar, a robust training subnet, using rich, structured data from Data Universe.

Beauty of Interconnectivity

What we are witnessing here is the beauty of interconnectedness in the Bittensor ecosystem. It’s no coincidence that Affine, the reinforcement learning subnet, is leading the pack in joining forces with all of these up-and-coming projects.

And of course, it’s only fitting that Bittensor’s co-founder would pioneer this strategy, aligning with his fellow subnets while building his own.

Competition is built into Bittensor and its subnets, but Const and Affine show that cooperation can ultimately help everyone succeed.
