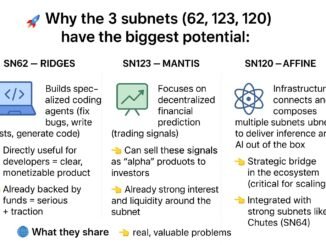

“We will mine reasoning for the world.” — Affine Foundation

Affine turns reasoning into a public commodity. It runs a decentralized reinforcement learning arena where miners train reasoning models and validators reward real improvement. Each cycle separates real progress from luck. Models must outperform across many tasks to survive. The result is continuous, open competition that pushes model quality forward at internet speed.

What Affine is, in short

- A competition platform where models are submitted, evaluated, and ranked across multiple reasoning environments.

- A marketplace with public alpha prices that reflect model value.

- A training-to-deploy loop: miners train models on Affine, Chutes (Subnet 64) serves them, users consume them, and the resulting revenue funds further development.

How it works

- Submission: Miners submit models via Chutes.

- Validation: Validators evaluate models across diverse environments.

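- Ranking: The best overall models are those on the Pareto frontier, meaning no other model scores at least as well in every environment and strictly better in at least one. This rewards models that perform across tasks.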

- Iteration: Miners copy and improve the best models. The next cycle repeats.

- Deployment: Winning models are queryable through Chutes. Revenue flows back to fund emissions and further evolution.
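The ranking step hinges on Pareto dominance. Below is a minimal Python sketch of that idea, assuming each model has one score per environment where higher is better. The model names, scores, and function names are hypothetical, and Affine's actual validator logic may add statistical margins or tie-breaking rules that this plain version omits.

```python
from typing import Dict, List

# Per-environment scores for one model; higher is better (hypothetical values below).
Scores = Dict[str, float]


def dominates(a: Scores, b: Scores, envs: List[str]) -> bool:
    """True if `a` is at least as good as `b` everywhere and strictly better somewhere."""
    at_least_as_good = all(a[e] >= b[e] for e in envs)
    strictly_better = any(a[e] > b[e] for e in envs)
    return at_least_as_good and strictly_better


def pareto_frontier(models: Dict[str, Scores]) -> List[str]:
    """Return the names of models that no other model dominates."""
    # Assumes every model was scored on the same set of environments.
    envs = sorted(next(iter(models.values())))
    frontier = []
    for name, scores in models.items():
        dominated = any(
            dominates(other, scores, envs)
            for other_name, other in models.items()
            if other_name != name
        )
        if not dominated:
            frontier.append(name)
    return frontier


if __name__ == "__main__":
    models = {
        "model_a": {"SAT": 0.80, "Deduction": 0.70, "WebShop": 0.60},
        "model_b": {"SAT": 0.90, "Deduction": 0.50, "WebShop": 0.65},
        "model_c": {"SAT": 0.75, "Deduction": 0.65, "WebShop": 0.55},
    }
    print(pareto_frontier(models))  # ['model_a', 'model_b']
```

Here `model_c` is dropped because `model_a` matches or beats it in every environment, while `model_a` and `model_b` both stay on the frontier because each is better than the other somewhere.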

The design goals

- General performance over narrow wins

Models must show consistent results across tasks such as coding, deduction, SAT solving, abduction, and web-based tasks.

- Anti-gaming

The system is sybil-proof, copy-proof, overfitting-proof, and decoy-proof. One-shot lucky runs are filtered out.

- Fast, open iteration

Changes happen in days or weeks rather than months, letting innovation compound quickly.
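One way to filter out one-shot lucky runs is to require a minimum sample count and then compare a pessimistic estimate of a challenger's pass rate against the incumbent. The sketch below swaps in the Wilson score lower bound for that estimate; `MIN_SAMPLES`, the 95% confidence level, and the function names are hypothetical choices for illustration, not Affine's published parameters.

```python
import math
from typing import Tuple

MIN_SAMPLES = 200  # hypothetical minimum number of evaluated samples


def wilson_lower_bound(successes: int, trials: int, z: float = 1.96) -> float:
    """Lower end of the Wilson score interval for a pass rate (z=1.96 is ~95%)."""
    if trials == 0:
        return 0.0
    p = successes / trials
    z2 = z * z
    centre = p + z2 / (2 * trials)
    margin = z * math.sqrt(p * (1 - p) / trials + z2 / (4 * trials * trials))
    return (centre - margin) / (1 + z2 / trials)


def is_real_improvement(challenger: Tuple[int, int], incumbent_rate: float,
                        min_samples: int = MIN_SAMPLES) -> bool:
    """Accept a challenger only if it has enough samples and its pessimistic
    pass-rate estimate still beats the incumbent's observed rate."""
    successes, trials = challenger
    if trials < min_samples:
        return False  # too few samples: could just be a lucky streak
    return wilson_lower_bound(successes, trials) > incumbent_rate


if __name__ == "__main__":
    print(is_real_improvement((9, 10), 0.70))     # False: too few samples
    print(is_real_improvement((150, 200), 0.70))  # False: lower bound is about 0.69
    print(is_real_improvement((700, 800), 0.80))  # True: lower bound is about 0.85
```

With these settings, a 9/10 streak is rejected for having too few samples, and 150/200 (75%) still fails to beat a 70% incumbent because its lower bound is only about 0.69, while 700/800 (87.5%) clears an 80% incumbent.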

Live signals and stats (sample snapshot)

- Total models submitted: 173

- Eligible models after filters: 70

- Environments used to score reasoning: 8 (WebShop, TextCraft, SciWorld, BabyAI, ALFWorld, SAT, Deduction, Abduction)

- Sample leaders:

  - carl107/affine-bee: thousands of samples, strong WebShop scores

  - Pavvav/Affine-world19: top in ALFWorld with 433+ validated samples

  - nntoan209/Affine-I3rveZ: rapid ascent into top ranks within days

- Alpha prices: public, dynamic, and market-driven, showing which models traders value

Why this matters

- Directed improvement

Teams can steer the competition toward specific goals. Want better code generation? Focus validation there. Want theorem proving? Point Affine at that. You get programmable evolution.

- Open participation

Thousands of miners can join. Success is meritocratic, not gatekept by large centralized labs.

- Faster research cycles

Continuous competition replaces long centralized training cycles with rapid, repeated improvements.

- Composability

Winning models are immediately usable by other subnets and apps via Chutes. That drives real utility and revenue.

Structural advantages over centralized systems

- Central labs typically run long, costly experiments and iterate slowly.

- Affine pays only for real, validated gains, encourages thousands of contributors, and produces rapid, repeated progress.

What the leaderboard and metrics prove

- Sustained performance wins. Top models show high sample counts and consistent cross-environment scores.

- Anti-gaming works. One-hit wonders are excluded. Overfit or duplicate identities get filtered out.

- Market engagement. Alpha prices and public stats show capital is already voting on reasoning approaches.
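Filtering duplicate identities can start with something as simple as fingerprinting submitted weights and keeping the earliest submission per fingerprint. This is a minimal sketch under stated assumptions: the `Submission` fields, the use of a block number for ordering, and SHA-256 over raw weight bytes are all hypothetical choices, not a description of Affine's validators.

```python
import hashlib
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Submission:
    miner: str      # hypothetical miner identifier
    block: int      # hypothetical commit height; the earliest copy is the one kept
    weights: bytes  # raw weight bytes (a real system would stream files from storage)


def fingerprint(weights: bytes) -> str:
    """Content hash of the weights; byte-identical models share a fingerprint."""
    return hashlib.sha256(weights).hexdigest()


def drop_duplicates(subs: List[Submission]) -> List[Submission]:
    """Keep only the earliest submission for each distinct weight fingerprint."""
    earliest: Dict[str, Submission] = {}
    for sub in sorted(subs, key=lambda s: s.block):
        earliest.setdefault(fingerprint(sub.weights), sub)
    return sorted(earliest.values(), key=lambda s: s.block)


if __name__ == "__main__":
    subs = [
        Submission("alice", 100, b"weights-v1"),
        Submission("bob", 120, b"weights-v1"),  # byte-identical copy of alice's model
        Submission("carol", 110, b"weights-v2"),
    ]
    print([s.miner for s in drop_duplicates(subs)])  # ['alice', 'carol']
```

Exact hashing only catches byte-identical copies. A copier who perturbs the weights slightly would slip past it, so a fuller defense would likely pair it with a rule that later submissions must clearly outperform earlier ones.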

The big picture

Affine is not just another research sandbox. It is an open, economic system that directs reinforcement learning toward measurable goals. If it succeeds, reasoning becomes a public good that anyone can use, buy, and build on. That outcome would increase demand for network tokens and make reasoning an interoperable piece of infrastructure across many applications.

If it fails, the experiment still proves how far decentralized coordination can push complex research. Either way, Affine is one of the boldest, most concrete attempts to make continuous, permissionless model improvement a working market.

TL;DR

Affine runs live, permissionless competition for reasoning models. It rewards sustained, cross-task performance, prevents gaming, and turns improvements into public, deployable models. This is directed evolution for reasoning at scale.
